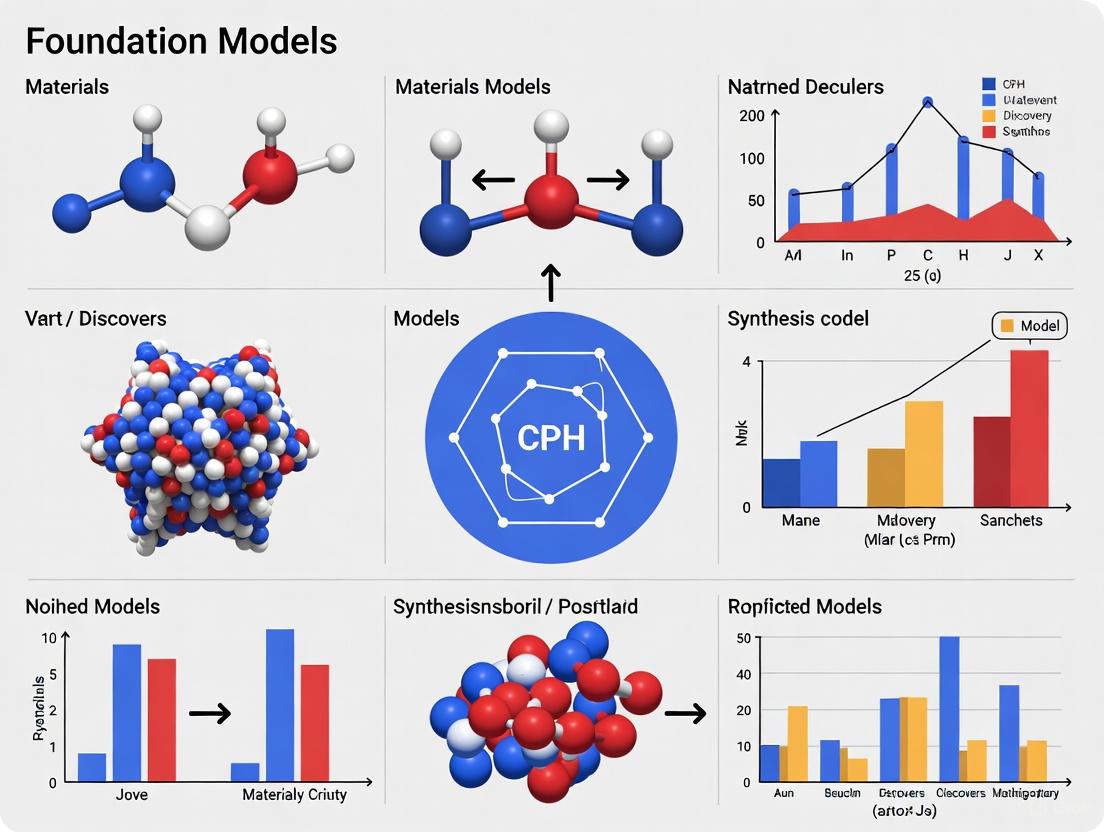

Foundation Models for Materials Discovery and Synthesis: A New Paradigm for AI-Driven Scientific Innovation

Abstract

Foundation models, large-scale AI systems pre-trained on vast datasets, are revolutionizing materials science and drug development. This article explores the current state and future directions of these models, covering their fundamental principles, key architectural approaches like transformer-based and graph neural networks, and their transformative applications in property prediction, inverse design, and synthesis planning. It further addresses critical challenges such as data scarcity and model generalization, while providing a comparative analysis of leading models and frameworks. Finally, it synthesizes key takeaways and outlines the future implications of this technology for accelerating biomedical research and clinical translation.

What Are Foundation Models and Why Are They Transforming Materials Science?

Foundation models are large-scale AI models trained on vast and diverse datasets, capable of being adapted to a wide range of downstream tasks [1]. In materials discovery, these models leverage self-supervised learning on extensive scientific data to accelerate the identification, design, and synthesis of novel materials with targeted properties [1]. Their emergence marks a paradigm shift from task-specific models to versatile, generalist AI tools that can handle complex scientific challenges, including molecular generation, property prediction, and synthesis planning. By learning fundamental representations from broad data, these models provide a powerful starting point for various applications in materials science and drug development, significantly reducing the need for large, labeled datasets for every new task [1] [2].

Core Architectures and Adaptation Mechanisms

Foundation models are characterized by their architectural flexibility and the methodologies used to adapt them to specific scientific domains.

Primary Model Architectures

Table 1: Key Architectures for Foundation Models in Materials Science

| Architecture Type | Core Function | Common Incarnations | Typical Applications in Materials Science |
|---|---|---|---|
| Encoder-Only | Builds contextual representations of input data for understanding tasks [1]. | BERT and its variants [1] [2]. | Property prediction from structure, named entity recognition from scientific literature [1]. |
| Decoder-Only | Generates new outputs sequentially, one token at a time [1]. | GPT models [1] [2]. | De novo molecular generation, synthesis route planning [1]. |
| Encoder-Decoder | Both understands complex inputs and generates output sequences. | Original Transformer [1]. | Tasks requiring full comprehension and generation, such as translating a material property description into a structure. |

Strategies for Downstream Task Adaptation

Adapting a pre-trained foundation model to specialized tasks in materials research is crucial for achieving high performance. Several key strategies exist:

Fine-Tuning: This process involves taking a pre-trained foundation model and further training it on a smaller, task-specific dataset. This updates the model's weights to specialize in the target domain, such as defect classification in casting products, where fine-tuning with 200 labeled examples improved accuracy from 83% to over 95% [2]. The process requires a curated, relevant dataset and careful training to avoid "catastrophic forgetting" of general knowledge [2].

Retrieval-Augmented Generation (RAG): RAG enhances a foundation model's knowledge by integrating an information retrieval system. When generating a response, the model first retrieves relevant documents from an external knowledge base (e.g., material databases or scientific literature) and uses this information to produce more accurate and context-specific outputs [2]. This is particularly valuable for incorporating the latest research findings not present in the model's original training data.
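The retrieval step of RAG can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the knowledge base is a toy in-memory list, documents are scored with bag-of-words cosine similarity (production systems typically use learned dense embeddings and a vector index), and the prompt template is hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased alphanumeric tokens; a stand-in for a real embedding model
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank knowledge-base entries by similarity to the query and keep the top k
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Prepend the retrieved passages as context for the model (hypothetical template)
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


knowledge_base = [
    "Silicon has an indirect band gap of about 1.1 eV at room temperature.",
    "Platinum is a common catalyst for the oxygen reduction reaction in fuel cells.",
    "Perovskite solar cells use lead halide absorbers with tunable band gaps.",
]
print(build_prompt("What is the band gap of silicon?", knowledge_base, k=1))
```

Because the retrieved text is inserted at query time, updating `knowledge_base` changes the model's context without retraining, which is what makes RAG useful for recent findings.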

Downstream Filtering and Alignment: This technique applies an additional layer of rules, or a separate model, to filter the foundation model's outputs. It helps ensure that generated content aligns with user preferences, such as chemical correctness, synthesizability, or safety guidelines, and reduces harmful or non-useful outputs [1] [2].
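A rule-based output filter of this kind might look as follows. The candidate fields (`sa_score`, `pred_solubility`), the thresholds, and the flagged fragments are hypothetical, and the substring check is only a crude stand-in for proper substructure matching with a cheminformatics toolkit.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    smiles: str
    sa_score: float         # hypothetical synthetic-accessibility score (lower = easier to make)
    pred_solubility: float  # hypothetical predicted log-solubility


# Hypothetical safety blocklist: nitro and azide groups in common SMILES spellings.
# Substring matching is a crude proxy; real pipelines use substructure search.
FLAGGED_FRAGMENTS = ("[N+](=O)[O-]", "N=[N+]=[N-]")


def passes_filters(c: Candidate, max_sa: float = 4.5, min_solubility: float = -4.0) -> bool:
    if not c.smiles:
        return False                      # an empty output is not a molecule
    if c.sa_score > max_sa:
        return False                      # synthesizability rule
    if c.pred_solubility < min_solubility:
        return False                      # property rule
    return not any(frag in c.smiles for frag in FLAGGED_FRAGMENTS)  # safety rule


def filter_outputs(candidates: list[Candidate]) -> list[Candidate]:
    return [c for c in candidates if passes_filters(c)]


generated = [
    Candidate("CCO", sa_score=1.0, pred_solubility=1.1),
    Candidate("c1ccccc1[N+](=O)[O-]", sa_score=1.5, pred_solubility=-1.8),
    Candidate("CCCCCCCCCCCCCCCCCC", sa_score=1.2, pred_solubility=-7.5),
]
print([c.smiles for c in filter_outputs(generated)])  # ['CCO']
```

Keeping each rule as a separate check makes it easy to log which preference rejected a candidate.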

Application Notes and Protocols for Materials Research

The following section provides detailed methodologies for implementing foundation models in key research applications.

Application Note 1: Oracle-Guided Generative Molecular Design

This protocol details the use of generative foundation models coupled with evaluative "oracles" for designing novel molecules with desired properties, a method highlighted by NVIDIA's BioNeMo Blueprint [3].

1. Experimental Aim: To create an autonomous, iterative workflow for generating and optimizing novel molecular structures targeted for specific therapeutic or functional properties.

2. Research Reagent Solutions:

Table 2: Key Components for Oracle-Guided Molecular Design

| Component | Function | Example Tools / Methods |
|---|---|---|
| Generative Foundation Model | Proposes novel molecular structures from scratch or fragments. | GenMol, MolMIM [3]. |
| Computational Oracle | A computational scoring function that evaluates proposed molecules based on desired outcomes. | Docking scores (e.g., DiffDock), ML-predicted affinity, QED, solubility predictors [3]. |
| Experimental Oracle (Ultimate Validator) | A physical assay or high-fidelity simulation that provides high-confidence validation. | In vitro binding assays, free energy perturbation (FEP) calculations, high-throughput screening [3]. |
| Fragment Library | A set of basic molecular building blocks used to seed the generative model. | Commercially available fragment libraries, BRICS-decomposed molecules [3]. |

3. Detailed Protocol:

Step 1: Initialization

- Define the target property for optimization (e.g., binding affinity to a specific protein, solubility).

- Select an appropriate generative model (e.g., GenMol NIM) and a corresponding computational oracle.

- Prepare an initial library of molecular fragments in SMILES format.

Step 2: Iterative Generation and Optimization Loop

Repeat for a predetermined number of cycles (e.g., 10 iterations):

- Generation: Use the generative model to create a large set of candidate molecules (e.g., 1000) from the current fragment library.

- Evaluation: Pass all generated molecules to the computational oracle to obtain a quantitative score (e.g., predicted binding affinity) for each.

- Selection and Ranking: Filter and rank the molecules based on their oracle scores. Select the top-performing candidates (e.g., top 100) that meet a minimum score threshold.

- Library Update: Decompose the top-performing molecules into new fragments (e.g., using BRICS decomposition) and update the fragment library for the next generation.
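The loop in Step 2 can be sketched in Python as a toy illustration; it is not the BioNeMo implementation. The functions `toy_generate`, `toy_oracle`, and `toy_decompose` are hypothetical stand-ins for a generative model such as GenMol, a computational oracle, and BRICS decomposition, and molecules are treated as plain strings.

```python
import random


def toy_generate(library, n, rng):
    # Stand-in for a generative model: join two library fragments into a candidate
    return [rng.choice(library) + rng.choice(library) for _ in range(n)]


def toy_oracle(mol):
    # Stand-in computational oracle: rewards aromatic carbons ('c'), penalizes size
    return mol.count("c") - 0.1 * len(mol)


def toy_decompose(mol):
    # Stand-in for BRICS decomposition: split the string into two halves
    mid = len(mol) // 2
    return [mol[:mid], mol[mid:]]


def oracle_guided_design(fragments, n_iter=10, n_gen=1000, top_k=100, min_score=0.0, seed=0):
    rng = random.Random(seed)
    library = sorted(set(fragments))
    best = []
    for _ in range(n_iter):
        candidates = toy_generate(library, n_gen, rng)                            # Generation
        scored = [(toy_oracle(m), m) for m in set(candidates)]                   # Evaluation
        ranked = sorted((s for s in scored if s[0] >= min_score), reverse=True)  # Selection and ranking
        if not ranked:
            break  # nothing met the threshold; keep the previous best set
        best = ranked[:top_k]
        # Library update: fragments of the top molecules seed the next round
        library = sorted({f for _, m in best for f in toy_decompose(m) if f})
    return best


top = oracle_guided_design(["c1ccccc1", "CCO", "cc", "N"], n_iter=3, n_gen=50, top_k=5)
for score, mol in top:
    print(f"{score:.2f}  {mol}")
```

In a real workflow, the oracle call is usually the expensive step, so the generation batch size and `top_k` are tuned against the oracle's throughput.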

Step 3: Experimental Validation

- Synthesize the top-ranked molecules from the final iteration.

- Validate the molecules using experimental oracles (e.g., in vitro assays) to confirm the predicted properties.

Diagram 1: Oracle-guided molecular design workflow.

Application Note 2: The ME-AI Framework for Interpretable Descriptor Discovery

This protocol outlines the Materials Expert-Artificial Intelligence (ME-AI) framework, which combines expert intuition with machine learning to uncover interpretable descriptors for predicting material properties, such as identifying topological semimetals (TSMs) [4].

1. Experimental Aim: To discover quantitative, human-interpretable descriptors that predict emergent material properties from a set of primary atomistic and structural features.

2. Research Reagent Solutions:

Table 3: Key Components for the ME-AI Framework

| Component | Function | Example in TSM Study [4] |
|---|---|---|
| Curated Experimental Dataset | A high-quality, expert-labeled dataset of materials and their properties. | 879 square-net compounds from ICSD, labeled as TSM or trivial. |
| Primary Features (PFs) | Readily available atomistic or structural features chosen based on expert intuition. | 12 features, including electronegativity, electron affinity, valence electron count, and the crystallographic distances d_sq and d_nn. |
| Chemistry-Aware Kernel | A Gaussian Process kernel that encodes chemical intuition, guiding the model to find meaningful correlations. | Dirichlet-based Gaussian Process model. |
| Expert Labeling | The process of classifying materials based on expert knowledge, including band structure analysis and chemical logic. | Labeling via band structure comparison (56%), chemical logic for alloys (38%). |

3. Detailed Protocol:

Step 1: Data Curation and Expert Labeling

- Define a specific class of materials to study (e.g., square-net compounds).

- Curate a dataset from reliable structural databases (e.g., Inorganic Crystal Structure Database, ICSD).

- For each material, extract a set of primary features (PFs) based on domain knowledge (e.g., electronegativity, key bond lengths).

- Label each material with the target property. This should leverage expert insight, such as direct analysis of experimental or computational band structures, and chemical logic for related compounds.

Step 2: Model Training and Descriptor Discovery

- Select a model suited for small, interpretable datasets. The ME-AI framework uses a Dirichlet-based Gaussian Process (GP) model with a chemistry-aware kernel.

- Train the GP model on the curated dataset of PFs and expert labels.

- Analyze the trained model to uncover the emergent descriptors—mathematical combinations of the PFs—that are most predictive of the target property.
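A minimal Dirichlet-based GP classifier can be sketched with NumPy, following the label transformation of Milios et al. (2018): one-hot labels become Dirichlet concentrations, which are turned into per-class regression targets with per-point noise. Assumptions: a generic RBF kernel stands in for ME-AI's chemistry-aware kernel, hyperparameters are fixed rather than optimized, the descriptor-analysis step is not shown, and the toy feature values are made up.

```python
import numpy as np


def rbf_kernel(A, B, length_scale=1.0):
    # Squared-exponential kernel on primary-feature vectors (stand-in for a chemistry-aware kernel)
    d2 = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-0.5 * np.maximum(d2, 0.0) / length_scale**2)


def dirichlet_gp_predict(X_train, y_train, X_test, n_classes=2, a_eps=0.01, length_scale=1.0):
    n = len(y_train)
    # Dirichlet concentrations from one-hot labels: alpha = y + a_eps
    alpha = np.full((n, n_classes), a_eps)
    alpha[np.arange(n), y_train] += 1.0
    # Log-normal moment matching gives regression targets and per-point noise variances
    noise = np.log(1.0 / alpha + 1.0)
    targets = np.log(alpha) - noise / 2.0
    K = rbf_kernel(X_train, X_train, length_scale)
    K_s = rbf_kernel(X_test, X_train, length_scale)
    latent = np.empty((len(X_test), n_classes))
    for c in range(n_classes):
        # Heteroscedastic GP regression per class, with a constant prior mean
        mu = targets[:, c].mean()
        weights = np.linalg.solve(K + np.diag(noise[:, c]), targets[:, c] - mu)
        latent[:, c] = mu + K_s @ weights
    # Latent means approximate log class concentrations; argmax gives the label
    return latent.argmax(axis=1), latent


# Toy primary features for two classes (0 = trivial, 1 = TSM); values are made up
X = np.array([[0.0, 0.0], [0.2, 0.1], [-0.1, 0.2], [3.0, 3.0], [3.2, 2.9], [2.9, 3.1]])
y = np.array([0, 0, 0, 1, 1, 1])
labels, _ = dirichlet_gp_predict(X, y, np.array([[0.1, -0.1], [3.1, 3.0]]))
print(labels)  # [0 1]
```

The appeal for small, expert-curated datasets is that a GP stays well-behaved with a few hundred points and its kernel can be inspected, which is where interpretable descriptors come from.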

Step 3: Model Validation and Generalization

- Evaluate the predictive performance of the discovered descriptors on a hold-out test set of materials.

- Critically, test the model's transferability by applying it to a different but related class of materials (e.g., applying a model trained on square-net TSMs to predict topological insulators in rocksalt structures) [4].

Diagram 2: ME-AI workflow for descriptor discovery.

Data Extraction and Challenges

A critical prerequisite for training effective foundation models in materials science is the extraction of high-quality data from diverse sources. A significant volume of materials information resides in scientific documents, reports, and patents [1]. Advanced data-extraction models must parse multiple modalities:

- Text: Using Named Entity Recognition (NER) to identify material names and properties [1].

- Images and Tables: Leveraging Vision Transformers and specialized algorithms (e.g., Plot2Spectra, DePlot) to extract molecular structures and numerical data from figures and charts [1].

- Multimodal Integration: Combining text and visual information to construct comprehensive datasets, such as extracting key patented molecules from Markush structures in patents [1].
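As a much simpler stand-in for a learned NER model, a rule-based extractor can pull property mentions from text. The regular expression below is a hypothetical sketch that only recognizes band-gap statements of one phrasing; trained NER models generalize far beyond such fixed patterns.

```python
import re

# Hypothetical pattern: "<formula> has|exhibits|shows a band gap of [about] <number> eV"
BAND_GAP = re.compile(
    r"\b(?P<material>(?:[A-Z][a-z]?\d*)+)\s+(?:has|exhibits|shows)\s+a\s+"
    r"band\s+gap\s+of\s+(?:about\s+)?(?P<value>\d+(?:\.\d+)?)\s*eV"
)


def extract_band_gaps(text: str) -> list[tuple[str, float]]:
    # Return (material, band gap in eV) pairs matched in the text
    return [(m["material"], float(m["value"])) for m in BAND_GAP.finditer(text)]


sentence = "GaN exhibits a band gap of 3.4 eV. In contrast, Si has a band gap of about 1.1 eV."
print(extract_band_gaps(sentence))  # [('GaN', 3.4), ('Si', 1.1)]
```

Note that "In" at the start of the second sentence looks like an element symbol but is rejected because it is not followed by the expected verb, a small example of why context matters in entity extraction.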

Challenges include licensing restrictions, dataset bias, and ensuring data quality given that minor variations can profoundly influence material properties (e.g., the "activity cliff" phenomenon) [1].

Application Notes: Foundation Models in Materials Science

The application of artificial intelligence in scientific discovery is undergoing a fundamental transformation, moving from narrowly focused, task-specific models to versatile foundation models trained on broad data that can be adapted to a wide range of downstream tasks [5] [6]. In materials science, this shift enables unprecedented acceleration in the discovery and development of new materials, from topological semimetals to advanced fuel cell catalysts [1] [4] [7].

Current Applications and Performance

Foundation models are being deployed across the materials discovery pipeline, demonstrating significant performance improvements over traditional methods. The table below summarizes key quantitative results from recent implementations.

Table 1: Performance Metrics of AI Systems in Materials Discovery

| AI System / Model | Primary Application | Dataset Size | Key Performance Metrics | Reference |
|---|---|---|---|---|
| ME-AI Framework | Predicting Topological Semimetals | 879 square-net compounds | Identified 5 emergent descriptors; model transferred to rocksalt structures | [4] |
| CRESt Platform | Fuel Cell Catalyst Discovery | 900+ chemistries, 3,500+ tests | 9.3x improvement in power density per dollar; record power density with 1/4 of the precious metals | [7] |
| Chemical Foundation Models | Property Prediction & Molecular Generation | Trained on ~10^9 molecules (ZINC, ChEMBL) | Predict properties from 2D representations (SMILES, SELFIES); generate novel molecular structures | [1] |

Key Research Reagent Solutions

The effective implementation of foundation models in materials research relies on a suite of computational and data "reagents." The following table details these essential components and their functions.

Table 2: Essential Research Reagents for AI-Driven Materials Science

| Research Reagent | Type | Primary Function | Examples |
|---|---|---|---|
| Broad Chemical Databases | Data | Pre-training and fine-tuning foundation models on known chemical space. | PubChem, ZINC, ChEMBL [1] |
| Structure Representations | Data Encoding | Representing molecular and crystalline structures for model input. | SMILES, SELFIES, Crystallographic descriptors [1] |
| Multi-Modal Data Extractors | Tool | Parsing and integrating information from diverse sources like text, tables, and images in scientific literature. | Named Entity Recognition (NER), Vision Transformers [1] |
| Literature-Based Knowledge Embeddings | Data | Creating numerical representations of scientific text to guide experimental design. | Encoded insights from scientific papers and patents [7] |
| Automated Robotic Platforms | Hardware | Executing high-throughput synthesis and testing suggested by AI models. | Liquid-handling robots, automated electrochemical workstations [7] |

Experimental Protocols

Protocol 1: ME-AI for Discovering Material Descriptors

This protocol outlines the workflow for the Materials Expert-Artificial Intelligence (ME-AI) framework, which translates expert intuition into quantitative, interpretable descriptors for targeted material properties [4].

Procedure

- Expert Curation of Primary Features (PFs):

- Select a set of 12-15 primary atomistic and structural features based on domain expertise. For square-net compounds, this included electron affinity, electronegativity, valence electron count, and key crystallographic distances (d_sq, d_nn) [4].

- Dataset Assembly and Expert Labeling:

- Curate a dataset of experimentally characterized materials (e.g., 879 compounds from the Inorganic Crystal Structure Database).

- Label materials based on target property (e.g., Topological Semimetal status) using a combination of experimental band structure data (56%) and expert chemical logic for related compounds (44%) [4].

- Model Training with Dirichlet-based Gaussian Process:

- Employ a Gaussian process model with a chemistry-aware kernel.

- Train the model to discover emergent descriptors composed of the primary features that predict the expert-labeled properties.

- Descriptor Validation and Transfer Testing:

- Validate discovered descriptors on held-out data from the same material family.

- Test the model's generalizability by applying it to a different but related structure family (e.g., applying a model trained on square-net compounds to rocksalt topological insulators) [4].

Visualization: ME-AI Workflow

Protocol 2: CRESt for Autonomous Materials Discovery

This protocol details the operation of the Copilot for Real-world Experimental Scientists (CRESt) platform, a multimodal, robotic system that integrates literature knowledge, human feedback, and high-throughput experimentation to discover and optimize functional materials [7].

Procedure

- Natural Language Tasking and Knowledge Embedding:

- Researchers converse with CRESt via a natural language interface to define objectives (e.g., "find a low-cost, high-activity fuel cell catalyst").

- The system searches scientific literature and databases, creating numerical representations ("knowledge embeddings") of potential material recipes [7].

- Dimensionality Reduction and Bayesian Optimization:

- Perform Principal Component Analysis (PCA) on the knowledge embedding space to define a reduced, efficient search space.

- Use Bayesian Optimization (BO) within this reduced space to propose the most promising experimental recipes [7].
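The two steps above can be sketched with NumPy: PCA via SVD on a matrix of recipe embeddings, then a Bayesian optimization loop that fits a GP to already-tested recipes and picks the next one with an upper-confidence-bound (UCB) acquisition. This is not CRESt's implementation; the random embeddings, the synthetic performance function, the RBF kernel, and the choice of UCB are all assumptions for illustration.

```python
import numpy as np


def pca(X, n_components):
    # Center the embeddings, then project onto the top principal directions via SVD
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


def rbf(A, B, length_scale=1.0):
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length_scale**2)


def gp_posterior(Z_obs, y_obs, Z_query, noise=1e-3):
    # GP regression (constant mean = average observation) with an RBF kernel
    K = rbf(Z_obs, Z_obs) + noise * np.eye(len(Z_obs))
    K_s = rbf(Z_query, Z_obs)
    y_mean = y_obs.mean()
    mean = y_mean + K_s @ np.linalg.solve(K, y_obs - y_mean)
    var = 1.0 - np.einsum("ij,ji->i", K_s, np.linalg.solve(K, K_s.T))
    return mean, np.sqrt(np.maximum(var, 1e-12))


def propose_next(Z, tested, y_tested, beta=2.0):
    # Upper-confidence-bound acquisition over all recipes in the reduced space
    mean, std = gp_posterior(Z[tested], y_tested, Z)
    ucb = mean + beta * std
    ucb[tested] = -np.inf  # never re-propose a recipe that was already run
    return int(np.argmax(ucb))


def measured_performance(z, optimum):
    # Synthetic stand-in for a robotic measurement, peaking at one hidden recipe
    return -np.sum((z - optimum) ** 2, axis=-1)


rng = np.random.default_rng(0)
embeddings = rng.normal(size=(200, 64))  # hypothetical knowledge embeddings of 200 recipes
Z = pca(embeddings, n_components=3)      # reduced search space
optimum = Z[17]
tested = [0, 1, 2, 3, 4]                 # initial experiments
for _ in range(10):                      # each iteration = one robotic experiment
    tested.append(propose_next(Z, tested, measured_performance(Z[tested], optimum)))
```

The `beta` parameter trades exploration (high uncertainty) against exploitation (high predicted performance); working in a few principal components keeps the GP cheap and the search space small.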

- Robotic High-Throughput Experimentation:

- Execute the proposed recipes using an integrated robotic system:

- Synthesis: Liquid-handling robot and carbothermal shock system.

- Characterization: Automated electron microscopy, X-ray diffraction.

- Testing: Automated electrochemical workstation [7].

- Multimodal Feedback and Active Learning:

- Feed results (textual data, images, performance metrics) and human feedback back into the large language model to augment the knowledge base.

- Use computer vision (cameras, visual language models) to monitor experiments, detect irreproducibility, and suggest corrections.

- Redefine the search space and repeat the BO loop for continuous optimization [7].

Visualization: CRESt System Workflow

The advent of foundation models represents a paradigm shift in computational materials science. These models, trained on broad data at scale, can be adapted to a wide range of downstream tasks, offering unprecedented capabilities for materials discovery and synthesis research [1]. At the heart of this revolution lies the Transformer architecture, whose unique components enable the sophisticated understanding and generation of sequential data.

Originally designed for natural language processing, the Transformer's attention mechanism has proven remarkably versatile, providing the backbone for applications spanning from molecular property prediction to synthesis planning [1] [8]. The architecture's core innovation—self-attention—allows models to weigh the importance of different parts of the input data when making predictions, whether analyzing crystal structures or generating novel molecular representations.

This article explores the three principal Transformer architectural variants—encoder-only, decoder-only, and encoder-decoder models—within the context of materials discovery. We detail their operational mechanisms, specific applications in materials science, and provide practical experimental protocols for implementing these architectures in research settings.

Architectural Fundamentals

Core Components of the Transformer

The original Transformer architecture, introduced by Vaswani et al., comprises two primary stacks: the encoder and the decoder. The encoder processes input sequences to build contextualized representations, while the decoder generates output sequences based on these representations [9] [10]. The self-attention mechanism forms the core of both components, enabling the model to dynamically focus on different parts of the sequence during processing.

Self-Attention Mechanism: This mechanism projects the input sequence into queries, keys, and values through learned linear transformations. The attention output is computed as:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V \]

where \( Q \), \( K \), and \( V \) are the matrices of query, key, and value vectors (one row per token), and \( d_k \) is the dimension of the key vectors [11]. This calculation allows each element in the sequence to attend to all other elements, capturing long-range dependencies essential for understanding complex material structures.

Multi-Head Attention: By running multiple self-attention processes in parallel, each with different learned parameters, the model can capture diverse relationships and nuances within the data [11]. The outputs from these attention heads are concatenated and linearly transformed to produce the final output.
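A minimal NumPy sketch of scaled dot-product attention and multi-head attention follows. The projection matrices are random here (in a trained model they are learned), and splitting a single projection into heads is a common implementation choice rather than something the text specifies.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(Q, K, V):
    # softmax(Q K^T / sqrt(d_k)) V; works on (T, d) or batched (heads, T, d) arrays
    d_k = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)
    return softmax(scores) @ V


def multi_head_attention(X, W_q, W_k, W_v, W_o, n_heads):
    # Project inputs, split the model dimension into heads, attend per head,
    # then concatenate the heads and apply the output projection
    T, d_model = X.shape
    d_head = d_model // n_heads

    def split(M):
        return M.reshape(T, n_heads, d_head).transpose(1, 0, 2)  # (heads, T, d_head)

    heads = attention(split(X @ W_q), split(X @ W_k), split(X @ W_v))
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)
    return concat @ W_o


rng = np.random.default_rng(0)
T, d_model, n_heads = 5, 16, 4  # e.g., 5 tokens of a SMILES string
X = rng.normal(size=(T, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(4))
out = multi_head_attention(X, W_q, W_k, W_v, W_o, n_heads)
print(out.shape)  # (5, 16)
```

Each head works in a `d_model / n_heads` subspace, so multi-head attention costs roughly the same as one full-width head while letting different heads specialize.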

Positional Encoding: Since Transformers process all tokens simultaneously without inherent sequence order, positional encodings are added to input embeddings to provide information about token positions. While original Transformers used sinusoidal functions of absolute positions, improved variants like Transformer-XL employ relative positional encodings that better handle long sequences [10].
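The sinusoidal encoding of the original Transformer can be written in a few lines of NumPy. Following Vaswani et al., even dimensions use the sine and odd dimensions the cosine of the position divided by a geometric sequence of wavelengths; this sketch assumes an even model dimension.

```python
import numpy as np


def sinusoidal_positional_encoding(n_positions, d_model):
    # PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)
    assert d_model % 2 == 0, "this sketch assumes an even model dimension"
    pos = np.arange(n_positions)[:, None]       # (n_positions, 1)
    two_i = np.arange(0, d_model, 2)[None, :]   # (1, d_model / 2)
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


pe = sinusoidal_positional_encoding(n_positions=50, d_model=8)
# The encoding is added (not concatenated) to token embeddings of the same width
token_embeddings = np.zeros((50, 8))
inputs = token_embeddings + pe
```

Because the encoding depends only on absolute position, it carries no notion of token distance directly, which is part of the motivation for the relative schemes mentioned above.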

Architectural Variants: A Comparative Analysis

Most Transformer models specialize into one of three architectural variants, each optimized for different classes of tasks in materials discovery workflows.

Table 1: Core Transformer Architectures and Their Applications in Materials Science

| Architecture | Primary Function | Key Models | Materials Science Applications |
|---|---|---|---|
| Encoder-Only | Understanding & analyzing input data | BERT, RoBERTa [11] | Property prediction from structure [1], named entity recognition from literature [1], material classification |
| Decoder-Only | Generating new sequences | GPT series, LLaMA [12] [13] | De novo molecular design [1], synthesis planning, generative material discovery |
| Encoder-Decoder | Transforming input sequences to output sequences | T5, BART [10] [13] | Cross-modal translation (e.g., structure to description), material optimization, reaction prediction |

The selection of an appropriate architecture depends fundamentally on the task requirements. Encoder-only models excel at comprehension tasks where full bidirectional context is essential. Decoder-only models specialize in creative generation tasks where sequential prediction is paramount. Encoder-decoder models provide the flexibility to transform one sequence type into another, bridging different data modalities common in materials research [13].

Encoder-Only Architectures for Material Analysis

Operational Principles

Encoder-only models employ bidirectional self-attention, meaning each token in the input sequence can attend to all other tokens, enabling comprehensive context understanding [11] [13]. These models are pre-trained using denoising objectives, where random tokens are masked and the model must reconstruct the original input. This training approach forces the model to develop deep, bidirectional representations of the input data [10].

During pre-training, encoder models maximize the log-likelihood of reconstructing masked tokens given their surrounding context:

\[ \sum_{t=1}^{T} m_t \log p(x_t \mid x_{-m}) \]

where \( m_t \) is 1 if the token at position \( t \) is masked and 0 otherwise, and \( x_{-m} \) represents the corrupted text sequence [10]. This objective enables the model to develop robust contextual understanding, which can be transferred to various downstream tasks in materials science with minimal fine-tuning.
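The masked-token objective can be evaluated numerically as below. The logits and token ids are random stand-ins for an encoder's outputs on a corrupted sequence and for the original tokens; only positions with \( m_t = 1 \) contribute to the sum.

```python
import numpy as np


def log_softmax(z):
    # Numerically stable log-softmax over the vocabulary axis
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def masked_lm_objective(logits, original_tokens, mask):
    # logits: (T, V) model scores computed from the corrupted sequence x_{-m}
    # original_tokens: (T,) ids of the uncorrupted tokens x_t
    # mask: (T,) with m_t = 1 at masked positions and 0 elsewhere
    log_p = log_softmax(logits)[np.arange(len(original_tokens)), original_tokens]
    return np.sum(mask * log_p)  # sum_t m_t log p(x_t | x_{-m})


rng = np.random.default_rng(0)
T, V = 6, 10                      # toy sequence length and vocabulary size
logits = rng.normal(size=(T, V))  # stand-in for encoder outputs
x = rng.integers(0, V, size=T)    # stand-in for the original token ids
m = np.array([0, 1, 0, 0, 1, 0])  # two positions were masked
objective = masked_lm_objective(logits, x, m)
loss = -objective / m.sum()       # training minimizes the mean negative log-likelihood
```

With uniform logits every masked position contributes \( -\log V \), a useful sanity check when wiring up a training loop.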

Applications in Materials Discovery

Encoder-only architectures have demonstrated significant utility in multiple domains of materials research:

Property Prediction: Encoder models pre-trained on large molecular databases can be fine-tuned to predict material properties from structural representations. For instance, models based on the BERT architecture have been successfully applied to predict properties from 2D molecular representations like SMILES or SELFIES, although such 2D representations omit 3D conformational information that can be critical for some properties [1].

Scientific Literature Mining: Models like MatBERT, which are pre-trained on materials science literature, enable efficient extraction of material-property relationships from textual data [8]. These models can perform named entity recognition to identify materials, properties, and synthesis conditions mentioned in research papers, facilitating automated knowledge base construction.

Multimodal Material Representation: The MultiMat framework demonstrates how encoder-only architectures can align multiple material modalities (crystal structure, density of states, charge density, and textual descriptions) into a shared latent space [8]. This approach produces highly effective material representations that transfer well to various downstream prediction tasks.

Experimental Protocol: Fine-Tuning Encoder Models for Property Prediction

Research Reagents & Computational Tools:

- Pre-trained Model Weights: Base encoder model (e.g., MatBERT, SciBERT) [8]

- Domain-Specific Dataset: Labeled material property data (e.g., Materials Project, PubChem) [1]

- Deep Learning Framework: PyTorch or TensorFlow with transformer libraries

- Computational Resources: GPU clusters for efficient fine-tuning

Procedure:

- Data Preparation: Curate a dataset of material structures and associated target properties. Represent structures using appropriate descriptors (SMILES, SELFIES, graph representations, or crystal fingerprints).

- Model Initialization: Load pre-trained weights from a base encoder model trained on broad scientific corpora.

- Architecture Adaptation: Replace the pre-training head with a task-specific output layer matching the prediction task (classification or regression).

- Fine-tuning: Train the adapted model on the target dataset using gradual unfreezing strategies to prevent catastrophic forgetting while adapting to the specific domain.

- Validation: Evaluate model performance on held-out test sets using domain-relevant metrics (MAE, RMSE, ROC-AUC).

Troubleshooting Tips:

- For small datasets, employ layer-wise freezing to prevent overfitting

- Use data augmentation techniques specific to molecular representations (e.g., SMILES enumeration)

- Implement early stopping based on validation performance
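The gradual-unfreezing and early-stopping logic from the procedure and tips above is framework-independent, so it is sketched here in plain Python. The layer names, the one-layer-per-epoch schedule, and the patience value are illustrative assumptions; in PyTorch, the returned set would typically be applied by toggling `requires_grad` on the corresponding parameters.

```python
def trainable_layers(layers, epoch, head="head"):
    # Gradual unfreezing: train only the new head at epoch 0, then unfreeze one
    # more encoder layer per epoch, starting from the layer closest to the output
    n_unfrozen = min(epoch, len(layers))
    return {head, *layers[len(layers) - n_unfrozen:]}


class EarlyStopping:
    # Stop when validation loss has not improved for `patience` consecutive epochs
    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience  # True means "stop training"


encoder_layers = [f"encoder.layer.{i}" for i in range(4)]  # hypothetical layer names
stopper = EarlyStopping(patience=2)
val_losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.60]  # made-up validation curve
for epoch, val_loss in enumerate(val_losses):
    active = trainable_layers(encoder_layers, epoch)
    print(epoch, sorted(active))
    if stopper.step(val_loss):
        print(f"early stop at epoch {epoch}; best val loss {stopper.best}")
        break
```

Unfreezing from the top down keeps the lower, more general layers fixed longest, which is the intended guard against catastrophic forgetting on small datasets.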

Decoder-Only Architectures for Generative Materials Design

Operational Principles

Decoder-only models utilize masked self-attention, where each token can only attend to previous tokens in the sequence, making them inherently autoregressive [12] [10]. This architectural constraint makes them ideally suited for sequential generation tasks, as they naturally model the conditional probability distribution of sequences.

These models are typically pre-trained using a next-token prediction objective, where they learn to maximize the likelihood of each token given all previous tokens:

\[ \sum_{t=1}^{T} \log p(x_t \mid x_{<t}) \]

where \( x_{<t} \) denotes all tokens preceding position \( t \).
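The causal mask and the next-token objective described above can both be checked in a short NumPy sketch. The scores and logits are random stand-ins for a decoder's internals; in a real model, the logits at position \( t \) are computed only from tokens up to \( t \) (enforced by the mask) and score the following token.

```python
import numpy as np


def causal_mask(T):
    # mask[i, j] is True when position i may attend to position j, i.e. j <= i
    return np.tril(np.ones((T, T), dtype=bool))


def log_softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))


def next_token_log_likelihood(logits, tokens):
    # logits[t] is computed from tokens[0..t] and scores the next token tokens[t + 1],
    # so this sums log p(x_t | x_{<t}) over every token that has a prefix
    # (in practice a start token supplies the prefix for the first real token).
    log_p = log_softmax(logits[:-1])
    return log_p[np.arange(len(tokens) - 1), tokens[1:]].sum()


rng = np.random.default_rng(0)
T, V = 5, 8
# Masked self-attention weights: future positions get -inf scores, hence zero weight
scores = np.where(causal_mask(T), rng.normal(size=(T, T)), -np.inf)
weights = np.exp(log_softmax(scores))
tokens = rng.integers(0, V, size=T)  # toy token ids
logits = rng.normal(size=(T, V))     # stand-in for decoder outputs
ll = next_token_log_likelihood(logits, tokens)
```

Because the mask makes every prefix computation independent of later tokens, all \( T \) next-token predictions can be trained in a single parallel forward pass.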

Modern large language models (LLMs) predominantly use decoder-only architectures and are typically trained in two phases: (1) pre-training on vast amounts of unlabeled text data to develop general language understanding, followed by (2) instruction tuning to align model outputs with user intentions [13]. This approach has proven remarkably effective for scientific applications, including materials discovery.

Applications in Materials Discovery

De Novo Molecular Design: Decoder models can generate novel molecular structures by sequentially producing string-based representations like SMILES or SELFIES [1]. When conditioned on desired property constraints, these models can explore chemical space to identify promising candidates for synthesis.

Synthesis Planning: Models can generate plausible reaction pathways or synthesis procedures for target compounds by drawing upon knowledge embedded in chemical literature and patent databases [1]. This application accelerates experimental planning and can suggest novel synthetic routes.

Multimodal Generative Modeling: Models such as Google's PaLM-E show that a single decoder-only network can unify multiple tasks and process diverse inputs, including text, images, and continuous sensor states [10]. Extending this approach to structural data points toward models that generate material recommendations and synthesis procedures from mixed inputs.

Experimental Protocol: Conditional Generation of Materials

Research Reagents & Computational Tools:

- Pre-trained Decoder Model: Foundation model (e.g., GPT variants, specialized molecular generators)

- Conditioning Data: Property datasets with structural information

- Sampling Methods: Temperature scaling, nucleus sampling, beam search

- Validation Tools: Molecular dynamics simulations, property prediction models

Procedure:

- Model Selection: Choose a decoder model pre-trained on relevant chemical or materials data, or adapt a general-purpose LLM through continued pre-training.

- Conditioning Strategy: Implement property-controlled generation through either:

- Guidance Techniques: Classifier-guided or classifier-free guidance (approaches originally developed for diffusion models)

- Prompt Engineering: Structured prompts that specify desired properties and constraints

- Generation: Sample from the model using appropriate decoding strategies:

- Greedy Search: For deterministic outputs

- Temperature Sampling: For diverse exploration of chemical space

- Beam Search: For high-likelihood outputs, at the cost of reduced diversity

- Validation: Filter generated structures using:

- Chemical Validity Checks: Ensure syntactically valid representations

- Property Prediction: Screen candidates using surrogate models

- Stability Assessment: Evaluate synthetic accessibility and stability
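The decoding strategies listed above differ only in how the next token is picked from the model's output distribution. The NumPy sketch below implements greedy, temperature, and nucleus (top-p) selection for a single step; the logits and the four-token vocabulary are made up, a real generator would repeat this step until an end token appears, and beam search is omitted for brevity.

```python
import numpy as np


def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def next_token(logits, strategy="greedy", temperature=1.0, top_p=0.9, rng=None):
    if strategy == "greedy":
        return int(np.argmax(logits))  # deterministic
    if rng is None:
        rng = np.random.default_rng()
    # Temperature < 1 sharpens the distribution; > 1 flattens it for more exploration
    probs = softmax(np.asarray(logits, dtype=float) / temperature)
    if strategy == "nucleus":
        order = np.argsort(probs)[::-1]
        cumulative = np.cumsum(probs[order])
        # Keep the smallest set of top tokens whose total probability reaches top_p
        keep = order[: np.searchsorted(cumulative, top_p) + 1]
        filtered = np.zeros_like(probs)
        filtered[keep] = probs[keep]
        probs = filtered / filtered.sum()
    return int(rng.choice(len(probs), p=probs))


vocab = ["C", "c", "O", "N"]  # toy SMILES token vocabulary
logits = np.array([2.0, 1.0, 0.5, -1.0])
rng = np.random.default_rng(0)
print(vocab[next_token(logits)])                                 # greedy
print(vocab[next_token(logits, "temperature", 1.5, rng=rng)])    # more exploratory
print(vocab[next_token(logits, "nucleus", top_p=0.8, rng=rng)])  # low-probability tail removed
```

Nucleus sampling adapts the candidate set to the model's confidence: when one token dominates, few tokens survive; when the distribution is flat, more do.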

Optimization Tips:

- Balance exploration and exploitation through temperature scheduling

- Implement iterative refinement cycles where generated candidates inform subsequent generation rounds

- Use ensemble methods to improve generation reliability

Encoder-Decoder Architectures for Multimodal Materials Research

Operational Principles

Encoder-decoder models (also called sequence-to-sequence models) utilize both components of the Transformer architecture [13]. The encoder processes the input sequence with full bidirectional attention, building comprehensive representations. The decoder then generates the output sequence autoregressively while attending to both previous decoder states and the full encoder output.

This architecture is particularly suited for transformation tasks where the input and output are different in structure or modality. The pretraining of these models often involves reconstruction objectives where the input is corrupted in some way (e.g., by masking random spans of text), and the model must generate the original uncorrupted sequence [13].

The T5 model exemplifies this approach, training with a span corruption objective in which random contiguous spans of tokens are each replaced with a single sentinel token, and the model must predict the tokens of every masked span [10] [13]. This approach teaches the model both comprehension (in the encoder) and generation (in the decoder) capabilities.
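The span-corruption format can be illustrated in plain Python. In T5, each dropped span is replaced by a distinct sentinel token (`<extra_id_0>`, `<extra_id_1>`, ...), and the target lists each sentinel followed by the tokens it replaced, ending with one more sentinel. The span positions are fixed here for reproducibility (T5 samples them randomly), and whitespace tokenization of a synthesis sentence is a simplifying assumption.

```python
def span_corrupt(tokens, spans):
    # spans: sorted, non-overlapping (start, end) index pairs to drop (end exclusive)
    inputs, targets = [], []
    prev = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs += tokens[prev:start] + [sentinel]   # span replaced by one sentinel
        targets += [sentinel] + tokens[start:end]   # sentinel followed by the dropped tokens
        prev = end
    inputs += tokens[prev:]
    targets.append(f"<extra_id_{len(spans)}>")      # final sentinel closes the target
    return inputs, targets


tokens = "the precursor was annealed at 900 C for 12 h in argon".split()
inp, tgt = span_corrupt(tokens, [(3, 4), (5, 7)])
print(" ".join(inp))  # the precursor was <extra_id_0> at <extra_id_1> for 12 h in argon
print(" ".join(tgt))  # <extra_id_0> annealed <extra_id_1> 900 C <extra_id_2>
```

The targets are much shorter than the input, which keeps decoder-side training cheap while the encoder still sees the whole (corrupted) sequence.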

Applications in Materials Discovery

Cross-Modal Translation: Encoder-decoder models can translate between different material representations, such as converting crystal structures to textual descriptions or extracting structured data from experimental reports [8]. In a related approach, the MultiMat framework aligns encoders for different modalities (crystal structure, density of states, charge density, text) into a shared latent space, so that a material represented in one modality can be matched to its representations in the others.

Generative Question Answering: These models can answer complex materials science questions by comprehending the query and context (encoder) then generating detailed explanations (decoder). This capability facilitates knowledge extraction from the vast materials science literature.

Reaction Prediction: Encoder-decoder architectures can predict reaction outcomes by encoding reactant structures and conditions, then decoding to product representations, bridging the comprehension-generation divide essential for synthesis planning.

Experimental Protocol: Multimodal Alignment for Material Representation

Research Reagents & Computational Tools:

- Modality-Specific Encoders: Graph neural networks for structures, transformers for sequences, CNNs for spectral data

- Alignment Framework: Contrastive learning infrastructure

- Multimodal Dataset: Paired data across modalities (e.g., structures with properties, spectra with descriptions)

- Evaluation Benchmarks: Downstream task datasets for transfer learning assessment

Procedure:

- Encoder Selection: Choose an appropriate architecture for each data modality, for example graph neural networks for structures, transformers for sequences and text, and CNNs for spectral data.

- Alignment Pre-training: Train the modality encoders with contrastive learning so that their representations align in a shared latent space:

  - Positive Pairs: Different modalities describing the same material

  - Negative Pairs: Randomly sampled materials

  - Objective: Maximize similarity for positive pairs and minimize it for negative pairs

- Cross-Modal Fine-tuning: Adapt the aligned model for specific translation tasks:

  - Structure → Property: Using the aligned crystal structure encoder

  - Text → Candidate Materials: Using the text encoder to query material space

- Validation: Evaluate both alignment quality and downstream task performance

Optimization Strategies:

- Implement hard negative mining to improve contrastive learning

- Use progressive alignment starting with most correlated modalities

- Employ temperature scaling in contrastive loss to control representation concentration
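The contrastive objective and two of the optimization strategies above (temperature scaling and hard negative mining) can be made concrete with a small, dependency-free sketch. It assumes each batch holds one embedding per material from two modalities, with row i of both lists describing the same material, and uses the other materials in the batch as negatives. This is an InfoNCE-style loss computed in one direction only; the function names are illustrative and are not MultiMat's API.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE loss over a batch of paired embeddings.

    anchors[i] and positives[i] embed the same material in two modalities;
    positives[j] for j != i act as in-batch negatives. A lower temperature
    sharpens the softmax over similarities.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with the matching pair as target
    return total / n


def hardest_negative(i, anchors, positives):
    """Index of the non-matching item most similar to anchors[i] (a hard negative)."""
    return max(
        (j for j in range(len(positives)) if j != i),
        key=lambda j: cosine(anchors[i], positives[j]),
    )


structure_emb = [[1.0, 0.0], [0.0, 1.0]]  # e.g. from a crystal-graph encoder
text_emb = [[0.9, 0.1], [0.1, 0.9]]       # e.g. from a text encoder
print(info_nce(structure_emb, text_emb))  # small loss: the pairs are already aligned
```

Symmetric variants average this loss over both directions (structure to text and text to structure); hard negatives found with `hardest_negative` can be placed in the same batch to make the contrastive task harder.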

Table 2: Experimental Applications of Transformer Architectures in Materials Discovery

| Application Domain | Encoder-Only | Decoder-Only | Encoder-Decoder |
|---|---|---|---|
| Property Prediction | High accuracy for classification and regression tasks [1] | Limited applicability | Moderate performance with additional context |
| Molecular Generation | Not suitable | State-of-the-art for de novo design [1] | Limited use |
| Synthesis Planning | Information extraction from literature [1] | Procedure generation and optimization [1] | Reaction prediction and optimization |
| Multimodal Learning | Individual modality encoding [8] | Limited research | Cross-modal translation and alignment [8] |
| Literature Mining | Named entity recognition and relation extraction [1] | Limited use | Question answering and summarization |

Implementation Considerations for Materials Research

Computational Requirements and Optimization

Implementing Transformer architectures for materials discovery presents significant computational challenges. Standard self-attention mechanisms scale quadratically with sequence length (O(n²)), making them prohibitively expensive for long sequences such as high-resolution spectral data or large molecular graphs [13].

Efficient Attention Mechanisms:

- Sparse Attention: Models like Longformer use local attention windows combined with global attention on preselected tokens to maintain linear scaling with sequence length [13]

- LSH Attention: Reformer employs locality-sensitive hashing to approximate attention, clustering similar queries together for efficient processing [13]

- Axial Positional Encodings: Factorizing positional encodings into smaller matrices reduces memory requirements for long sequences [13]
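To see why local windows give linear scaling, the sketch below builds the attention pattern of a Longformer-style layer as an explicit boolean mask and counts the query-key pairs that get scored. It is purely illustrative: it materializes the full n × n mask, which efficient implementations specifically avoid, and the window size and choice of global tokens are arbitrary assumptions.

```python
def longformer_style_mask(n, window, global_idx=()):
    """Boolean n x n mask: mask[i][j] is True if query i may attend to key j.

    Every token attends to neighbours within +/- window positions; tokens listed
    in global_idx attend to, and are attended by, every position.
    """
    g = set(global_idx)
    return [[abs(i - j) <= window or i in g or j in g for j in range(n)] for i in range(n)]


def attended_pairs(mask):
    """Number of query-key pairs that actually receive an attention score."""
    return sum(row.count(True) for row in mask)


for n in (100, 200, 400):
    print(n, attended_pairs(longformer_style_mask(n, window=2)), n * n)
# local pairs grow like n * (2 * window + 1); full attention grows like n * n
```

Each global token adds roughly 2n pairs, so a handful of them (for example a classification token) keeps the total linear in sequence length.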

Domain-Specific Adaptations: Specialized Vision Transformer variants, such as MAG-Vision for magnetic material modeling and Swin3D for 3D scene understanding, show how the core Transformer architecture can be adapted to scientific and three-dimensional data [14] [15]. Such adaptations typically add domain-specific inductive biases while retaining the expressive power of self-attention.

Data Preparation and Representation

The representation of materials data significantly impacts model performance. Encoder-only models for molecular property prediction commonly use string representations such as SMILES or SELFIES, which encode the 2D molecular graph but omit critical 3D conformational information [1]. For inorganic solids, graph-based representations or primitive cell features that capture 3D structure typically yield better performance [1].

Emerging Best Practices:

- Multimodal Training: Aligning multiple representations (structural, spectral, textual) in shared latent spaces improves model robustness and performance [8]

- Data Augmentation: Techniques like SMILES enumeration for molecular data or symmetry operations for crystal structures increase data efficiency

- Transfer Learning: Leveraging models pre-trained on large scientific corpora significantly improves performance on specialized materials tasks with limited data [1]
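For crystals, the simplest label-preserving augmentation is a rigid shift of the origin, since every choice of origin describes the same periodic structure. The sketch below is a minimal example under that assumption, using plain Python and illustrative function names; it also shuffles atom order, which carries no physical meaning. Richer symmetry operations (rotations consistent with the lattice) and SMILES enumeration for molecules (for example RDKit's `Chem.MolToSmiles` with `doRandom=True`) follow the same idea but need a structure or cheminformatics toolkit.

```python
import random


def translate_fractional(sites, shift):
    """Apply one rigid origin shift to all fractional coordinates, wrapping into [0, 1).

    Shifting the origin describes the same periodic crystal, so property labels
    stay valid for the augmented copy.
    """
    return [(el, tuple((x + s) % 1.0 for x, s in zip(xyz, shift))) for el, xyz in sites]


def augment(sites, n_copies, seed=0):
    """Make n_copies randomly shifted and re-ordered versions of one structure."""
    rng = random.Random(seed)
    copies = []
    for _ in range(n_copies):
        shift = tuple(rng.random() for _ in range(3))
        shifted = translate_fractional(sites, shift)
        rng.shuffle(shifted)  # atom ordering carries no physical meaning either
        copies.append(shifted)
    return copies


# Two-atom primitive cell of rock-salt NaCl in fractional coordinates (illustrative).
nacl = [("Na", (0.0, 0.0, 0.0)), ("Cl", (0.5, 0.5, 0.5))]
print(translate_fractional(nacl, (0.25, 0.25, 0.75)))
# [('Na', (0.25, 0.25, 0.75)), ('Cl', (0.75, 0.75, 0.25))]
```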

Transformer architectures have emerged as foundational components in the digital infrastructure for materials discovery, each variant offering distinct capabilities tailored to different aspects of the research pipeline. Encoder-only models provide powerful comprehension for property prediction and literature mining, decoder-only architectures enable generative exploration of chemical space, and encoder-decoder models facilitate multimodal translation between different material representations.

As these architectures continue to evolve, we anticipate increasing specialization for scientific domains, with models incorporating deeper physical principles and domain knowledge. The integration of Transformer architectures with high-throughput experimentation and simulation represents a promising pathway toward autonomous materials discovery systems, accelerating the design of novel materials addressing critical energy, healthcare, and sustainability challenges.

The advent of foundation models is catalyzing a paradigm shift in materials discovery, moving away from task-specific algorithms towards general-purpose, scalable artificial intelligence systems [1] [16]. These models rely critically on the data representations upon which they are built. The journey of computational materials science has been marked by an evolution in these representations, from early hand-crafted features to the current era of data-driven representation learning [1]. This progression has given rise to three principal data modalities that form the backbone of modern materials informatics: 1D SMILES strings, 2D molecular graphs, and 3D crystalline structures.

Each modality offers distinct advantages and captures different aspects of molecular and material information, making them suitable for varied applications across the materials discovery pipeline. The choice of representation significantly influences model performance, with each modality presenting unique challenges and opportunities for foundation model development. This article examines these key data modalities within the context of foundation models for materials discovery, providing structured comparisons, detailed protocols, and practical resources for researchers navigating this rapidly evolving landscape.

Comparative Analysis of Data Modalities

Table 1: Characteristics of Key Molecular and Material Representations

| Representation | Data Structure | Key Features Captured | Primary Applications | Notable Models/Methods |
|---|---|---|---|---|
| SMILES (1D) | Linear string | Atomic sequence, branching, cyclic structures, functional groups | Property prediction, molecular generation, pre-training | MLM-FG [17], MoLFormer [17] |
| 2D Graph | Node-edge graph | Atom connectivity, molecular topology, bond types | Virtual screening, QSAR, classification tasks | GNNs, MolCLR, GROVER [17] |
| 3D Structure | Atomic coordinates | Molecular conformation, shape, volume, surface properties | Ligand-based virtual screening, synthesizability prediction | ROCS [18], USR [18], CSLLM [19] |

Table 2: Performance Comparison Across Modalities on Benchmark Tasks

| Task Type | Dataset/Benchmark | Best Performing Model | Key Metric & Score | Representation Used |
|---|---|---|---|---|
| Synthesizability Prediction | ICSD + Theoretical Structures [19] | CSLLM (Synthesizability LLM) | Accuracy: 98.6% [19] | 3D Crystal Structure |
| Molecular Property Prediction | MoleculeNet (BBBP, ClinTox, Tox21, HIV, MUV) [17] | MLM-FG | Outperformed baselines in 5/7 classification tasks [17] | SMILES (1D) |
| Virtual Screening | DUD-E and other benchmark datasets [18] | ROCS | Volume Tanimoto Coefficient [18] | 3D Molecular Shape |
| Precursor Prediction | Binary/Ternary Compounds [19] | CSLLM (Precursor LLM) | Success Rate: 80.2% [19] | 3D Crystal Structure |

SMILES Strings: Protocols and Applications

Experimental Protocol: MLM-FG Pre-training with Functional Group Masking

Purpose: To enhance molecular representation learning by incorporating structural awareness through targeted masking of chemically significant functional groups in SMILES strings [17].

Workflow:

- Input Processing: Receive SMILES string (e.g., "O=C(C)Oc1ccccc1C(=O)O" for aspirin).

- Functional Group Parsing: Identify and tag subsequences corresponding to known functional groups using a chemical knowledge base [17] [20].

- Random Masking: Randomly select and mask a proportion of the identified functional group subsequences.

- Model Training: Train transformer-based model to predict masked functional groups using contextual information from the unmasked portions of the SMILES string.

- Fine-tuning: Adapt the pre-trained model to downstream tasks using labeled datasets.
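The functional-group masking step can be approximated at the string level. The sketch below simplifies the described workflow and is not the MLM-FG implementation: the published method identifies functional groups with a chemical knowledge base, whereas here a hand-written, hypothetical dictionary of token patterns (`FG_PATTERNS`) stands in for that parser, and the SMILES tokenizer regex is a common convention rather than MLM-FG's own.

```python
import random
import re

# Common regex for splitting SMILES into atom, bond and ring-closure tokens.
SMILES_TOKEN_RE = re.compile(
    r"(\[[^\]]+\]|Br|Cl|Si|@@|%\d{2}|[BCNOPSFIbcnops]|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|\d)"
)

# Hypothetical functional-group patterns expressed as token sequences.
FG_PATTERNS = {
    "carboxylic_acid": ["C", "(", "=", "O", ")", "O"],
    "nitro": ["[N+]", "(", "=", "O", ")", "[O-]"],
}


def tokenize(smiles):
    tokens = SMILES_TOKEN_RE.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"could not tokenize {smiles!r}")
    return tokens


def mask_functional_groups(smiles, patterns, mask_prob=0.5, mask_token="[MASK]", rng=None):
    """Mask whole functional-group spans; return (masked_tokens, targets).

    targets holds (start_index, original_tokens) for every masked span, i.e. what
    the model is trained to recover from the unmasked context.
    """
    rng = rng or random.Random()
    tokens = tokenize(smiles)
    masked, targets, used = list(tokens), [], set()
    for pattern in patterns.values():
        n = len(pattern)
        for start in range(len(tokens) - n + 1):
            span = range(start, start + n)
            if tokens[start:start + n] != pattern or used.intersection(span):
                continue  # no match, or overlaps a span that is already masked
            if rng.random() < mask_prob:
                targets.append((start, tokens[start:start + n]))
                for j in span:
                    masked[j] = mask_token
                used.update(span)
    return masked, sorted(targets)


aspirin = "O=C(C)Oc1ccccc1C(=O)O"
masked, targets = mask_functional_groups(aspirin, FG_PATTERNS, mask_prob=1.0)
print(targets)  # [(15, ['C', '(', '=', 'O', ')', 'O'])]
```

Masking the whole group at once, rather than scattered single tokens, is what forces the model to infer a chemically meaningful unit from its surroundings.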

Key Advantages: Effectively infers structural information without requiring explicit 2D or 3D structural data; demonstrates robust performance across diverse molecular property prediction tasks [17].

2D Molecular Graphs: Methods and Implementation

Application Notes for Foundation Model Training

2D molecular graphs represent molecules as nodes (atoms) and edges (bonds), explicitly capturing molecular topology and connectivity information. This representation has proven particularly valuable for graph neural networks in materials discovery applications.

Key Implementation Considerations:

- Node Features: Atom type, hybridization, formal charge, aromaticity

- Edge Features: Bond type, conjugation, spatial relationship

- Message Passing: Neighborhood aggregation mechanisms that enable information flow across molecular structure
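The message-passing idea in the last bullet can be illustrated with a single update step. This sketch uses plain Python lists, sum aggregation, and one-hot atom-type node features; it omits the bond (edge) features listed above, and the weight matrices are placeholders rather than learned parameters, so it shows the data flow of a GNN layer rather than a trained model.

```python
def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def message_passing_step(h, neighbors, W_self, W_neigh):
    """One round of sum-aggregation message passing.

    h[v] is node v's feature vector and neighbors[v] lists bonded atoms.
    Update: h_v' = ReLU(W_self h_v + W_neigh * sum_{u in N(v)} h_u)
    """
    new_h = []
    for v, hv in enumerate(h):
        message = [0.0] * len(hv)
        for u in neighbors[v]:  # aggregate features of bonded neighbours
            message = [m + x for m, x in zip(message, h[u])]
        pre = [a + b for a, b in zip(matvec(W_self, hv), matvec(W_neigh, message))]
        new_h.append([max(0.0, x) for x in pre])  # ReLU
    return new_h


# Heavy atoms of ethanol (C-C-O); features are one-hot [is_C, is_O].
h0 = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
neighbors = [[1], [0, 2], [1]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(message_passing_step(h0, neighbors, identity, identity))
# [[2.0, 0.0], [2.0, 1.0], [1.0, 1.0]]
```

Stacking k such steps lets information travel k bonds: after one step the terminal carbon has no oxygen signal, and after two steps it does.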

Although 2D graphs make molecular topology explicit, whereas SMILES encodes it only implicitly in a linear string, recent evidence suggests that well-designed SMILES-based models can outperform graph-based approaches on certain benchmark tasks, underscoring the continued importance of representation learning research across modalities [17].

3D Structural Representations: Advanced Applications

Experimental Protocol: 3D Synthesizability Prediction with CSLLM

Purpose: To accurately predict the synthesizability of 3D crystal structures and identify appropriate synthetic methods and precursors using large language models [19].

Workflow:

- Data Curation: Assemble a balanced set of experimentally reported crystal structures (e.g., from ICSD) and theoretical structures [19]

- Structure Representation: Convert crystal structures to "material string" text format containing essential lattice, composition, atomic coordinate, and symmetry information [19]

- Model Architecture: Employ three specialized LLMs within the Crystal Synthesis LLM (CSLLM) framework:

  - Synthesizability LLM: Binary classification of synthesizability

  - Method LLM: Classification of synthetic method (solid-state/solution)

  - Precursor LLM: Identification of appropriate precursors

- Training: Fine-tune the LLMs on a balanced dataset of 150,120 structures

- Validation: Evaluate generalizability on complex structures with large unit cells

Performance Metrics: Synthesizability LLM achieved 98.6% accuracy, significantly outperforming traditional thermodynamic (74.1%) and kinetic (82.2%) stability methods [19].
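The "material string" idea, serializing a crystal into compact text that an LLM can read, can be sketched as follows. The field order, separators, and numeric precision are assumptions chosen for illustration; they are not the exact CSLLM format defined in the original work [19]. Because only symmetry-distinct sites are listed, the full cell (and hence the composition) follows from applying the space-group operations.

```python
from dataclasses import dataclass


@dataclass
class Crystal:
    space_group: int  # International Tables space-group number
    lattice: tuple    # (a, b, c) in angstroms, then (alpha, beta, gamma) in degrees
    sites: list       # [(element, (x, y, z)), ...] in fractional coordinates


def to_material_string(crystal: Crystal) -> str:
    """Serialize symmetry, lattice and atomic sites into one line of text."""
    lengths = " ".join(f"{v:.3f}" for v in crystal.lattice[:3])
    angles = " ".join(f"{v:.2f}" for v in crystal.lattice[3:])
    sites = " ; ".join(
        el + " " + " ".join(f"{c:.4f}" for c in xyz) for el, xyz in crystal.sites
    )
    return f"SG {crystal.space_group} | {lengths} {angles} | {sites}"


# Rock-salt NaCl, conventional cubic cell, symmetry-distinct sites only (illustrative).
nacl = Crystal(225, (5.64, 5.64, 5.64, 90, 90, 90), [("Na", (0, 0, 0)), ("Cl", (0.5, 0.5, 0.5))])
print(to_material_string(nacl))
# SG 225 | 5.640 5.640 5.640 90.00 90.00 90.00 | Na 0.0000 0.0000 0.0000 ; Cl 0.5000 0.5000 0.5000
```

A compact, fixed-precision line like this is far shorter than a full CIF file, which matters when every structure must fit inside an LLM's context window during fine-tuning.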

Experimental Protocol: 3D Shape-Based Virtual Screening with ROCS

Purpose: To identify biologically active compounds through 3D shape similarity screening using volume overlap calculations [18].

Workflow:

- Conformer Generation: Generate multiple low-energy 3D conformations for each database compound

- Molecular Representation: Represent molecular volume using Gaussian functions for analytical computation [18]

- Volume Overlap Optimization: Superimpose query and database compounds to maximize volume overlap using SIMPLEX algorithm [18]

- Similarity Scoring: Calculate the Tanimoto coefficient from the overlapped volume, $\text{Tanimoto} = V_{q,t} / (V_q + V_t - V_{q,t})$, where $V_q$ and $V_t$ are the query and template volumes and $V_{q,t}$ is their overlap volume [18]

- Chemical Similarity: Optionally compute chemical similarity using atom typing (H-bond donors/acceptors, charged groups, hydrophobic regions)

Advantages: Capable of identifying active compounds with 2D structural dissimilarity to query; particularly valuable when target receptor structure is unavailable [18].
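The volume-overlap scoring step can be sketched with atom-centred Gaussians. This is a simplified illustration under stated assumptions, not OpenEye's implementation: each atom is one spherical Gaussian with amplitude p = 2√2 and an exponent chosen so that an isolated atom's self-overlap equals its hard-sphere volume, only pairwise (first-order) overlaps are summed, and the molecules are compared in a fixed pose, so the SIMPLEX alignment search is omitted.

```python
import math

P = 2.0 * math.sqrt(2.0)  # Gaussian amplitude (assumed; implementations vary)


def alpha(radius):
    """Exponent that makes the Gaussian's self-overlap equal (4/3)*pi*radius**3."""
    return math.pi * (3.0 * P / (4.0 * math.pi * radius ** 3)) ** (2.0 / 3.0)


def pair_overlap(a1, c1, a2, c2):
    """Analytic integral of the product of two spherical Gaussians."""
    d2 = sum((x - y) ** 2 for x, y in zip(c1, c2))
    s = a1 + a2
    return P * P * math.exp(-a1 * a2 * d2 / s) * (math.pi / s) ** 1.5


def overlap_volume(mol_a, mol_b):
    """First-order overlap; each molecule is a list of ((x, y, z), radius) atoms."""
    return sum(
        pair_overlap(alpha(ra), ca, alpha(rb), cb)
        for ca, ra in mol_a
        for cb, rb in mol_b
    )


def shape_tanimoto(mol_a, mol_b):
    """V_qt / (V_q + V_t - V_qt) with Gaussian overlaps in a fixed pose."""
    v_ab = overlap_volume(mol_a, mol_b)
    return v_ab / (overlap_volume(mol_a, mol_a) + overlap_volume(mol_b, mol_b) - v_ab)


# A toy two-atom "molecule" (coordinates in angstroms, radii illustrative).
mol = [((0.0, 0.0, 0.0), 1.7), ((1.2, 0.0, 0.0), 1.52)]
moved = [((x + 0.5, y, z), r) for (x, y, z), r in mol]
print(shape_tanimoto(mol, mol), shape_tanimoto(mol, moved))  # 1.0, then a value below 1
```

In a full screening workflow, the pose of each database conformer would be optimized to maximize `overlap_volume` before the Tanimoto score is reported.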

Table 3: Key Computational Tools and Datasets for Materials Foundation Models

| Resource Name | Type | Primary Function | Access | Relevance to Modalities |
|---|---|---|---|---|
| PubChem [17] | Database | Large public collection of small molecules; source of ~100M molecules for pre-training | Public | SMILES, 2D structures |
| ICSD [19] | Database | Experimentally validated crystal structures | Licensed | 3D crystalline structures |
| ROCS [18] | Software | 3D shape-based molecular similarity searching | Commercial | 3D molecular shape |
| Viz Palette [20] | Tool | Color palette evaluation for data visualization | Open Access | Research communication |
| Materials Project [19] | Database | Computational material properties and structures | Public | 3D crystal structures |
| ZINC/ChEMBL [1] | Database | Commercially available compounds & bioactivity data | Public | SMILES, 2D graphs |
| CSLLM Framework [19] | Model | Crystal synthesizability and precursor prediction | Research | 3D crystal structures |
| MLM-FG [17] | Model | Molecular property prediction with FG masking | Research | SMILES strings |

The integration of multiple data modalities—from sequential SMILES strings to structural 2D graphs and complex 3D crystalline representations—forms the foundation of next-generation AI systems for materials discovery. Each modality offers complementary strengths: SMILES for efficient pre-training on large datasets, 2D graphs for explicit topological information, and 3D structures for capturing shape-dependent properties and synthesizability.

Evidence suggests that specialized approaches within each modality can deliver exceptional performance, from MLM-FG's functional group masking for SMILES-based property prediction to CSLLM's remarkable 98.6% accuracy in 3D crystal synthesizability assessment [17] [19]. The future of materials foundation models lies not in identifying a single superior modality, but in developing sophisticated multimodal approaches that leverage the unique advantages of each representation type, ultimately accelerating the discovery and synthesis of novel functional materials.

The emergence of foundation models represents a paradigm shift in materials discovery, transitioning from hand-crafted feature design to automated, data-driven representation learning [1]. These models, defined as "a model that is trained on broad data (generally using self-supervision at scale) that can be adapted to a wide range of downstream tasks," rely fundamentally on the availability of massive, high-quality datasets [1]. Separating representation learning from downstream tasks lets researchers leverage generalized knowledge acquired from very large volumes of data and apply it to specific challenges in material property prediction, synthesis planning, and molecular generation [1].

Large-scale chemical repositories including PubChem, ZINC, and the Materials Project form the essential data bedrock for training and validating these foundation models. The quality, diversity, and accessibility of data within these repositories directly influence model performance on critical tasks such as predicting conductivity, melting point, flammability, and other properties valuable for battery design and pharmaceutical development [21]. However, the materials science domain presents unique data challenges, as minute structural variations can profoundly impact material properties—a phenomenon known as an "activity cliff" [1]. Models trained on insufficient or non-representative data may overlook these critical effects, potentially leading research down non-productive pathways.

Repository Landscape and Quantitative Analysis

Each major repository offers specialized data types, requiring researchers to select sources based on their specific research objectives and desired material systems. The table below provides a structured comparison of these essential resources.

Table 1: Key Characteristics of Major Materials Data Repositories

| Repository | Primary Data Types | Scale | Key Applications | Access Method |
|---|---|---|---|---|
| PubChem [22] | Small organic molecules, bioactivity data, substance descriptions | 3 primary databases (Substance, Compound, BioAssay) [22] | Drug discovery, bioactivity prediction, chemical biology | Web interface, PUG API [22] |
| ZINC [23] | Commercially-available compounds, purchasable molecules | Over 230 million compounds in ready-to-dock 3D formats [23] | Virtual screening, lead discovery, analog searching | Database downloads, web interface [23] |
| Materials Project [24] | Inorganic crystals, calculated material properties, band structures | Not quantified here; an extensive collection of calculated material properties | Battery materials research, catalyst design, electronic materials | REST API (MPRester), web interface [24] |
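For programmatic access, PubChem's PUG-REST interface encodes a query in the URL path. The sketch below builds a property lookup by compound name and parses the JSON reply using only the standard library. The helper names are illustrative, and the property list is a small example; the PUG-REST documentation lists the full set of properties and the usage limits. The Materials Project's equivalent is the MPRester client from the `mp-api` package, not shown here.

```python
import json
import urllib.parse
import urllib.request

PUG_REST = "https://pubchem.ncbi.nlm.nih.gov/rest/pug"


def property_url(name, properties):
    """PUG-REST URL that looks up compounds by name and returns selected properties as JSON."""
    return f"{PUG_REST}/compound/name/{urllib.parse.quote(name)}/property/{','.join(properties)}/JSON"


def parse_properties(payload):
    """Extract the per-compound property records from a PUG-REST JSON response."""
    return payload["PropertyTable"]["Properties"]


def fetch_properties(name, properties, timeout=30):
    """Network call: fetch and parse the property table for one compound name."""
    with urllib.request.urlopen(property_url(name, properties), timeout=timeout) as resp:
        return parse_properties(json.load(resp))


print(property_url("aspirin", ["MolecularFormula", "MolecularWeight"]))
# https://pubchem.ncbi.nlm.nih.gov/rest/pug/compound/name/aspirin/property/MolecularFormula,MolecularWeight/JSON
# records = fetch_properties("aspirin", ["MolecularFormula", "MolecularWeight"])  # requires network access
```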

Data Extraction and Standardization Protocols

Protocol: Multi-Modal Data Extraction from Scientific Documents

The automation of data extraction from scientific literature and patents is crucial for building the comprehensive datasets needed for foundation model training [1].

- Objective: Automate the extraction of structured materials data (chemical structures, properties, synthesis conditions) from heterogeneous document formats (PDFs, patents, reports) to augment training corpora for foundation models.

- Materials and Inputs: Scientific literature in PDF format, patent documents, chemical database exports, and image files containing molecular structures or data plots.

- Procedure:

- Textual Named Entity Recognition (NER): Employ BERT-based or specialized transformer models (e.g., LLaMat [25]) fine-tuned on materials science text to identify and extract material names, properties, and synthesis parameters [1] [25].

- Image-Based Structure Identification: Process document images using Vision Transformers or Graph Neural Networks to detect and convert depicted molecular structures into machine-readable representations like SMILES or SELFIES [1].

- Plot Data Extraction: Utilize specialized tools (e.g., Plot2Spectra [1]) to extract numerical data points from spectroscopy plots and other graphical data representations.

- Multi-Modal Data Association: Implement schema-based extraction models [1] to link identified materials with their corresponding properties and synthesis details across text and image modalities.

- Data Validation: Cross-reference extracted data against existing databases and apply domain-specific rules to identify and flag inconsistencies.
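The final validation step can be sketched as rule-based checks. Everything specific below is an assumption for illustration: the record layout, the `RULES` table of expected units and plausibility ranges, and the 10% tolerance for disagreement with a reference value. A production pipeline would also convert units rather than only flag them.

```python
# Hypothetical plausibility rules: property -> (expected unit, minimum, maximum).
RULES = {
    "melting_point": ("K", 0.0, 4500.0),
    "band_gap": ("eV", 0.0, 15.0),
    "density": ("g/cm^3", 0.0, 25.0),
}


def validate(record, reference=None, rel_tol=0.10):
    """Return a list of human-readable issues for one extracted record.

    record: {"material": str, "property": str, "value": float, "unit": str}
    reference: optional {(material, property): value} from an existing database.
    """
    rule = RULES.get(record["property"])
    if rule is None:
        return ["no rule for property"]
    unit, lo, hi = rule
    issues = []
    if record["unit"] != unit:
        issues.append(f"unexpected unit {record['unit']!r} (expected {unit!r})")
    if not lo <= record["value"] <= hi:
        issues.append(f"value {record['value']} outside plausible range [{lo}, {hi}]")
    if reference is not None:
        ref = reference.get((record["material"], record["property"]))
        if ref is not None and abs(record["value"] - ref) > rel_tol * abs(ref):
            issues.append(f"differs from reference value {ref} by more than {rel_tol:.0%}")
    return issues


extracted = {"material": "Si", "property": "band_gap", "value": 11.2, "unit": "eV"}
print(validate(extracted, reference={("Si", "band_gap"): 1.12}))
# flags the tenfold disagreement, e.g. a decimal-point error during extraction
```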

Protocol: Chemical Structure Standardization

Structural inconsistencies in chemical data present significant obstacles to model training. The following protocol ensures data uniformity.

- Objective: Convert diverse chemical structure representations into standardized, canonical forms to ensure consistency across training data and improve foundation model performance.

- Materials and Inputs: Chemical structures in various formats (SMILES, SELFIES, molecular structure images, connection tables).

- Procedure:

- Structure Validation: Check for invalid atom valences, unusual bond lengths, and other structural impossibilities. Reject structures that cannot be corrected automatically (approximately 0.36% of cases in PubChem) [26].

- Aromaticity Perception: Apply consistent aromaticity models (e.g., Hückel's rule) to standardize ring system representations, converting between Kekulé and aromatic forms as needed [26].

- Tautomer Standardization: Generate canonical tautomeric representatives using rules based on predicted stability or count-based scoring functions. Across the full standardization pipeline, approximately 44% of PubChem structures are modified in some way [26].

- Stereochemistry Assignment: Detect and explicitly define stereocenters using standardized stereodescriptors.

- Charge Normalization: Adjust molecular representations to predominant ionization states at physiological pH when appropriate for the application.

- Quality Control: The PubChem standardization service is publicly accessible for validation and comparison. Continuous monitoring of modification rates and rejection reasons is essential [26].
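The valence part of structure validation reduces to summing bond orders per atom. This minimal sketch assumes neutral atoms, explicit hydrogens, and Kekulé (integer) bond orders, and uses a small hypothetical valence table; full standardizers also have to handle formal charges, aromaticity, and hypervalent states.

```python
# Allowed total bond orders for neutral atoms (small illustrative subset).
ALLOWED_VALENCE = {"H": {1}, "C": {4}, "N": {3}, "O": {2}, "F": {1}, "Cl": {1}, "S": {2, 4, 6}}


def valence_errors(atoms, bonds):
    """Return indices of atoms whose summed bond order is not an allowed valence.

    atoms: element symbols with hydrogens explicit; bonds: (i, j, order) tuples.
    Elements missing from the table are flagged too, so they get manual review.
    """
    totals = [0] * len(atoms)
    for i, j, order in bonds:
        totals[i] += order
        totals[j] += order
    return [k for k, el in enumerate(atoms) if totals[k] not in ALLOWED_VALENCE.get(el, set())]


formaldehyde = (["C", "O", "H", "H"], [(0, 1, 2), (0, 2, 1), (0, 3, 1)])
print(valence_errors(*formaldehyde))  # []
pentavalent_c = (["C"] + ["H"] * 5, [(0, k, 1) for k in range(1, 6)])
print(valence_errors(*pentavalent_c))  # [0]
```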

Foundation Model Training and Workflows

Experimental Workflow for Foundation Model Development

The development of foundation models for materials science follows a systematic workflow that transforms raw data into predictive capabilities. The diagram below illustrates this multi-stage process.

Protocol: Training Domain-Specific Foundation Models

Building on the structured workflow, this protocol details the specific steps for creating foundation models tailored to materials discovery tasks.

- Objective: Develop a domain-adapted foundation model (e.g., LLaMat [25]) for materials research through continued pre-training of base LLMs on specialized scientific corpora.

Research Reagent Solutions:

Table 2: Essential Research Reagents for AI-Driven Materials Discovery

| Resource/Platform | Function | Application Context |
|---|---|---|
| SMILES/SELFIES | Text-based molecular representation | Encoding chemical structures for language model processing [1] |
| Transformer Architectures | Neural network backbone | Base model for understanding molecular sequences [1] |
| ALCF Supercomputers | High-performance computing | Training large-scale models on billions of molecules [21] |
| LLaMA Base Models | Foundational language models | Starting point for domain-specific adaptation [25] |
| Materials Science Corpus | Domain-specific training data | Continued pre-training for domain adaptation [25] |

Procedure:

- Data Curation: Compile a diverse corpus of materials science literature, crystallographic data (CIF files), and existing chemical databases. For battery materials, this includes data on electrolytes and electrodes [21].

- Molecular Representation: Convert all chemical structures to standardized SMILES or SELFIES strings to create a unified textual representation [1] [21].

- Continued Pre-training: Initialize with a base LLM (e.g., LLaMA-2/3) and continue unsupervised training on the domain corpus. For the LLaMat model, this involves training on extensive materials literature and crystallographic data [25].

- Task-Specific Fine-Tuning: Adapt the pre-trained model to downstream tasks (property prediction, structure generation) using smaller, labeled datasets. Experiments show that models trained on billions of molecules significantly outperform single-property prediction models [21].

- Model Alignment: Employ reinforcement learning or preference optimization to align model outputs with scientific correctness and safety requirements.

- Validation: Systematically evaluate the model on materials-specific NLP tasks, structured information extraction, and crystal structure generation capabilities [25].

Applications and Validation in Materials Discovery

Application Notes: Property Prediction and Inverse Design

Foundation models enable powerful property prediction capabilities that accelerate the discovery of novel materials for specific applications.

- Battery Materials Discovery: Researchers at the University of Michigan used Argonne supercomputers to train foundation models that predict key electrolyte properties including conductivity, melting point, and flammability [21]. The model, trained on billions of molecules, unified multiple single-property prediction capabilities and outperformed previous specialized models, demonstrating the efficiency of the foundation approach [21].

- Crystal Structure Generation: The LLaMat-CIF variant demonstrates unprecedented capabilities in generating stable crystal structures, achieving high coverage across the periodic table [25]. This inverse design approach enables researchers to discover novel crystalline materials with desired properties without exhaustive experimental screening.

- Multi-Modal Data Integration: Advanced foundation models can integrate textual information with structural data (e.g., CIF files) and numerical properties, enabling more accurate predictions of structure-property relationships [25]. This is particularly valuable for predicting properties like band gaps in inorganic solids where 3D structural information is crucial [1].

Protocol: Validation and Experimental Feedback

- Objective: Establish a rigorous validation pipeline to verify model predictions through computational and experimental methods.

- Procedure:

- Computational Validation: Compare property predictions against first-principles calculations (DFT, molecular dynamics) for known materials to establish accuracy benchmarks [24].

- Cross-Validation: Perform k-fold cross-validation using available reference data, experimental or computed, from repositories such as PubChem and the Materials Project.

- Prospective Validation: Select top candidate materials identified by the model for experimental synthesis and testing [21].

- Model Refinement: Incorporate experimental results into future training cycles to improve model accuracy through continuous learning.

- Case Study Implementation: The University of Michigan team plans to collaborate with laboratory scientists to synthesize and test the most promising battery material candidates identified by their AI models, closing the loop between prediction and validation [21].
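The cross-validation step can be sketched without any ML library. This generic k-fold splitter is an illustrative assumption, not the cited team's code. For materials data, random splits can place near-duplicate compositions in both training and test folds, so grouping by composition or structure family is often used instead.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation over n samples."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)       # reproducible shuffle
    folds = [idx[f::k] for f in range(k)]  # round-robin assignment to k folds
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g, fold in enumerate(folds) if g != f for i in fold)
        yield train, test


for train, test in k_fold_indices(10, 5):
    print(len(train), len(test))  # 8 2 for each fold
```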

The integration of large-scale data repositories with foundation models is fundamentally transforming materials discovery. The structured protocols outlined in this document provide a roadmap for researchers to leverage these powerful resources effectively. As foundation models continue to evolve, their ability to predict material properties, generate novel structures, and accelerate the design of next-generation materials will play an increasingly crucial role in addressing global challenges in energy, healthcare, and sustainability.

Future developments will likely focus on improved multi-modal data integration, more sophisticated standardization approaches for complex material systems, and enhanced model architectures specifically designed for scientific discovery. The researchers behind these initiatives emphasize making their models available to the broader scientific community, promising to democratize access to AI-driven materials discovery [21] [25].

How Foundation Models Work: Architectures and Real-World Applications in Discovery and Synthesis

The advent of foundation models is fundamentally reshaping the paradigm of property prediction in materials science and drug discovery. These models, trained on broad data using self-supervision and adaptable to a wide range of downstream tasks, offer a powerful alternative to traditional, labor-intensive methods for predicting critical properties such as energy, band gap, and bio-activity [1]. This shift is enabling a more data-driven approach to inverse design, where desired properties guide the discovery of new molecular entities and materials [1].

Traditional approaches to property characterization have relied on cycles of material synthesis and extensive experimental testing, or computationally expensive first-principles calculations like Density Functional Theory (DFT) [27]. While accurate, these methods are often prohibitively slow or costly for screening large chemical spaces. Foundation models, particularly large language models (LLMs) adapted for scientific domains, are now demonstrating unprecedented capabilities in extracting knowledge from the vast scientific literature and structured databases, accelerating the prediction of key properties and the design of novel materials [25].

Foundation Models in Materials Property Prediction

Foundation models in materials science are typically built upon transformer architectures and can be categorized into encoder-only and decoder-only types. Encoder-only models, drawing from the Bidirectional Encoder Representations from Transformers (BERT) architecture, excel at understanding and representing input data, making them well-suited for property prediction tasks [1]. Decoder-only models, on the other hand, are designed for generative tasks, such as predicting and producing new chemical structures token-by-token [1].

A key strength of the foundation model approach is the separation of representation learning from specific downstream tasks. The model is first pre-trained in an unsupervised manner on massive, unlabeled corpora of text and structured chemical data, learning generalizable representations of chemical language and structure. This base model can then be fine-tuned with significantly smaller amounts of labeled data to perform specific tasks like band gap prediction or bio-activity classification [1]. Specialized models like LLaMat demonstrate this through continued pre-training of general-purpose LLMs (like LLaMA) on extensive collections of materials literature and crystallographic data, endowing them with superior capabilities in materials-specific natural language processing and structured information extraction [25].

Table 1: Types of Foundation Models for Property Prediction

| Model Type | Base Architecture Examples | Primary Function | Common Applications in Materials Discovery |
|---|---|---|---|
| Encoder-only | BERT [1] | Understanding/representing input data | Property prediction from structure [1] |
| Decoder-only | GPT [1] | Generating new outputs token-by-token | Molecular generation, synthesis planning [1] |
| Domain-Adapted LLMs | LLaMat, LLaMat-CIF [25] | Domain-specific NLP & structured data tasks | Information extraction, crystal structure generation [25] |

Application Note 1: Band Gap Prediction in Conjugated Polymers

Background and Objective

Predicting the band gap energy (E_g) of donor–acceptor (D–A) conjugated polymers (CPs) is critical for the design of efficient organic photovoltaics (OPVs). The band gap directly influences the light-harvesting efficiency and open-circuit voltage of the solar cell. Traditional DFT calculations, while accurate, are computationally expensive, creating a bottleneck for high-throughput screening [27]. This application note details a machine learning Quantitative Structure-Property Relationship (QSPR) approach to build accurate predictors for E_g, leveraging a manually curated dataset of CPs.

Experimental Protocol and Workflow

Data Set Curation

- Source Selection: Manually select 60 commonly used donor units and 52 acceptor units from successfully synthesized and characterized CPs in organic electronics literature [27].

- Structure Enumeration: Construct 3,120 unique D–A structures using a genetic algorithm for R-group enumeration to ensure a 1:1 donor-to-acceptor ratio [27].

- Reference Data Generation: Perform DFT calculations (e.g., using the B3LYP functional and the 6-311+G(d) basis set) on the training set to obtain reference E_g values for model training and validation [27].
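The size of the enumerated library follows directly from pairing every donor with every acceptor: 60 × 52 = 3,120 repeat units. The sketch below reproduces that count with an exhaustive Cartesian product over placeholder unit labels. It is a stand-in for, not a reimplementation of, the genetic-algorithm R-group enumeration used in the study, and real use would substitute donor and acceptor SMILES fragments for the hypothetical `D0`, `A0`, ... labels.

```python
from itertools import product


def enumerate_da_units(donors, acceptors):
    """Every donor-acceptor repeat unit with a 1:1 ratio (one donor, one acceptor)."""
    return list(product(donors, acceptors))


donors = [f"D{i}" for i in range(60)]     # placeholder labels for 60 donor units
acceptors = [f"A{j}" for j in range(52)]  # placeholder labels for 52 acceptor units
units = enumerate_da_units(donors, acceptors)
print(len(units))  # 3120
```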

Descriptor Extraction and Feature Selection

- Descriptor Generation: Compute a comprehensive set of molecular descriptors and fingerprints. This includes:

- Polymer Fingerprints: Radial, MOLPRINT2D, dendritic, linear fingerprints to encode composition and structure [27].

- Electronic Descriptors: Frontier orbital energy levels (HOMO/LUMO) and other electronic properties [27].

- Other Cheminformatic Descriptors: Topological, molecular, and functional group count descriptors [27].

- Feature Selection: Apply feature selection techniques (e.g., correlation analysis, feature importance ranking) to identify the most informative descriptors and reduce dimensionality, which is crucial for improving model performance and generalizability [27].
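A common way to implement the feature-selection step is a correlation filter. This sketch is one possible choice, not necessarily the study's: it ranks descriptor columns by absolute Pearson correlation with the target and greedily skips any column that is nearly collinear (|r| ≥ 0.95, an assumed threshold) with a column already chosen.

```python
import math


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0  # constant column -> 0


def select_features(X, y, names, top_k=10, redundancy=0.95):
    """Rank columns of X by |r| with y, skipping near-duplicates of chosen columns."""
    cols = [[row[j] for row in X] for j in range(len(names))]
    ranked = sorted(range(len(names)), key=lambda j: abs(pearson(cols[j], y)), reverse=True)
    chosen = []
    for j in ranked:
        if len(chosen) == top_k:
            break
        if all(abs(pearson(cols[j], cols[c])) < redundancy for c in chosen):
            chosen.append(j)
    return [names[j] for j in chosen]


# Toy descriptor matrix: f1 is a rescaled copy of f0, f2 is unrelated to the target.
X = [[1, 2, 1], [2, 4, -1], [3, 6, 1], [4, 8, -1], [5, 10, 1]]
y = [1.0, 2.0, 3.0, 4.0, 5.0]
print(select_features(X, y, ["f0", "f1", "f2"], top_k=2))
```

Only one of the two redundant descriptors survives, which is the dimensionality reduction the protocol aims for.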

Model Training and Validation

- Algorithm Selection: Employ a variety of linear and nonlinear regression algorithms, such as Kernel Partial Least-Squares (KPLS) regression.

- Performance Benchmarking: Systematically benchmark model performance using different descriptor types and algorithm combinations. Validate models using hold-out test sets and cross-validation, reporting metrics like R².
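KPLS regression itself is not reproduced here. As a hedged illustration of the validation side only, the sketch below computes R² inside a shuffled k-fold cross-validation loop, with an ordinary least-squares line standing in for the regression model; the fold count, seed, toy data, and the stand-in model are all assumptions.

```python
import random


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def kfold_indices(n, k=5, seed=0):
    """Yield (train_idx, test_idx) index lists for shuffled k-fold cross-validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def fit_line(x, y):
    """Ordinary least squares for y = a*x + b; a stand-in model, not KPLS."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    a = sum((u - mx) * (v - my) for u, v in zip(x, y)) / sum((u - mx) ** 2 for u in x)
    return a, my - a * mx


def cross_validated_r2(x, y, k=5):
    """Mean R² of the stand-in model over k held-out folds."""
    scores = []
    for train, test in kfold_indices(len(x), k):
        a, b = fit_line([x[i] for i in train], [y[i] for i in train])
        preds = [a * x[i] + b for i in test]
        scores.append(r2_score([y[i] for i in test], preds))
    return sum(scores) / len(scores)


# Toy data: one descriptor with a slightly noisy linear relation to a band-gap-like target.
x = [i / 10 for i in range(30)]
y = [1.5 + 0.8 * v + 0.05 * ((-1) ** i) for i, v in enumerate(x)]
print(round(cross_validated_r2(x, y), 3))
```

Reporting the mean over held-out folds, rather than the fit on the full data, is what makes the R² an estimate of generalization.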

The following workflow diagram illustrates the protocol for band gap prediction:

Key Results and Data

The study provided a quantitative comparison of different model configurations, yielding the following results:

Table 2: Performance of ML Models for Band Gap (E_g) Prediction

| Model Algorithm | Key Descriptor/Fingerprint Types | Performance (R²) | Key Findings |
|---|---|---|---|
| KPLS Regression | Radial Fingerprint | 0.899 | Achieved highest accuracy for E_g prediction [27] |
| KPLS Regression | MOLPRINT2D Fingerprint | 0.897 | Performance nearly equivalent to Radial fingerprint [27] |
| Various Models | Electronic Descriptors (e.g., HOMO/LUMO) | Significant Performance Improvement | Critical for predicting electronic properties like E_g [27] |

Application Note 2: Foundation Models for Molecular Property Prediction

Data Extraction and Curation for Pre-training

The performance of foundation models is heavily dependent on the quality, size, and diversity of their training data. For materials science, this involves large-scale data extraction from multiple sources [1].

- Structured Databases: Resources like PubChem, ZINC, ChEMBL, and the Materials Project provide structured information on materials and molecules for training chemical foundation models [1].

- Scientific Literature and Patents: A significant volume of materials information is embedded in documents (scientific reports, patents). Advanced data-extraction models use:

  - Named Entity Recognition (NER): To identify material names and properties from text [1].

  - Multimodal Models: To extract data from text, tables, and images (e.g., molecular structures from patent images) simultaneously [1].

  - Tool Integration: Using specialized algorithms (e.g., Plot2Spectra for spectroscopy plots, DePlot for charts) to convert visual information into structured data for LLMs [1].
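Production NER systems are learned models, but the input/output contract is easy to show. The sketch below is a deliberately simple rule-based stand-in, not NER in the machine-learning sense: a regular expression that pulls (property, value, unit) triples for a hypothetical, hard-coded list of properties out of free text.

```python
import re

# Hypothetical rule-based stand-in for a learned NER model: it only recognizes
# "<property> of/is/= <number> <unit>" phrases for a few hard-coded properties.
PROPERTY_PATTERN = re.compile(
    r"(?P<prop>band gap|bulk modulus|melting point)\s+(?:of|is|=)\s+"
    r"(?P<value>-?\d+(?:\.\d+)?)\s*(?P<unit>eV|GPa|K)",
    re.IGNORECASE,
)


def extract_properties(text):
    """Return (property, value, unit) triples found in free text."""
    return [
        (m.group("prop").lower(), float(m.group("value")), m.group("unit"))
        for m in PROPERTY_PATTERN.finditer(text)
    ]


sentence = "The film showed a band gap of 1.85 eV and a bulk modulus of 120 GPa."
print(extract_properties(sentence))
# [('band gap', 1.85, 'eV'), ('bulk modulus', 120.0, 'GPa')]
```

A learned model replaces the hand-written pattern so that unseen phrasings and material names are also captured; the structured output is what feeds the pre-training corpus.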

Protocol for Fine-tuning on Downstream Tasks

Once a base foundation model is pre-trained, it can be adapted for specific property prediction tasks.

- Task Formulation: Frame the prediction task (e.g., energy, band gap, bio-activity) as a regression or classification problem.

- Dataset Preparation: Prepare a labeled dataset for the target property. This dataset can be significantly smaller than the pre-training corpus.

- Model Fine-tuning: Update the weights of the pre-trained model using the task-specific dataset. This process allows the model to leverage its general chemical knowledge while specializing in the target property.

- Alignment (Optional): The model can undergo an alignment process where its outputs are conditioned to favor chemically valid, synthesizable, or otherwise desirable structures, improving the practical utility of its predictions [1].
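The simplest form of adaptation, often called linear probing, keeps the pre-trained encoder frozen and trains only a small task head on the labeled set. The sketch below shows that variant in plain Python; full fine-tuning as described above would also update the encoder weights. The `pretrained_embed` featurizer, the molecules, and the target values are hypothetical toys.

```python
def pretrained_embed(smiles):
    """Hypothetical stand-in for a frozen foundation-model encoder.

    A real model would return a learned embedding; this toy just counts
    uppercase C, O and N characters and appends a bias feature.
    """
    return [smiles.count("C"), smiles.count("O"), smiles.count("N"), 1.0]


def fine_tune_head(labeled, lr=0.01, epochs=500):
    """Fit a linear regression head on frozen embeddings with full-batch gradient descent."""
    feats = [(pretrained_embed(s), y) for s, y in labeled]
    dim = len(feats[0][0])
    w = [0.0] * dim
    history = []  # mean squared error before each update
    for _ in range(epochs):
        grad = [0.0] * dim
        mse = 0.0
        for x, y in feats:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            mse += err * err
            for i in range(dim):
                grad[i] += 2.0 * err * x[i] / len(feats)
        history.append(mse / len(feats))
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w, history


# Hypothetical small labeled set: (SMILES, target property in arbitrary units).
labeled = [("CCO", 1.1), ("CCCC", 2.1), ("CC(=O)O", 0.5), ("CN", 0.8), ("CCN(C)C", 2.3)]
weights, history = fine_tune_head(labeled)
print(f"MSE: {history[0]:.3f} -> {history[-1]:.3f}")
```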

The logical flow of data from raw sources to a functional property predictor is shown below:

The Scientist's Toolkit: Research Reagents and Infrastructure

Successful implementation of property prediction pipelines relies on a suite of data resources, software, and infrastructure.

Table 3: Essential Resources for Materials Property Prediction

| Resource Name | Type | Function and Description |
|---|---|---|
| PubChem, ZINC, ChEMBL [1] | Chemical Database | Provides vast, structured information on molecules for training and validating foundation models. |
| Materials Project [28] | Materials Database | Offers open access to computed properties of known and predicted materials, enabling benchmarking. |
| SMILES/SELFIES [1] | Molecular Representation | String-based representations of molecular structure used as input for many 2D property prediction models. |
| MGED (Materials Genome Engineering Databases) [28] | Data Infrastructure | A platform for integrated management of shared materials data and services, simplifying data collection and analysis. |
| Kadi4Mat [29] | Research Data Infrastructure | Combines an electronic lab notebook with a repository, supporting structured data storage and reproducible workflows throughout the research process. |
| Schrödinger AutoQSAR [27] | Software Platform | Used for automated QSPR model building, including descriptor generation, feature selection, and model training. |

The integration of foundation models into property prediction marks a significant leap forward for materials discovery and drug development. By building accurate predictors for energy, band gap, and bio-activity, researchers can rapidly screen vast chemical spaces, guiding synthesis efforts toward the most promising candidates. As outlined above, this involves a sophisticated pipeline from multimodal data extraction and curation to model pre-training and task-specific fine-tuning. The continued development of specialized models like LLaMat, coupled with robust data infrastructures that adhere to FAIR principles, promises to further accelerate the design of next-generation materials and therapeutics, solidifying the role of AI as an indispensable partner in scientific research.

Generative inverse design represents a paradigm shift in materials science and drug discovery. Unlike traditional forward problems that predict properties from a given structure, inverse design starts with desired properties and aims to identify the molecular or crystalline structures that exhibit them [30] [31]. This approach directly addresses the core challenge of functional materials design, where researchers seek optimal structures under specific constraints. The advent of foundation models and specialized generative architectures has significantly advanced this field, enabling the exploration of chemical spaces far beyond human intuition and conventional screening methods [1] [32].

The fundamental challenge in inverse design lies in the one-to-many mapping inherent in property-to-structure relationships. For a small set of target properties, numerous molecular structures may provide satisfactory solutions. However, as hypothesized in Large Property Models (LPMs), supplying a sufficient number of property constraints can make the property-to-structure mapping unique, effectively narrowing the solution space to optimal candidates [30]. This principle underpins recent advancements in generative models for both organic molecules and inorganic crystals, bridging the gap between computational prediction and experimental synthesis.
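The narrowing effect of additional constraints can be illustrated with a toy lookup over a fixed candidate pool. This is only an analogy for the LPM hypothesis (an LPM generates structures rather than filtering a list), and every molecule name, property value, and the tolerance below is made up.

```python
# Hypothetical toy property table (made-up values, not real data).
CANDIDATES = {
    "mol_A": {"logp": 1.2, "gap": 5.1, "hbd": 1},
    "mol_B": {"logp": 1.3, "gap": 6.0, "hbd": 1},
    "mol_C": {"logp": 1.1, "gap": 5.2, "hbd": 2},
    "mol_D": {"logp": 3.0, "gap": 5.1, "hbd": 1},
}


def matches(props, targets, tol=0.25):
    """True if every targeted property lies within tol of its target value."""
    return all(abs(props[k] - v) <= tol for k, v in targets.items())


def solutions(targets):
    """Names of all candidates that satisfy every constraint in targets."""
    return sorted(name for name, p in CANDIDATES.items() if matches(p, targets))


for targets in ({"logp": 1.2},
                {"logp": 1.2, "gap": 5.1},
                {"logp": 1.2, "gap": 5.1, "hbd": 1}):
    print(len(targets), "constraints ->", solutions(targets))
# 1 constraints -> ['mol_A', 'mol_B', 'mol_C']
# 2 constraints -> ['mol_A', 'mol_C']
# 3 constraints -> ['mol_A']
```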

Foundation Models and Key Architectures

Foundation models pretrained on broad data have emerged as powerful tools for materials discovery. These models, adapted from architectures like transformers, can be fine-tuned to specific downstream tasks with relatively small labeled datasets [1]. The materials informatics field has developed specialized foundation models that understand atomic interactions across the periodic table, enabling property predictions for multi-component systems without recalculating fundamental physics for each new material [33].

Table 1: Key Generative Model Architectures for Inverse Design

| Model Name | Architecture Type | Application Domain | Key Capabilities |
|---|---|---|---|
| Large Property Models (LPM) | Transformer [30] | Organic Molecules | Property-to-molecular-graph mapping using 23 chemical properties |
| MatterGen | Diffusion Model [32] | Inorganic Crystals | Generates stable, diverse materials across periodic table |
| Con-CDVAE | Conditional Variational Autoencoder [33] | Crystal Structures | Property-constrained generation via latent space embedding |
| CDVAE | Variational Autoencoder [32] | Crystal Structures | Base generative model for crystalline materials |
| DiffCSP | Diffusion Model [32] | Crystal Structures | Structure prediction via diffusion process |

These models employ different strategies for conditioning generation on properties. MatterGen uses adapter modules for fine-tuning on property constraints and classifier-free guidance during generation [32]. LPMs directly learn the property-to-structure mapping by training on multiple molecular properties simultaneously [30]. Con-CDVAE implements a two-step training scheme that incorporates an additional network to model the latent distribution between crystal structures and multiple property constraints [33].
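Classifier-free guidance, which MatterGen uses during generation [32], combines an unconditional and a property-conditioned prediction from the same network. The sketch below shows the standard combination rule on plain Python lists; the function name and toy numbers are illustrative, and this is not MatterGen's actual code.

```python
def classifier_free_guidance(uncond, cond, guidance_scale):
    """Standard classifier-free guidance rule, applied elementwise.

    guided = uncond + w * (cond - uncond)
    w = 0 recovers the unconditional prediction, w = 1 the conditional one,
    and w > 1 extrapolates further toward the property condition.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0, -0.5]  # toy unconditional score estimate
cond = [1.0, 1.0, 0.5]     # toy property-conditioned score estimate
print(classifier_free_guidance(uncond, cond, 2.0))  # [2.0, 1.0, 1.5]
```

Larger guidance scales trade sample diversity for closer adherence to the target property.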

Experimental Protocols and Methodologies

Protocol: Large Property Model Training for Molecular Design

Purpose: Train a transformer model to generate molecular structures conditioned on multiple property constraints.

Materials and Data Requirements:

- Dataset Curation: Collect ~1.3 million molecules with up to 14 heavy atoms (CHONFCl elements) from sources like PubChem [30]

- Property Calculation: Compute 23 molecular properties using GFN2-xTB (dipole moment, HOMO-LUMO gap, solvation free energies, etc.) and PubChem descriptors (logP, H-bond acceptors/donors, etc.)

- Structure Optimization: Generate optimized 3D geometries using Auto3D for all molecular structures

Training Procedure:

Data Preprocessing:

- Split dataset into training/validation/test sets (typical ratio: 80/10/10)

- Normalize all property values to zero mean and unit variance

- Represent molecular graphs as SELFIES or SMILES strings
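The split and normalization steps can be sketched in plain Python. The sketch assumes, as is common practice though not stated in [30], that normalization statistics are computed on the training split only and then reused for validation and test data; the record format, placeholder identifiers, and toy values are hypothetical, and only 2 of the 23 properties are shown.

```python
import random
import statistics


def split_dataset(records, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle and split records into train/validation/test lists."""
    records = list(records)
    random.Random(seed).shuffle(records)
    n_train = int(ratios[0] * len(records))
    n_val = int(ratios[1] * len(records))
    return (records[:n_train],
            records[n_train:n_train + n_val],
            records[n_train + n_val:])


def fit_zscore(property_rows):
    """Per-property mean and population std, computed on the training split only."""
    columns = list(zip(*property_rows))
    means = [statistics.mean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # guard constant columns
    return means, stds


def apply_zscore(row, means, stds):
    """Scale one property vector to zero mean and unit variance."""
    return [(v - m) / s for v, m, s in zip(row, means, stds)]


# Hypothetical records: (placeholder molecule id, property vector).
records = [(f"mol_{i}", [float(i), float(i % 7)]) for i in range(100)]
train, val, test = split_dataset(records)
means, stds = fit_zscore([props for _, props in train])
train_norm = [apply_zscore(props, means, stds) for _, props in train]
print(len(train), len(val), len(test))  # 80 10 10
```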

Model Architecture:

- Implement transformer architecture with encoder-decoder structure

- Configure input layer to accept property vectors of length 23

- Design output layer to generate molecular graph representations token-by-token

Training Configuration:

- Optimize parameters to learn the property-to-structure mapping, written in [30] as f(p) = P(G), where p is the property vector and G the molecular graph

- Employ teacher forcing during training with cross-entropy loss

- Train until the validation loss plateaus
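The two training ingredients named above can be sketched without any deep-learning framework: building shifted decoder inputs and targets for teacher forcing, and the token-level cross-entropy loss. The property-vector encoder and the transformer itself are omitted; the special tokens and the toy probabilities are assumptions.

```python
import math

BOS, EOS = "<bos>", "<eos>"


def teacher_forcing_pair(tokens):
    """Return (decoder_input, decoder_target): the target shifted by one position.

    During training the decoder sees the ground-truth prefix, not its own
    previous predictions, and must predict the next token at each step.
    """
    seq = [BOS] + list(tokens) + [EOS]
    return seq[:-1], seq[1:]


def cross_entropy(step_probs, targets):
    """Mean negative log-likelihood of the target token at each decoding step.

    step_probs: one dict per step mapping token -> predicted probability.
    """
    return -sum(math.log(p[t]) for p, t in zip(step_probs, targets)) / len(targets)


selfies_tokens = ["[C]", "[C]", "[O]"]  # SELFIES tokens for ethanol
dec_in, dec_target = teacher_forcing_pair(selfies_tokens)
print(dec_in)      # ['<bos>', '[C]', '[C]', '[O]']
print(dec_target)  # ['[C]', '[C]', '[O]', '<eos>']

# A toy model that is uniform over a 4-token vocabulary scores log(4) per token.
vocab = ["[C]", "[O]", "[N]", EOS]
uniform = [{t: 0.25 for t in vocab} for _ in dec_target]
loss = cross_entropy(uniform, dec_target)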

Conditional Generation:

- Sample from P(G|p0, p1, ..., pN) by providing the target property vector

- Use beam search or nucleus sampling for diverse generation

- Validate generated structures with separate property prediction models
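Nucleus (top-p) sampling restricts each decoding step to the smallest set of most-probable tokens whose cumulative probability reaches a threshold p, then samples within that set. A minimal sketch over a single step's token distribution follows; beam search is not shown, and the toy distribution and p values are hypothetical.

```python
import random


def nucleus_sample(probs, p=0.9, rng=random):
    """Sample one token from the smallest top-probability set with mass >= p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, mass = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        mass += prob
        if mass >= p:
            break
    tokens, weights = zip(*nucleus)
    # random.choices treats weights as relative, i.e. renormalizes within the nucleus
    return rng.choices(tokens, weights=weights, k=1)[0]


step_probs = {"[C]": 0.6, "[O]": 0.3, "[N]": 0.1}
rng = random.Random(0)
print([nucleus_sample(step_probs, p=0.85, rng=rng) for _ in range(5)])
```

With p = 0.85 the low-probability "[N]" token is never drawn, which suppresses unlikely (often invalid) continuations while keeping some diversity.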

Validation: Assess reconstruction accuracy, structural validity, and property matching for generated molecules.

Protocol: Active Learning Framework for Crystal Generation

Purpose: Implement an iterative active learning cycle to enhance generative model performance in data-scarce property regions.

Materials:

- Initial Dataset: Curate from MatBench (e.g., matbench_log_kvrh) or Materials Project [33]

- Generative Model: Con-CDVAE or MatterGen as conditional generator

- Property Predictor: Foundation Atomic Models (MACE-MP-0) or graph neural networks (CGCNN)

- Validation Tools: DFT calculation software (VASP, Quantum ESPRESSO)

Procedure:

Initial Training:

- Preprocess dataset: remove unstable structures, filter by complexity, focus on target material classes

- Train conditional generative model (Con-CDVAE) on initial dataset

- Set training parameters: 600 steps with weighted losses for different structural attributes [33]

Active Learning Cycle:

- Generation Phase: Use trained model to generate candidate structures with target properties

- Screening Phase: Implement three-stage screening:

  - Stage 1: Structural stability filter (e.g., symmetry check)

  - Stage 2: Property prediction using FAMs or GNNs

  - Stage 3: DFT validation for selected candidates

- Expansion Phase: Add validated structures to training dataset

- Retraining Phase: Fine-tune generative model on expanded dataset

Iteration:

- Repeat active learning cycle for 3-5 iterations

- Monitor improvement in success rate of generating stable, novel crystals with target properties

- Focus generation on challenging property regions (e.g., high bulk modulus >350 GPa)

Validation: Evaluate percentage of generated structures that are stable, unique, and new (SUN) after DFT relaxation.
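The control flow of the cycle can be written as a short loop. In the sketch below every callable (generator, stability filter, property predictor, DFT check, retraining routine) is a hypothetical placeholder to be replaced by real tools such as Con-CDVAE, MACE-MP-0, or a DFT workflow, and the `top_k` cut-off between stages 2 and 3 is an assumption about how candidates are chosen for expensive validation.

```python
def active_learning(dataset, generate, passes_stability, predict, dft_confirms,
                    retrain, target, n_iterations=3, top_k=5):
    """Generate -> three-stage screen -> expand -> retrain, repeated n_iterations times.

    All callables are hypothetical placeholders for real generators, filters,
    predictors, DFT workflows, and training routines.
    """
    model = retrain(None, dataset)
    for _ in range(n_iterations):
        candidates = generate(model, target)                        # generation phase
        stage1 = [c for c in candidates if passes_stability(c)]     # stage 1: cheap filter
        stage2 = sorted(stage1, key=lambda c: abs(predict(c) - target))[:top_k]  # stage 2
        validated = [c for c in stage2 if dft_confirms(c)]          # stage 3: DFT check
        dataset = dataset + [c for c in validated if c not in dataset]  # expansion phase
        model = retrain(model, dataset)                             # retraining phase
    return model, dataset


# Toy stand-ins: "structures" are integers and the "model" is the best value seen so far.
model, data = active_learning(
    dataset=[0],
    generate=lambda m, t: [m + i for i in range(10)],
    passes_stability=lambda c: c % 2 == 0,
    predict=lambda c: c,
    dft_confirms=lambda c: True,
    retrain=lambda m, d: max(d),
    target=100,
)
print(model, len(data))  # 24 13
```

In the toy run each iteration pushes the "model" closer to the under-represented target region, mirroring how validated structures expand the training data where it was scarce.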

Active Learning Workflow for Crystal Generation

Protocol: Diffusion-Based Crystal Generation with MatterGen

Purpose: Generate novel, stable inorganic crystals with targeted properties using diffusion models.

Materials:

- Dataset: Alex-MP-20 (607,683 stable structures with up to 20 atoms from Materials Project and Alexandria) [32]

- Model: MatterGen diffusion model with adapter modules

- Property Predictors: Fine-tuned for target properties (mechanical, electronic, magnetic)

- Validation Set: Alex-MP-ICSD (850,384 structures for stability assessment)

Procedure:

Base Model Pretraining:

- Train diffusion process on Alex-MP-20 dataset

- Configure corruption processes for atom types, coordinates, and periodic lattice

- Implement periodic boundary-aware coordinate diffusion

- Train score network with invariant outputs for atom types and equivariant outputs for coordinates/lattice
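Periodic boundary-aware diffusion means that noise added to fractional coordinates must keep atoms inside the unit cell, and that distances must respect periodicity. The plain-Python sketch below illustrates both ideas with a wrap-around Gaussian perturbation and a minimum-image difference; it is a simplified, assumption-level illustration, not MatterGen's actual corruption process, noise schedule, or lattice diffusion.

```python
import random


def wrap01(x):
    """Map a fractional coordinate into [0, 1) (periodic boundary)."""
    r = x % 1.0
    return 0.0 if r == 1.0 else r  # x % 1.0 can round to 1.0 for tiny negative x


def corrupt_fractional_coords(frac_coords, sigma, rng=random):
    """Add Gaussian noise to fractional coordinates and wrap them back into the cell."""
    return [[wrap01(x + rng.gauss(0.0, sigma)) for x in atom] for atom in frac_coords]


def periodic_delta(a, b):
    """Minimum-image difference a - b between fractional coordinates, in [-0.5, 0.5)."""
    return wrap01(a - b + 0.5) - 0.5


coords = [[0.0, 0.5, 0.5], [0.98, 0.02, 0.25]]
noisy = corrupt_fractional_coords(coords, sigma=0.1, rng=random.Random(0))
print(noisy)
print(periodic_delta(0.95, 0.05))  # about -0.1: neighbours across the cell boundary
```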

Property Fine-Tuning:

- Inject adapter modules into base model layers

- Fine-tune on specialized datasets with property labels