Revolutionizing Discovery: High-Throughput Automated Platforms for Reaction Optimization

Abstract

This article explores the paradigm shift in chemical synthesis driven by high-throughput experimentation (HTE) and automation. Aimed at researchers, scientists, and drug development professionals, it covers the foundational principles of HTE, detailing core components like liquid handling systems and batch reactors. It examines methodological applications across various reaction types and the integration of machine learning for closed-loop optimization. The content also addresses critical troubleshooting for variability and data handling challenges, and concludes with a discussion on the validation frameworks and comparative analyses of different platforms and technologies, providing a comprehensive guide to leveraging automation for accelerated discovery.

The New Paradigm: Foundations of High-Throughput Automated Optimization

Defining High-Throughput Experimentation (HTE) in Modern Chemistry

High-Throughput Experimentation (HTE) represents a paradigm shift in chemical research, enabling the rapid and parallel execution of millions of chemical, genetic, or pharmacological tests through the integration of robotics, data processing software, liquid handling devices, and sensitive detectors [1]. This approach has become increasingly vital in modern chemistry and drug discovery, enabling researchers to quickly identify active compounds, antibodies, or genes that modulate specific biomolecular pathways [1]. The core value of HTE lies in its ability to explore vast experimental spaces that would be intractable using traditional one-factor-at-a-time approaches, dramatically accelerating the optimization of chemical reactions and the discovery of new reactivities [2] [3].

In contemporary research environments, HTE has evolved from specialized industrial applications to an accessible tool in academic settings, with platforms utilizing miniaturized reaction scales and automated robotic tools to execute numerous reactions in parallel [2] [4]. This transition has been facilitated by the development of more accessible hardware and software solutions that lower the barrier to implementation while maintaining the rigorous data quality required for scientific discovery [4] [3].

Key Technological Components of HTE Systems

Core Hardware Infrastructure

Modern HTE platforms incorporate sophisticated robotic systems and specialized labware to enable highly parallel experimentation. The essential hardware components include:

Microtiter Plates: These disposable plastic plates form the fundamental reaction vessels for HTE, featuring grids of small wells in standardized formats of 24, 96, 384, 1536, 3456, or 6144 wells [1] [3]. The original 96-well microplate established the standard with 8×12 well arrangements and 9 mm spacing [1].

Integrated Robotic Platforms: Automation systems transport assay microplates between specialized stations for sample and reagent addition, mixing, incubation, and detection [1]. These can include solid dispensers, liquid handlers with positive displacement pipetting for viscous liquids, capping/uncapping stations, on-deck magnetic stirrers with heating/cooling, vortex mixers, and centrifuges [5].

Environmental Control Systems: Dedicated configurations maintain appropriate reaction environments, including nitrogen purge boxes for general experiments and argon glove boxes for highly sensitive experiments [5].

Detection and Analysis Instruments: High-capacity analysis machines measure dozens of plates in minutes, generating thousands of data points through techniques including GC, GC-MS, HPLC, UPLC, UPLC-MS, and SFC [4].
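
The 8×12 arrangement and 9 mm spacing of the 96-well plate described above are what allow software to address wells by label. The following is a minimal sketch of that mapping, not the addressing scheme of any particular robot: the function names are hypothetical, indices are assumed to run row by row, and offsets are measured from the centre of well A1.

```python
import string

ROWS, COLS = 8, 12   # 96-well layout: rows A-H, columns 1-12
PITCH_MM = 9.0       # centre-to-centre well spacing stated in the text


def well_label(index: int) -> str:
    """Convert a 0-based, row-major well index (0..95) to a label such as 'A1'."""
    if not 0 <= index < ROWS * COLS:
        raise ValueError(f"index {index} is outside a {ROWS * COLS}-well plate")
    row, col = divmod(index, COLS)
    return f"{string.ascii_uppercase[row]}{col + 1}"


def well_offset_mm(label: str) -> tuple[float, float]:
    """Return the (x, y) offset in mm of a well centre from the centre of A1."""
    row = string.ascii_uppercase.index(label[0].upper())
    col = int(label[1:]) - 1
    if row >= ROWS or not 0 <= col < COLS:
        raise ValueError(f"{label} is not a valid 96-well position")
    return (col * PITCH_MM, row * PITCH_MM)


print(well_label(0), well_label(95))   # first and last wells
print(well_offset_mm("H12"))           # far corner relative to A1
```

Denser formats would need their own row count, column count, and pitch; those values are deliberately left out here because the text only specifies the 96-well geometry.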

Software and Data Management

Contemporary HTE workflows rely on specialized software solutions to manage the enormous organizational load associated with designing, executing, and analyzing high-throughput experiments. Platforms like phactor provide interfaces for rapidly designing arrays of chemical reactions in various wellplate formats, accessing online reagent data, generating liquid handling instructions, and facilitating experimental evaluation [3]. These systems store chemical data, metadata, and results in machine-readable formats that support translation to various software and enable machine learning applications [3].

Table 1: Essential HTE Hardware Components and Their Functions

| Component Category | Specific Examples | Function in HTE Workflow |

|---|---|---|

| Reaction Vessels | 96, 384, 1536-well microtiter plates | Miniaturized containers for parallel reaction execution at scales of 10-100 µL [4] [1] |

| Liquid Handling Systems | Positive displacement pipettes, liquid handlers | Accurate dispensing of reagents, especially viscous liquids/slurries [5] |

| Solid Dispensing | Powder dispensers, automated balances | Precise delivery of solid reagents and catalysts [5] |

| Environmental Control | On-deck magnetic stirrers with heating/cooling, inert atmosphere chambers | Maintaining optimal reaction conditions (temperature, mixing, atmosphere) [5] |

| Analysis Instrumentation | UPLC-MS, GC-MS, HPLC | High-throughput analysis of reaction outcomes [4] |

HTE Experimental Design and Workflow

The implementation of a successful HTE campaign requires meticulous planning and execution across multiple stages. The following diagram illustrates the core HTE workflow:

Experimental Design Phase

HTE experiments begin with careful planning of the reaction array design. Researchers must select appropriate combinations of variables such as catalysts, ligands, solvents, bases, and additives to explore the chemical space effectively [4]. Two primary approaches dominate this phase:

Traditional Factorial Designs: Chemists employ fractional factorial screening plates with grid-like structures that distill chemical intuition into plate design, exploring a limited subset of fixed combinations [2].

Machine Learning-Guided Design: Advanced approaches use Bayesian optimization and other ML techniques to balance exploration and exploitation of reaction spaces, identifying optimal conditions in fewer experimental cycles [2]. Algorithms like quasi-random Sobol sampling select initial experiments to maximize reaction space coverage, increasing the likelihood of discovering regions containing optima [2].
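
To make the idea of quasi-random initial sampling concrete, the sketch below picks a diverse first batch from a small categorical reaction space. It deliberately swaps in a Halton sequence for Sobol sampling, because Halton points can be generated with the standard library alone; both are low-discrepancy sequences, but this is not the sampler used in [2]. The factor names and reagent lists are placeholders.

```python
def radical_inverse(n: int, base: int) -> float:
    """Van der Corput radical inverse of n in the given base (a value in [0, 1))."""
    result, frac = 0.0, 1.0 / base
    while n > 0:
        n, digit = divmod(n, base)
        result += digit * frac
        frac /= base
    return result


PRIMES = (2, 3, 5, 7, 11, 13)  # one prime base per factor (Halton construction)


def halton_conditions(factors: dict[str, list[str]], n_samples: int) -> list[dict[str, str]]:
    """Pick n_samples distinct level combinations spread across the factor grid."""
    names = list(factors)
    if len(names) > len(PRIMES):
        raise ValueError("this sketch supports at most six factors")
    total = 1
    for levels in factors.values():
        total *= len(levels)
    if n_samples > total:
        raise ValueError("more samples requested than combinations available")

    picked, seen, i = [], set(), 1
    while len(picked) < n_samples:
        combo = []
        for dim, name in enumerate(names):
            levels = factors[name]
            # Map the quasi-random coordinate in [0, 1) onto a level index.
            idx = min(int(radical_inverse(i, PRIMES[dim]) * len(levels)), len(levels) - 1)
            combo.append(levels[idx])
        i += 1
        if tuple(combo) not in seen:        # skip combinations already chosen
            seen.add(tuple(combo))
            picked.append(dict(zip(names, combo)))
    return picked


# Placeholder reaction space: 4 ligands x 3 bases x 4 solvents = 48 combinations.
space = {
    "ligand": ["L1", "L2", "L3", "L4"],
    "base": ["K3PO4", "Cs2CO3", "DBU"],
    "solvent": ["dioxane", "toluene", "DMF", "NMP"],
}
for condition in halton_conditions(space, 6):
    print(condition)
```

In a setting where SciPy is available, a true Sobol generator could replace `radical_inverse`; the mapping from unit-cube coordinates to categorical levels would stay the same.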

Protocol: Standard HTE Reaction Setup for Reaction Optimization

Application: Optimization of catalytic reactions (e.g., Suzuki-Miyaura coupling, Buchwald-Hartwig amination)

Materials and Equipment:

- 96-well microtiter plates (glass inserts or plastic, depending on reaction compatibility)

- Automated liquid handling system (e.g., Opentrons OT-2, SPT Labtech mosquito) or multi-channel pipettes

- Solid dispensing system or powdered reagent stock plates

- Inert atmosphere chamber or glove box for air-sensitive reactions

- Reagent stock solutions prepared at standardized concentrations (typically 0.1-1.0 M in appropriate solvents)

Procedure:

Plate Layout Design: Define the experimental matrix using HTE software (e.g., phactor), assigning specific reagent combinations to each well according to the experimental design [3].

Stock Solution Preparation: Prepare master stock solutions of all substrates, catalysts, ligands, bases, and additives in appropriate solvents. Ensure concentrations account for final reaction volume and desired stoichiometries.

Reagent Transfer:

- Program automated liquid handler or manually transfer calculated volumes of each stock solution to designated wells using multi-channel pipettes.

- For solid reagents, use automated powder dispensers or pre-prepared stock plates.

- Maintain consistent total reaction volume across all wells (typically 10-100 µL) [4].

Reaction Initiation:

- For reactions requiring heating/cooling, seal plates with appropriate septum caps and transfer to thermal control units.

- Initiate reactions simultaneously through plate-wise agitation or thermal activation.

Quenching and Dilution:

- After predetermined reaction time, add quenching solution (e.g., acetonitrile with internal standard) to each well.

- Dilute samples appropriately for analytical analysis.

Analysis:

- Transfer aliquots to analysis plates using liquid handler.

- Analyze via UPLC-MS, GC-MS, or other high-throughput analytical methods.

- Export data for processing and visualization.

Quality Control Considerations:

- Include control reactions (positive, negative, background) in each plate

- Randomize well assignments to minimize positional effects

- Implement replication strategies to assess experimental variability
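
The three quality-control points above (controls, randomization, replication) can be combined in a single layout step. The sketch below is one possible way to do so, assuming a 96-well plate; the function name, control labels, and seed handling are illustrative choices rather than features of any specific HTE package.

```python
import random


def randomized_layout(conditions, n_replicates=2,
                      controls=("positive", "negative", "background"),
                      rows="ABCDEFGH", n_cols=12, seed=0):
    """Assign replicated conditions and controls to randomly chosen wells.

    Returns a dict mapping well label -> condition name. Wells that are not
    needed are simply left out of the dict (i.e. they stay empty).
    """
    wells = [f"{r}{c}" for r in rows for c in range(1, n_cols + 1)]
    entries = [c for c in conditions for _ in range(n_replicates)] + list(controls)
    if len(entries) > len(wells):
        raise ValueError("layout does not fit on the plate")
    rng = random.Random(seed)   # fixed seed so the layout can be regenerated
    rng.shuffle(wells)          # random positions reduce edge/position effects
    return dict(zip(wells, entries))


# 30 hypothetical conditions in triplicate plus 3 controls = 93 wells used.
layout = randomized_layout([f"cond_{i:02d}" for i in range(30)], n_replicates=3)
print(len(layout), "wells assigned")
```

Recording the seed alongside the plate metadata keeps the randomized layout reproducible when results are later linked back to wells.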

Data Analysis and Hit Selection in HTE

Statistical Methods for HTE Data Analysis

The analysis of HTE data requires specialized statistical approaches to distinguish meaningful signals from experimental noise. Several quality assessment measures have been developed to evaluate data quality and identify promising "hits":

Table 2: Statistical Measures for HTE Data Analysis and Hit Selection

| Statistical Measure | Formula/Calculation | Application Context | Advantages/Limitations |

|---|---|---|---|

| Z-Factor | 1 - (3σ₊ + 3σ₋)/\|μ₊ - μ₋\| | Assay quality assessment | Measures separation between positive and negative controls; values >0.5 indicate excellent assays [1] |

| Strictly Standardized Mean Difference (SSMD) | (μ₊ - μ₋)/√(σ₊² + σ₋²) | Data quality assessment and hit selection | More robust than Z-factor for assessing effect sizes; suitable for both replicates and no-replicate screens [1] |

| Z-Score Method | (x - μ)/σ | Primary screens without replicates | Simple implementation; assumes each compound has same variability as negative reference [1] |

| q-Expected Hypervolume Improvement (q-EHVI) | Complex multi-objective calculation | Bayesian optimization for multiple objectives | Identifies Pareto-optimal conditions; computationally intensive for large batch sizes [2] |
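
The first three measures in the table are simple enough to compute directly. The sketch below implements the formulas as written there, using sample standard deviations; the function names and the choice to pass the reference population explicitly to the z-score are assumptions made for illustration, and the control readings are invented.

```python
from statistics import mean, stdev


def z_factor(pos, neg):
    """Z-factor: 1 - (3*sd_pos + 3*sd_neg) / |mean_pos - mean_neg|."""
    return 1 - 3 * (stdev(pos) + stdev(neg)) / abs(mean(pos) - mean(neg))


def ssmd(pos, neg):
    """Strictly standardized mean difference for two independent groups."""
    return (mean(pos) - mean(neg)) / (stdev(pos) ** 2 + stdev(neg) ** 2) ** 0.5


def z_scores(values, reference):
    """Z-score of each value relative to the mean and SD of a reference set."""
    mu, sigma = mean(reference), stdev(reference)
    return [(x - mu) / sigma for x in values]


# Invented control readings (e.g. product area percent) for one plate.
positive = [90.0, 92.0, 88.0]
negative = [10.0, 12.0, 8.0]

print(f"Z-factor: {z_factor(positive, negative):.2f}")
print(f"SSMD:     {ssmd(positive, negative):.2f}")
print("z-scores:", z_scores([14.0, 50.0], negative))
```

With only a handful of control wells per plate the standard deviations are themselves noisy, so these values are best read as rough indicators rather than precise thresholds.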

Machine Learning Integration in Modern HTE

Contemporary HTE workflows increasingly incorporate machine learning to guide experimental design and optimization. Frameworks like Minerva demonstrate robust performance in handling large parallel batches, high-dimensional search spaces, reaction noise, and batch constraints present in real-world laboratories [2]. The typical ML-driven HTE workflow involves:

Initial Sampling: Algorithmic quasi-random Sobol sampling selects initial experiments to diversely cover the reaction condition space [2].

Model Training: Gaussian Process (GP) regressors train on initial experimental data to predict reaction outcomes and their uncertainties for all possible conditions [2].

Acquisition Function Evaluation: Functions balancing exploration and exploitation select the most promising next batch of experiments [2].

Iterative Refinement: The process repeats with new experimental data until convergence or experimental budget exhaustion [2].
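
For readers less familiar with the model-training and acquisition steps, the standard Gaussian Process predictive equations are reproduced below as a sketch. They assume a zero-mean prior, a kernel k, and Gaussian observation noise with variance \sigma_n^2. The upper-confidence-bound acquisition function in the last line is included only as a simple single-objective example of balancing exploration and exploitation; it is not claimed to be the function used in [2], and the trade-off weight \beta is an illustrative symbol.

```latex
% Training data: conditions X = (x_1, ..., x_n), observed outcomes y.
% K_{ij} = k(x_i, x_j), and (k_*)_i = k(x_i, x_*) for a candidate condition x_*.
\begin{align}
  \mu(x_*)      &= k_*^{\top} \left( K + \sigma_n^2 I \right)^{-1} y \\
  \sigma^2(x_*) &= k(x_*, x_*) - k_*^{\top} \left( K + \sigma_n^2 I \right)^{-1} k_* \\
  \alpha_{\mathrm{UCB}}(x_*) &= \mu(x_*) + \beta \, \sigma(x_*)
\end{align}
```

A large predicted mean rewards exploitation of conditions expected to perform well, while a large predictive standard deviation rewards exploration of conditions the model is unsure about.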

The integration of ML with HTE has demonstrated significant advantages over traditional approaches, successfully identifying optimal conditions for challenging transformations where chemist-designed plates failed [2].

Applications and Case Studies in Pharmaceutical Development

Protocol: HTE Campaign for Nickel-Catalyzed Suzuki Reaction Optimization

Background: Non-precious metal catalysis represents an important cost-saving and sustainable approach in pharmaceutical process chemistry. This protocol outlines an HTE campaign for optimizing a challenging nickel-catalyzed Suzuki reaction [2].

Experimental Design:

- Search Space: 88,000 possible reaction conditions exploring combinations of ligands, bases, solvents, and additives [2]

- Platform: 96-well HTE format with automated liquid handling

- Analysis: UPLC-MS for yield and selectivity determination

Procedure:

Initial Screening Plate:

- Design 96-condition plate using algorithmic Sobol sampling to maximize spatial coverage of parameter space

- Include diverse ligand classes (phosphines, N-heterocyclic carbenes, amines), bases (carbonates, phosphates, organic bases), and solvents (ethers, aromatics, amides)

- Fix nickel catalyst loading at 5 mol% and reaction temperature at 80°C

Machine Learning-Guided Optimization:

- Apply Minerva ML framework with q-NParEgo acquisition function for multi-objective optimization (maximizing both yield and selectivity)

- Train Gaussian Process regressor on initial data to predict outcomes across unexplored conditions

- Select subsequent 96-condition batches based on acquisition function values

Hit Validation:

- Shortlist the best-performing conditions by yield and selectivity (the best result reported for this campaign was 76% area percent yield with 92% selectivity)

- Scale up confirmed hits to mmol scale for verification

- Characterize isolated products for quality control

Results: The ML-driven approach identified conditions giving 76% area percent yield and 92% selectivity for this challenging transformation. Traditional experimentalist-driven plates, by contrast, failed to find successful conditions [2].
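
When yield and selectivity are optimized together, there is usually no single best condition but a set of non-dominated (Pareto-optimal) ones. The sketch below shows how such a set can be extracted from plate results; it is a plain post-hoc filter rather than the acquisition function used in the campaign, and the condition names and numbers are invented for illustration.

```python
def dominates(a, b):
    """True if result a is at least as good as b on every objective and better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(results):
    """Return the names of conditions not dominated by any other condition.

    results maps a condition name to a tuple of objectives to maximize,
    here (yield %, selectivity %).
    """
    return [
        name for name, score in results.items()
        if not any(dominates(other, score) for other in results.values())
    ]


# Invented results for four conditions.
plate_results = {
    "cond_1": (62.0, 90.0),
    "cond_2": (71.0, 84.0),
    "cond_3": (55.0, 88.0),   # worse than cond_1 on both objectives
    "cond_4": (40.0, 95.0),
}
print(pareto_front(plate_results))
```

Conditions on the front represent different trade-offs, so the final choice for scale-up still depends on which objective matters more for the process.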

Pharmaceutical Process Development Case Studies

HTE has demonstrated significant impact in accelerating pharmaceutical process development, as evidenced by these implemented case studies:

Table 3: HTE Success Stories in Pharmaceutical Process Chemistry

| Reaction Type | Challenge | HTE Approach | Outcome | Timeline Impact |

|---|---|---|---|---|

| Ni-catalyzed Suzuki Coupling | Earth-abundant catalyst alternative to Pd; unpredictable reactivity | 96-well HTE with ML guidance (Minerva framework) | Identified multiple conditions achieving >95% yield and selectivity | Improved process conditions identified in 4 weeks vs. previous 6-month campaign [2] |

| Pd-catalyzed Buchwald-Hartwig Amination | Optimization of multiple objectives (yield, selectivity, cost) | Data-driven reagent selection using z-score analysis of 66,000 historical HTE reactions | Optimal conditions differing from literature-based guidelines | High-quality starting points improving overall campaign efficiency [6] |

| Oxidative Indolization | Optimization of penultimate step in umifenovir synthesis | 24-well copper catalyst and ligand array using phactor software | Identified optimal copper bromide/ligand system providing 66% isolated yield | Accelerated identification of optimal conditions for key synthetic step [3] |

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of HTE requires careful selection of reagents and materials that enable reproducible, high-quality results. The following table details key solutions and their applications:

Table 4: Essential Research Reagent Solutions for HTE Implementation

| Reagent Category | Specific Examples | Function in HTE | Selection Considerations |

|---|---|---|---|

| Catalyst Systems | NiCl₂(glyme), Pd₂(dba)₃, CuI, RuPhos, SPhos, XantPhos | Enable key bond-forming transformations (cross-couplings, aminations) | Cost, stability, reactivity, compatibility with automation [2] [6] |

| Solvent Libraries | 1,4-dioxane, toluene, DMF, NMP, acetonitrile, DMSO | Reaction medium influencing solubility, reactivity, and outcome | Boiling point, safety profile, environmental impact, compatibility with detection methods [2] [1] |

| Base Arrays | K₃PO₄, Cs₂CO₃, KOAc, DBU, Et₃N | Facilitate reaction progression through acid neutralization or substrate activation | Solubility, basicity strength, nucleophilicity, safety considerations [2] [3] |

| Additive Sets | Ag salts, MgSO₄, phase-transfer catalysts, molecular sieves | Modify reactivity, remove impurities, or enable challenging transformations | Compatibility with other components, potential side reactions [3] |

| Internal Standards | Caffeine, anthracene, tetraphenylethylene | Enable quantitative analysis by correcting for injection variability and instrument drift | Chromatographic separation from reactants and products, stability [3] |

Advanced HTE Methodologies and Future Directions

Quantitative High-Throughput Screening (qHTS)

Recent advances in HTE methodology include the development of quantitative HTS (qHTS), which enables pharmacological profiling of large chemical libraries through generation of full concentration-response relationships for each compound [1]. This approach yields half maximal effective concentration (EC₅₀), maximal response, and Hill coefficient (nH) for entire libraries, enabling assessment of nascent structure-activity relationships (SAR) at an unprecedented scale [1].
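
The three parameters named above are typically obtained by fitting each compound's concentration-response data to a Hill-type model. One common four-parameter form is sketched below; the baseline term R_0 is an added assumption (it is often fixed at zero after normalization), and the exact parameterization used in a given qHTS pipeline may differ.

```latex
% R(c): response at concentration c
% R_0: baseline response, R_max: maximal response
% EC_50: half maximal effective concentration, n_H: Hill coefficient
\begin{equation}
  R(c) = R_0 + \frac{R_{\max} - R_0}{1 + \left( \dfrac{\mathrm{EC}_{50}}{c} \right)^{n_H}}
\end{equation}
```

At c = EC₅₀ the fraction equals one half, so the response sits midway between baseline and maximum, which is the defining property of the EC₅₀.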

Ultra-High-Throughput Experimentation

Emerging technologies are pushing the boundaries of HTE throughput and efficiency. Recent research demonstrates an HTS process allowing 100 million reactions in 10 hours at one-millionth the cost of conventional techniques using drop-based microfluidics [1]. In these systems, drops of fluid separated by oil replace microplate wells and allow analysis and hit sorting while reagents flow through channels, enabling analysis of 200,000 drops per second [1].

The integration of machine learning with advanced HTE platforms continues to evolve, with frameworks now capable of handling batch sizes of 96 experiments and high-dimensional search spaces of 530 dimensions [2]. These developments promise to further accelerate reaction discovery and optimization, particularly in pharmaceutical applications where rapid development is crucial and many reactions prove unsuccessful [2].

The continued innovation in HTE technologies, combined with sophisticated data analysis approaches, ensures that high-throughput experimentation will remain a cornerstone of modern chemical research, enabling scientists to navigate increasingly complex reaction landscapes and accelerate the discovery of novel chemical transformations.

High-Throughput Experimentation (HTE) represents a paradigm shift in chemical research, replacing traditional one-variable-at-a-time approaches with miniaturized, parallelized reaction execution. This methodology enables the rapid exploration of vast chemical spaces by conducting numerous experiments simultaneously, dramatically accelerating data generation for reaction optimization, compound library synthesis, and data collection for machine learning applications [7]. Modern automated HTE platforms integrate three core technological components—liquid handling, reactor systems, and analytical technologies—into a seamless workflow. This integration is crucial for uncovering optimal reaction conditions in pharmaceutical process development, where it must accommodate diverse reagents, solvents, and analytical methods while maintaining the highest standards of reproducibility and data integrity [7] [8]. The following sections detail these core components, their technical specifications, and practical protocols for their implementation in drug development research.

Core Component 1: Automated Liquid Handling Systems

Automated liquid handlers are foundational to HTE workflows, replacing manual pipetting with robotic precision to dispense specified volumes of liquids or samples into designated containers. These systems typically utilize motorized pipettes or syringes attached to robotic arms, with some advanced models incorporating additional devices such as heater-cooler plates to meet specific experimental requirements [9].

The primary function of these systems is to transfer reagents, catalysts, and solvents in precise quantities to reaction vessels, enabling the high-fidelity setup of complex experimental arrays. This automation brings four significant advantages to HTE workflows: a substantial reduction in human error, decreased human labor for repetitive tasks, minimized cross-contamination between samples, and the capacity for uninterrupted operation 24/7 [9]. This level of precision and efficiency is critical for generating the high-quality, reproducible data required for reliable reaction optimization and machine learning applications.

Automated liquid handling finds application across diverse domains, including Nucleic Acid Preparation, PCR Setup, Next Generation Sequencing (NGS) Library Sample Preparation, ELISA, Solid Phase Extraction (SPE), Liquid-Liquid Extraction (LLE), and liquid biopsy workflows [9]. In organic synthesis HTE, this technology must accommodate a wide range of solvent properties, including varying surface tensions and viscosities, while often maintaining an inert atmosphere to handle air-sensitive reagents [7].

Table 1: Key Specifications of Automated Liquid Handling Systems

| Feature | Standard Specifications | Application Notes |

|---|---|---|

| Throughput | 96-well, 384-well, 1536-well plates [7] | Ultra-HTE (1536 reactions) significantly accelerates data generation [7]. |

| Dispensing Technology | Motorized pipettes or syringes on robotic arms [9] | Ensures consistent and accurate liquid transfer. |

| Typical Deck Configuration | Customizable decks (e.g., 6 positions) [9] | Allows for placement of source plates, destination plates, tip boxes, and auxiliary modules. |

| Additional Capabilities | Grippers for plate movement, heater-cooler plates [9] | Enables complex, multi-step protocols and temperature control. |

| Key Benefit | Reduces human error and labor, increases reproducibility [9] [7] | Essential for generating robust data for AI/ML applications. |

Protocol: Automated Setup of a 96-Well Reaction Plate

This protocol details the automated setup of a 96-well plate for a catalyst screening study, utilizing an automated liquid handler.

Research Reagent Solutions & Materials:

Table 2: Essential Materials for HTE Plate Setup

| Item | Function | Example/Note |

|---|---|---|

| Automated Liquid Handler | Precisely dispenses liquids. | Aurora Biomed VERSA 10 or equivalent [9]. |

| 96-Well Reaction Plate | Miniaturized reaction vessel. | Must be chemically compatible with solvents/reagents. |

| Stock Solutions | Source of reaction components. | Prepared in appropriate solvents at specified concentrations. |

| Inert Atmosphere | Handles air-sensitive reagents. | Nitrogen/argon glovebox or sealed plates [7]. |

| HTE Software | Designs plate layout and controls robot. | Virscidian AS-Experiment Builder, Katalyst D2D [10] [8]. |

Procedure:

- Experiment Design: Using HTE software (e.g., Virscidian AS-Experiment Builder or ACD/Labs Katalyst), design the 96-well plate layout. Specify the chemicals and conditions for each well. The software can generate optimized layouts automatically or allow for manual customization, including gradient fills for varying concentrations [8].

- Instruction Generation: The software automatically generates sample preparation instructions, detailing the creation of stock solutions and providing volumes for equivalence, concentration, and volume calculations [8].

- System Setup: Load the required stock solutions, clean pipette tips, and the empty 96-well destination plate onto the designated deck positions of the liquid handler.

- Automated Dispensing: Execute the transfer protocol. The robotic arm follows the software-generated instructions, sequentially dispensing specified volumes of substrates, catalysts, ligands, and solvents from source vials into the designated wells of the 96-well plate.

- Sealing and Transfer: Once dispensing is complete, seal the plate to prevent evaporation and contamination. If reactions are air-sensitive, this step must be performed in an inert atmosphere glovebox. The sealed plate is then transferred to a parallel reactor system for initiation.
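
The instruction-generation step depends on converting equivalents and stock concentrations into dispense volumes. The following sketch shows that arithmetic for a single well, assuming every component is delivered as a stock solution and the well is topped up with neat solvent; the function name, component names, and numbers are hypothetical and do not reflect any vendor's software.

```python
def dispense_volumes_ul(limiting_umol, final_volume_ul, components):
    """Compute per-well dispense volumes in µL.

    components maps a name to (equivalents, stock concentration in mol/L).
    Since µmol divided by mol/L gives µL, no further unit conversion is needed.
    """
    volumes = {
        name: limiting_umol * equiv / stock_molar
        for name, (equiv, stock_molar) in components.items()
    }
    topup = final_volume_ul - sum(volumes.values())
    if topup < 0:
        raise ValueError("stock volumes exceed the final reaction volume; "
                         "use more concentrated stocks")
    volumes["solvent_topup"] = topup
    return volumes


# Hypothetical cross-coupling well: 5 µmol limiting substrate in 100 µL total.
well = dispense_volumes_ul(
    limiting_umol=5.0,
    final_volume_ul=100.0,
    components={
        "aryl_halide": (1.0, 0.5),     # 1.0 equiv from a 0.5 M stock
        "boronic_acid": (1.5, 0.5),    # 1.5 equiv from a 0.5 M stock
        "catalyst": (0.05, 0.05),      # 5 mol% from a 0.05 M stock
        "base": (2.0, 1.0),            # 2.0 equiv from a 1.0 M stock
    },
)
for name, volume in well.items():
    print(f"{name:>14}: {volume:6.1f} µL")
```

Checking that the top-up volume is non-negative catches stock concentrations that are too dilute before the liquid handler is ever programmed.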

Core Component 2: Laboratory Reactor Systems

Parallel laboratory reactor systems are the environment where the chemical transformations physically occur under controlled conditions. These systems consist of multiple miniature reactors (autoclaves) that operate simultaneously, providing excellent comparability and generating high-quality, scalable data [11] [12]. They are designed to be robust, modular, and easily expandable, offering valuable support for scaling up, process development, and extended catalyst testing [11].

HTE reactor platforms are highly versatile and can be configured for various reaction modes. Standard systems from companies like hte include batch reactor systems that are parallelized and highly automated, typically operating four to eight reactors [11] [12]. These are suitable for testing various chemical processes, including polymerization or the precipitation of materials such as battery materials [11]. Other specialized systems include those designed for high-throughput parallel screening of heterogeneous catalysts, micro downflow technology for fluid catalytic cracking (FCC) testing, and systems for the parallel screening of electrochemical cells [11].

A critical engineering challenge in parallel reactors is mitigating spatial bias, where wells at the edges versus the center of a plate experience different conditions, such as uneven stirring, temperature distribution, or, in photoredox chemistry, inconsistent light irradiation [7]. Advanced reactor systems are designed to minimize these effects, ensuring that results are reproducible and consistent across all wells in a single microtiter plate.
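
A quick way to screen for this kind of spatial bias is to run the same reaction in every well and compare the outer ring of wells with the interior. The sketch below does this for a 96-well plate; it is a coarse diagnostic under the assumption of a uniform test plate, the function name is hypothetical, and the readings are synthetic.

```python
from statistics import mean


def edge_vs_interior(plate, rows="ABCDEFGH", n_cols=12):
    """Return (mean of edge wells, mean of interior wells) for a uniform test plate.

    plate maps well labels such as 'A1' to a measured response.
    """
    edge, interior = [], []
    for well, value in plate.items():
        row, col = well[0], int(well[1:])
        on_edge = row in (rows[0], rows[-1]) or col in (1, n_cols)
        (edge if on_edge else interior).append(value)
    return mean(edge), mean(interior)


# Synthetic plate: interior wells read 50, outer-ring wells read 40.
synthetic = {}
for r in "ABCDEFGH":
    for c in range(1, 13):
        outer = r in "AH" or c in (1, 12)
        synthetic[f"{r}{c}"] = 40.0 if outer else 50.0

edge_mean, interior_mean = edge_vs_interior(synthetic)
print(f"edge: {edge_mean:.1f}  interior: {interior_mean:.1f}")
```

A persistent gap between the two means on a nominally identical plate suggests uneven heating, stirring, or irradiation, and is a reason to randomize well assignments or leave the outer ring unused.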

Table 3: Specifications of Parallel Laboratory Reactor Systems

| Reactor Type | Scale & Parallelization | Typical Operating Range | Common Applications |

|---|---|---|---|

| Batch Reactors [12] | 4 to 8 parallel reactors (e.g., 300 mL volume) | Wide pressure and temperature range | Hydrocycling, battery material synthesis, polymerization. |

| High-Throughput Screening Reactors [11] | Designed for parallel screening (e.g., 16 reactors) | Various reaction conditions | Optimization of heterogeneous catalysts. |

| Electrochemical Reactors [11] | Parallel screening of up to 16 electrochemical cells | Equipped with specific electrochemical analytics | Electrolysis, fuel cell research. |

Protocol: Execution of Parallel Reactions in a Batch Reactor System

This protocol describes the operation of an automated batch reactor system, such as an 8-fold system from hte, for a hydrocycling reaction optimization.

Research Reagent Solutions & Materials:

- Parallel Batch Reactor System: e.g., 8 parallel Hastelloy autoclaves [12].

- Pre-dispensed Reaction Plate: Prepared via automated liquid handling (see the protocol "Automated Setup of a 96-Well Reaction Plate" above).

- Inert Gas Supply: For maintaining pressure and an inert atmosphere.

Procedure:

- System Initialization: Power on the reactor control system and software. Purge the reactor lines with an inert gas to create an oxygen-free environment, which is crucial for air-sensitive catalysts.

- Plate Loading: Transfer the pre-dispensed and sealed 96-well reaction plate from the liquid handler into the batch reactor system.

- Parameter Setting: In the control software, set the desired reaction parameters for the campaign. This includes:

  - Temperature: Set a uniform temperature or a temperature gradient across different reactor blocks.

  - Pressure: Pressurize the system with the required gas (e.g., H₂ for hydrogenation).

  - Stirring Speed: Define the agitation rate to ensure efficient mixing in all wells.

  - Reaction Time: Set the duration for the experiment.

- Reaction Initiation and Monitoring: Start the reaction sequence. The system will automatically heat, pressurize, and stir the reactors. The software records parameters like temperature and pressure in real-time for each reactor.

- Reaction Quenching: After the set reaction time elapses, the system automatically cools the reactors to quench the reactions, typically to room temperature or lower.

- Sample Extraction: Once the reactors are safe to open, manually or robotically retrieve the reaction plate for analysis.

Core Component 3: Integrated Analytics and Data Management

The final core component of an automated HTE platform is the integrated analytics and data management system. This element transforms the physical reaction outcomes into actionable, digitized data. Modern HTE leverages advanced analytical techniques, primarily mass spectrometry (MS) and LC/UV/MS, coupled with automated data processing software to efficiently evaluate the large volumes of samples generated [10] [7]. The software is critical, as it must automatically process raw analytical data, link results back to the specific experimental conditions in each well, and present the data in an interpretable format for rapid decision-making [10] [8].

A significant challenge in HTE is that analytical data is often initially processed with sub-optimal methods, requiring scientists to spend 50% or more of their time on manual data reprocessing [10]. Integrated, chemically intelligent software solutions like ACD/Labs' Katalyst or Virscidian's Analytical Studio address this by automatically reading vendor data formats, processing the data, and displaying results in heat maps or other visualizations linked directly to the plate layout [10] [8]. This connection is vital; it ensures that the identity of every component in the experiment is stored, allowing for automatic targeted analysis of spectra and preventing the time-consuming and error-prone manual transcription of data [10] [8].

Furthermore, effective data management consistent with FAIR (Findable, Accessible, Interoperable, and Reusable) principles is key to establishing HTE's long-term utility [7]. The structured, high-quality data generated by integrated HTE workflows is ideal for data science and training machine learning algorithms, which can, in turn, guide future experimental design through Bayesian Optimization modules, creating a virtuous cycle of discovery and optimization [2] [10].

Protocol: Automated Analysis and Data Processing for HTE Plates

This protocol covers the automated processing of analytical data from an HTE plate and its integration with experimental metadata.

Research Reagent Solutions & Materials:

- Analytical Instrumentation: LC/UV/MS or MS system.

- HTE Data Processing Software: e.g., ACD/Labs Katalyst, Virscidian Analytical Studio [10] [8].

- Processed Reaction Plate: From the batch reactor system.

Procedure:

- Sample Injection: Using an autosampler, automatically inject samples from the 96-well reaction plate into the analytical instrument (e.g., LC/MS) for analysis.

- Data Acquisition: The instrument runs the samples and generates raw data files.

- Automated Data Sweeping and Processing: The HTE software (e.g., AS-Professional) automatically sweeps the raw data files from the network. It processes them using predefined methods, integrating peaks, identifying compounds via linked reaction schemes, and calculating metrics like percent conversion or yield [10] [8].

- Data Visualization and Review:

  - Well-Plate View: The primary results are displayed in a well-plate view, color-coded for quick assessment (e.g., green for successful product formation) [8].

  - Detailed Inspection: For any well, the scientist can drill down to view the total wavelength chromatogram (TWC) showing all detected compounds, color-coded by substance [8].

  - Charting: The software allows for the creation of custom plots and charts to extract trends from the data across the entire plate.

- Data Export for AI/ML: The structured and normalized experimental data—including reaction conditions, yields, and byproduct formation—is exported for use in AI/ML frameworks to build predictive models or guide the next round of experiments [10].
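
The central data-handling step in this procedure is linking each well's analytical result back to its reaction conditions and exporting the combined record. The sketch below illustrates that join in a vendor-neutral way; the dictionary layout, field names, and peak areas are assumptions made for illustration and do not represent the data model of Katalyst or Analytical Studio.

```python
import json


def area_percent(product_area, all_areas):
    """Product peak area as a percentage of the total integrated area."""
    total = sum(all_areas)
    return 100.0 * product_area / total if total else 0.0


def merge_results(layout, peaks):
    """Join plate layout (well -> conditions) with peak areas (well -> {compound: area})."""
    records = []
    for well in sorted(layout, key=lambda w: (w[0], int(w[1:]))):
        areas = peaks.get(well, {})   # wells without data yield 0.0 area percent
        pct = area_percent(areas.get("product", 0.0), areas.values())
        records.append({"well": well, **layout[well], "product_area_pct": round(pct, 1)})
    return records


# Hypothetical two-well example.
layout = {
    "A1": {"ligand": "L1", "base": "K3PO4", "solvent": "dioxane"},
    "A2": {"ligand": "L2", "base": "K3PO4", "solvent": "dioxane"},
}
peaks = {
    "A1": {"product": 80.0, "starting_material": 20.0},
    "A2": {"product": 10.0, "starting_material": 90.0},
}

records = merge_results(layout, peaks)
print(json.dumps(records, indent=2))   # machine-readable export for ML tooling
```

Area percent is not a calibrated yield; an internal standard and response factors would be needed to convert these values into quantitative yields before model training.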

Integrated Workflow: A Pharmaceutical Case Study

The power of an automated HTE platform is fully realized when its core components are integrated into a seamless, iterative workflow. A recent study published in Nature Communications exemplifies this, deploying a machine learning framework called "Minerva" to optimize a challenging Ni-catalyzed Suzuki coupling for pharmaceutical process development [2].

In this campaign, the workflow began with the ML algorithm using quasi-random Sobol sampling to select an initial batch of 96 reaction conditions from a vast space of 88,000 possibilities, ensuring diverse coverage of the parameter space [2]. An automated liquid handler was then used to dispense reagents accordingly into a 96-well plate. The parallel reactor system executed the reactions under the specified conditions of temperature, concentration, and solvent [2]. After quenching, integrated analytics, likely LC/UV/MS, provided rapid yield and selectivity data for all 96 reactions [2].

These data were fed back to the ML model, which used a Gaussian Process regressor to predict outcomes for all untested conditions. A scalable multi-objective acquisition function (such as q-NParEgo or TS-HVI) then balanced the goals of maximizing yield and selectivity while exploring uncertain regions, selecting the next most informative batch of 96 experiments [2]. This closed-loop cycle of ML-directed design, automated execution, and automated analysis was repeated for several iterations.

The result was the identification of conditions achieving >95% yield and selectivity for the API synthesis. Crucially, this ML-driven HTE approach successfully navigated a complex reaction landscape with unexpected chemical reactivity, outperforming traditional chemist-designed HTE plates and accelerating a process development timeline from an estimated 6 months down to just 4 weeks [2]. This case demonstrates how the integration of liquid handling, reactors, and analytics, when guided by machine intelligence, creates a transformative platform for accelerated research and development.

Batch vs. Flow: Choosing the Right HTE Platform for Your Application

High-Throughput Experimentation (HTE) has become a cornerstone in accelerating chemical research and development, particularly in pharmaceuticals. The choice between batch and flow platforms is pivotal, impacting scalability, parameter control, safety, and the types of chemistry accessible. This application note provides a structured comparison, detailed experimental protocols, and a decision-making framework to guide researchers in selecting the optimal HTE platform for their specific reaction optimization goals. By integrating current methodologies and data analysis techniques, we frame this choice within the broader context of developing fully automated, data-driven research platforms.

The drive towards automation in chemical synthesis has positioned HTE as an indispensable strategy for rapid reaction discovery and optimization. While traditional batch-based HTE in multi-well plates offers extensive parallelization for screening diverse chemical spaces, flow-based HTE provides superior control over continuous process variables and facilitates direct scale-up. This document delineates the capabilities, applications, and practical implementation of both platforms, empowering scientists to make informed decisions that align with their project objectives, whether for exploring vast reagent combinations or intensifying a specific chemical process.

Comparative Analysis: Batch vs. Flow HTE at a Glance

The decision between batch and flow HTE is multifaceted. The following table summarizes the core characteristics of each platform to provide a foundational comparison.

Table 1: Key Characteristics of Batch and Flow HTE Platforms

| Feature | Batch HTE | Flow HTE |

|---|---|---|

| Throughput Nature | High parallelization (96-, 384-well plates) [13] [14] | High serial throughput via process intensification; typically not parallelized [13] |

| Parameter Control | Limited for continuous variables (time, temperature) [13] | Precise, dynamic control of residence time, temperature, and pressure [13] [15] |

| Scale-up Translation | Often requires re-optimization due to changing heat/mass transfer [13] | Simplified scale-up by increasing runtime; consistent heat/mass transfer [13] [15] |

| Process Window | Limited by solvent boiling points and safety in microtiter plates [13] | Access to extreme T/P (high T, pressurized systems), and hazardous reagents [13] [15] |

| Ideal For | Screening vast arrays of substrates, catalysts, and reagents [16] | Optimizing continuous variables, hazardous chemistry, and photoelectrochemistry [13] [15] |

| Automation & Analysis | Robotic liquid handlers, analysis by DESI-MS or LC-MS [14] [17] | Integrated pumps, inline PAT (e.g., NMR, MS), and self-optimizing systems [18] [19] |

Detailed Experimental Protocols

Protocol 1: Batch HTE for Nucleophilic Aromatic Substitution (SNAr)

Application Note: This protocol, adapted from a published study, is designed for the rapid screening of amines and bases in an SNAr reaction using a liquid handling robot and Desorption Electrospray Ionization Mass Spectrometry (DESI-MS) for ultra-fast analysis [14].

Table 2: Key Research Reagent Solutions for SNAr Batch HTE

| Reagent / Material | Function | Specific Examples |

|---|---|---|

| Aryl Halides | Electrophilic substrate | Various substituted aryl fluorides and chlorides [14] |

| Amine Nucleophiles | Nucleophilic reactant | A set of 16 diverse primary and secondary amines [14] |

| Base | Scavenges acid, promotes reaction | DIPEA, NaOtBu, Triethylamine [14] |

| Polar Aprotic Solvent | Reaction medium | N-Methyl-2-pyrrolidone (NMP), 1,4-Dioxane [14] |

| DESI Spray Solvent | Ionization and analysis medium | MeOH, or MeOH with 1% Formic Acid [14] |

Procedure:

- Reaction Mixture Preparation: A Beckman-Coulter Biomek i7 liquid handling robot is used to prepare reaction mixtures in a glass-lined 96-well metal plate [14].

- Reagent Dispensing: For each well, combine 1 equivalent of aryl halide, 1 equivalent of amine, and 2.5 equivalents of base in 400 µL of solvent (e.g., NMP or 1,4-dioxane) [14].

- Incubation (Optional):

- Droplet/Thin-Film Reactions: Spot 50 nL of the reaction mixture onto a PTFE surface using a 384-format pin tool for immediate DESI-MS analysis at room temperature [14].

- Bulk Microtiter Reactions: Seal the plate and incubate at an elevated temperature (e.g., 150 °C) for a defined period (e.g., 15 hours) to promote reaction. After incubation, cool the plate and spot samples onto the PTFE surface [14].

- DESI-MS Analysis: Raster the DESI-MS inlet over the PTFE surface to analyze all spots, acquiring full mass spectra in positive ion mode at a rate of approximately 1 second per reaction [14].

- Data Processing: Analyze MS data using specialized software (e.g., in-house "CHRIS" software) to generate heat maps of product peak intensities, identifying successful reaction conditions [14].
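
The dispensing step above fixes the stoichiometry (1 : 1 : 2.5 equivalents in 400 µL) but not the stock concentrations. As a rough sketch of how a liquid-handler worklist could be derived, the following applies the dilution relation C1·V1 = C2·V2 per reagent. The 100 mM target concentration and the stock concentrations are assumptions chosen for illustration, not values from the cited study.

```python
def dispense_volumes(total_ul, substrate_mM, stocks_mM, equivalents):
    """Return per-reagent stock volumes (uL) plus make-up solvent for one well.

    total_ul     -- final well volume in uL
    substrate_mM -- target concentration of the limiting reagent (1 equiv)
    stocks_mM    -- {reagent: stock concentration in mM}
    equivalents  -- {reagent: equivalents relative to the limiting reagent}
    """
    volumes = {}
    for reagent, equiv in equivalents.items():
        # C1 * V1 = C2 * V2, with the target concentration scaled by equivalents
        volumes[reagent] = total_ul * substrate_mM * equiv / stocks_mM[reagent]
    solvent = total_ul - sum(volumes.values())
    if solvent < 0:
        raise ValueError("Stock solutions are too dilute for the requested volume")
    volumes["solvent"] = solvent
    return volumes


# Stoichiometry from the protocol; concentrations are hypothetical
equivalents = {"aryl_halide": 1.0, "amine": 1.0, "base": 2.5}
stocks_mM = {"aryl_halide": 400.0, "amine": 400.0, "base": 1000.0}
plan = dispense_volumes(400.0, 100.0, stocks_mM, equivalents)
print(plan)
```

With these assumed stocks, each reagent and the make-up solvent come to 100 µL per well.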

Protocol 2: Flow HTE for Photoredox Fluorodecarboxylation

Application Note: This protocol outlines the translation and scale-up of a photoredox reaction from batch HTE to a continuous flow system, demonstrating how flow chemistry addresses scale-up challenges and provides access to wider process windows [13].

Procedure:

- Initial Batch HTE Screening: Conduct preliminary screening in a 96-well plate photoreactor to identify optimal photocatalysts, bases, and fluorinating agents [13].

- Validation and DoE: Validate the best-performing conditions from HTE in a batch reactor. Further optimize using a Design of Experiments (DoE) approach to understand parameter interactions [13].

- Flow Reactor Setup: Assemble a flow system comprising two feed streams and a commercial or custom photoreactor (e.g., Vapourtec UV150) [13].

- Feed Solution A: Contains the carboxylic acid substrate and base.

- Feed Solution B: Contains the homogeneous photocatalyst and fluorinating agent.

- Process Intensification: Pump the two feeds through the photoreactor, systematically optimizing flow parameters:

- Residence Time: Controlled by adjusting the combined flow rate.

- Light Intensity: Adjusted on the photoreactor.

- Temperature: Controlled via a water bath or heating block.

- Scale-up: Once optimal conversion is achieved at a small scale (e.g., 2 g), increase the production scale by running the process continuously for a longer duration. This approach was used to demonstrate synthesis at the 100 g to kilogram scale [13].
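
Two relations underlie the intensification and scale-up steps above: residence time equals reactor volume divided by the combined flow rate, and the amount produced grows linearly with runtime at steady state. The sketch below works through both. The reactor volume, flow rates, concentration, molecular weight, and yield are illustrative assumptions, not values from [13].

```python
def residence_time_min(reactor_volume_ml, flow_rates_ml_min):
    """Residence time (min) = reactor volume / combined flow rate."""
    total_flow = sum(flow_rates_ml_min)
    if total_flow <= 0:
        raise ValueError("Combined flow rate must be positive")
    return reactor_volume_ml / total_flow


def throughput_g_per_h(flow_rates_ml_min, conc_mol_l, mw_g_mol, yield_fraction):
    """Steady-state product output; conc_mol_l refers to the combined stream."""
    litres_per_hour = sum(flow_rates_ml_min) * 60.0 / 1000.0
    return litres_per_hour * conc_mol_l * mw_g_mol * yield_fraction


def runtime_h(target_g, flow_rates_ml_min, conc_mol_l, mw_g_mol, yield_fraction):
    """Hours of continuous operation needed to collect target_g of product."""
    rate = throughput_g_per_h(flow_rates_ml_min, conc_mol_l, mw_g_mol, yield_fraction)
    return target_g / rate


# Hypothetical setup: 10 mL reactor, two feeds at 0.5 mL/min each
feeds = [0.5, 0.5]
tau = residence_time_min(10.0, feeds)            # 10 min
rate = throughput_g_per_h(feeds, 0.1, 200.0, 0.9)
hours = runtime_h(100.0, feeds, 0.1, 200.0, 0.9)
print(f"residence time {tau:.1f} min, {rate:.2f} g/h, {hours:.1f} h for 100 g")
```

Halving both feed rates doubles the residence time and halves the hourly output, which is the trade-off explored during process intensification.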

Protocol 3: Self-Driving Laboratory for Multi-Objective Optimization

Application Note: This protocol describes a workflow for a self-driving laboratory (SDL), which integrates automated flow chemistry platforms with real-time analytics and machine learning to autonomously optimize reactions, bridging the gap between batch and flow paradigms [18] [20].

Procedure:

- Platform Configuration: Utilize a modular SDL platform (e.g., RoboChem-Flex or Reac-Discovery) that integrates automated pumps, a flow reactor, and an inline benchtop NMR spectrometer for real-time monitoring [18] [20].

- Define Optimization Goals: Input the reaction and set the optimization objectives into the control software (e.g., maximize yield, maximize selectivity, or a weighted multi-objective function) [20].

- Autonomous Experimentation: The SDL executes the following closed-loop cycle:

- The machine learning algorithm (e.g., Bayesian optimization) selects a new set of reaction conditions (e.g., temperature, flow rates, concentration) [18] [20].

- The automated platform configures the reactor and executes the reaction under the selected conditions.

- The inline NMR spectrometer analyzes the effluent stream in real-time, providing yield and conversion data to the software [18] [19].

- The ML model is updated with the new result, and the cycle repeats until the optimization goal is met or the experimental budget is exhausted [18] [20].
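
The select, execute, analyze, update cycle above can be sketched as a short loop. This is a toy under stated assumptions: `simulated_yield` is a made-up response surface standing in for the reactor and inline NMR, and the proposal step is a simple local random search used in place of Bayesian optimization so that the example needs no external libraries. All names, bounds, and the target are hypothetical.

```python
import random


def simulated_yield(temp_c, residence_min):
    """Stand-in for reactor plus inline analytics; peaks at 80 deg C and 12 min."""
    penalty = ((temp_c - 80.0) / 60.0) ** 2 + ((residence_min - 12.0) / 15.0) ** 2
    return 100.0 * max(0.0, 1.0 - penalty)


def propose(best, bounds, rng, step):
    """Propose conditions near the current best (local search, NOT Bayesian optimization)."""
    return tuple(
        min(hi, max(lo, x + rng.uniform(-step, step) * (hi - lo)))
        for x, (lo, hi) in zip(best, bounds)
    )


def closed_loop(budget=40, target=95.0, seed=0):
    rng = random.Random(seed)
    bounds = [(20.0, 140.0), (1.0, 30.0)]  # temperature (deg C), residence time (min)
    best = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
    best_y = simulated_yield(*best)
    history = [(best, best_y)]
    # Stop when the goal is met or the experimental budget is exhausted
    while len(history) < budget and best_y < target:
        candidate = propose(best, bounds, rng, step=0.2)  # select conditions
        y = simulated_yield(*candidate)                   # execute and analyze
        history.append((candidate, y))
        if y > best_y:                                    # update with the new result
            best, best_y = candidate, y
    return best, best_y, history


best, best_y, history = closed_loop()
print(f"{len(history)} experiments, best yield {best_y:.1f}% at {best}")
```

In a real SDL the `propose` step would be replaced by a surrogate model with an acquisition function, and `simulated_yield` by calls to the platform's control and analytics software.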

Workflow and Decision Pathways

The following diagram illustrates the strategic decision-making process for selecting between batch and flow HTE, based on the primary goal of the research campaign.
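
As a rough companion to that decision process, the function below encodes the selection logic implied by Table 1. It is a simplified sketch distilled from the table, not a reproduction of the diagram; the function name, argument names, and return labels are hypothetical.

```python
def recommend_platform(screen_discrete, tune_continuous=False,
                       hazardous_or_extreme=False, direct_scale_up=False):
    """Suggest an HTE platform from the primary campaign goals.

    screen_discrete      -- many substrates, catalysts, or reagents to compare
    tune_continuous      -- residence time, temperature, or pressure to optimize
    hazardous_or_extreme -- hazardous reagents or conditions beyond plate limits
    direct_scale_up      -- result must translate directly to larger scale
    """
    flow_drivers = tune_continuous or hazardous_or_extreme or direct_scale_up
    if screen_discrete and flow_drivers:
        # Mirrors Protocol 2: screen in plates first, then translate to flow
        return "batch screen, then flow"
    if flow_drivers:
        return "flow"
    if screen_discrete:
        return "batch"
    return "either"


print(recommend_platform(screen_discrete=True))
print(recommend_platform(screen_discrete=False, tune_continuous=True))
print(recommend_platform(screen_discrete=True, direct_scale_up=True))
```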

The Scientist's Toolkit: Essential Research Reagent Solutions

Beyond platform hardware, success in HTE relies on a suite of analytical and data analysis tools.

Table 3: Essential Tools for Modern HTE Workflows

| Tool Category | Specific Technology | Function in HTE |

|---|---|---|

| High-Throughput Analytics | DESI-MS [14] | Ultra-fast analysis (~1 sec/sample) for batch HTE reaction mixtures. |

| High-Throughput Analytics | Inline Benchtop NMR [18] [19] | Real-time, non-destructive monitoring for flow and SDL platforms. |

| Automation & Robotics | Liquid Handling Robots [14] [17] | Automated preparation of batch HTE reactions in microtiter plates. |

| Automation & Robotics | Automated Synthesis Workstations [19] | Fully automated platforms for parallel reaction execution and sampling. |

| Data Analysis Software | HiTEA (High-Throughput Experimentation Analyzer) [16] | Statistical framework (Random Forest, Z-score) to extract insights from large HTE datasets. |

| Data Analysis Software | Chrom Reaction Optimization [21] | Automated software for processing and reporting large chromatography datasets from HTE. |

| Advanced Fabrication | High-Resolution 3D Printing [18] | Fabrication of custom flow reactors with optimized periodic open-cell structures (POCS) for enhanced mass/heat transfer. |

The choice between batch and flow HTE is not a question of which is universally superior, but which is contextually appropriate. Batch HTE excels in the parallel exploration of discrete chemical variables across a vast space, while flow HTE provides unparalleled control over continuous process parameters and facilitates direct, efficient scale-up. The emerging paradigm of self-driving laboratories, which leverage machine learning and real-time analytics, begins to unify these approaches. By applying the decision framework and protocols outlined in this application note, researchers can strategically deploy these powerful platforms to accelerate reaction optimization within their automated research workflows.

The Role of Machine Learning and AI in Guiding Experimental Design

The integration of machine learning (ML) and artificial intelligence (AI) with high-throughput automated platforms is revolutionizing experimental design in chemical synthesis. This paradigm shift addresses the resource-intensive challenge of optimizing chemical reactions, which traditionally relies on chemical intuition and one-factor-at-a-time (OFAT) approaches [2] [22]. Modern ML-driven workflows now enable autonomous navigation of high-dimensional parameter spaces, dramatically accelerating the identification of optimal reaction conditions for objectives such as yield and selectivity [2]. These approaches are particularly crucial in pharmaceutical process development, where stringent economic, environmental, health, and safety considerations must be met [2]. The synergy between machine intelligence and laboratory automation creates a powerful framework for self-driving laboratories, marking a significant advancement over traditional methods [23] [24].

Machine Learning Frameworks and Algorithms

Core Machine Learning Categories in Experimental Design

The application of ML in experimental design spans several learning paradigms, each with distinct capabilities [25].

- Supervised Learning: Used for predicting reaction outcomes when historical data with labeled inputs and outputs are available. Common algorithms include Support Vector Machines and Decision Trees, applied to tasks like reactivity prediction and chemical reaction classification [25].

- Unsupervised Learning: Employed to infer inherent structures in experimental data without pre-existing labels. Techniques such as K-means clustering and Gaussian mixture models are valuable for information extraction and molecular simulation [25].

- Reinforcement Learning: Enables autonomous systems to learn optimal behavior through trial-and-error interactions with the experimental environment. This approach is particularly useful for synthetic route planning and robotic control [25].

- Bayesian Optimization: A powerful strategy for reaction optimization that uses probabilistic models to balance exploration of unknown parameter spaces with exploitation of promising regions [2]. It efficiently handles the complex, multi-dimensional landscapes typical of chemical reactions.

Advanced Learning Methods

Several advanced ML methods have shown significant promise in experimental design applications:

- Deep Learning: Utilizing architectures like graph convolutional neural networks, deep learning models achieve state-of-the-art performance in property prediction and catalyst design by learning hierarchical representations of chemical data [25].

- Transfer Learning: Enables knowledge gained from source tasks to be applied to new target tasks with different domains or data distributions, addressing data scarcity challenges [25].

- Active Learning: Strategically selects the most informative experiments to label and train on, maximizing model performance while minimizing experimental effort [25].

Table 1: Machine Learning Algorithms and Their Applications in Experimental Design

| ML Category | Specific Algorithms | Application Examples |

|---|---|---|

| Supervised Learning | Support Vector Machine, Decision Trees, Multivariate Linear Regression | Reactivity prediction, Chemical reaction classification [25] |

| Unsupervised Learning | K-means, X-means, Gaussian Mixture Model | Information extraction, Molecular simulation [25] |

| Reinforcement Learning | Q-learning, Temporal Difference, Policy Gradient | Robotic control, Synthetic route planning [25] |

| Bayesian Optimization | Gaussian Process with q-EHVI, q-NParEgo, TS-HVI | Multi-objective reaction optimization [2] |

| Deep Learning | Graph Neural Networks, Convolutional Neural Networks | Property prediction, Catalyst design [25] |

Implementation in High-Throughput Experimentation

Integration with Automated Workflows

The fusion of ML with High-Throughput Experimentation (HTE) has created a powerful paradigm for reaction optimization [2] [7]. HTE involves miniaturized, parallelized reactions that enable rapid exploration of multivariable experimental spaces [7]. ML algorithms enhance this capability by guiding the design of HTE campaigns to focus on the most promising regions of the chemical landscape. This integration is exemplified by platforms like Minerva, which combines Bayesian optimization with 96-well HTE systems to efficiently navigate complex reaction spaces containing thousands of potential conditions [2]. Such systems can autonomously handle various reaction parameters including catalysts, ligands, solvents, and temperatures, while automatically filtering out impractical or unsafe combinations [2].
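
To make the idea of a filtered condition space concrete, the sketch below enumerates a small categorical-by-temperature grid and drops any condition whose temperature exceeds the solvent's boiling point. It is an illustration only, not the Minerva implementation; the ligand labels, bases, temperature levels, and function name are hypothetical, and the boiling points are approximate literature values.

```python
from itertools import product

# Approximate normal boiling points in deg C
BOILING_POINT_C = {"THF": 66, "toluene": 111, "DMAc": 165}


def build_search_space(ligands, solvents, bases, temperatures_c, margin_c=0):
    """Enumerate all combinations, excluding temperatures above the solvent boiling point."""
    space = []
    for ligand, solvent, base, temp in product(ligands, solvents, bases, temperatures_c):
        if temp > BOILING_POINT_C[solvent] - margin_c:
            continue  # impractical in an open or lightly sealed plate
        space.append(
            {"ligand": ligand, "solvent": solvent, "base": base, "temperature_c": temp}
        )
    return space


space = build_search_space(
    ligands=["L1", "L2", "L3"],          # placeholder ligand labels
    solvents=["THF", "toluene", "DMAc"],
    bases=["K3PO4", "Cs2CO3"],
    temperatures_c=[60, 80, 100, 120],
)
print(f"{len(space)} of {3 * 3 * 2 * 4} combinations remain after filtering")
```

Here 48 of the 72 raw combinations survive; the same pattern extends to other exclusion rules, such as incompatible reagent pairs.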

Multi-Objective Optimization

Real-world reaction optimization often involves balancing multiple competing objectives such as yield, selectivity, cost, and safety [2]. ML frameworks address this challenge through scalable multi-objective acquisition functions including q-NParEgo, Thompson sampling with hypervolume improvement (TS-HVI), and q-Noisy Expected Hypervolume Improvement (q-NEHVI) [2]. These algorithms enable simultaneous optimization of multiple reaction objectives across large batch sizes, with performance quantifiable using metrics like the hypervolume indicator, which calculates the volume of objective space dominated by the identified conditions [2].
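
For two maximized objectives, the hypervolume indicator reduces to the area dominated by the Pareto front relative to a reference point. The following sketch computes it directly. The data points are hypothetical (yield, selectivity) pairs, and the reference point (0, 0) is an assumption; production frameworks use general-dimension algorithms rather than this two-objective shortcut.

```python
def pareto_front(points):
    """Return the non-dominated points for two maximized objectives, sorted by x descending."""
    front = []
    for p in points:
        dominated = any(q[0] >= p[0] and q[1] >= p[1] and q != p for q in points)
        if not dominated:
            front.append(p)
    return sorted(set(front), key=lambda p: p[0], reverse=True)


def hypervolume_2d(points, ref=(0.0, 0.0)):
    """Area dominated by the Pareto front relative to ref (both objectives maximized)."""
    area, prev_y = 0.0, ref[1]
    # Along the front, x decreases while y increases, so each point adds one strip
    for x, y in pareto_front(points):
        if x <= ref[0] or y <= prev_y:
            continue
        area += (x - ref[0]) * (y - prev_y)
        prev_y = y
    return area


# Hypothetical (yield %, selectivity %) results from one campaign
results = [(76.0, 92.0), (60.0, 95.0), (80.0, 70.0), (50.0, 50.0)]
print(pareto_front(results))
print(hypervolume_2d(results))
```

A larger hypervolume means the identified conditions cover more of the yield-selectivity trade-off, which is why the metric is convenient for comparing optimization strategies across iterations.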

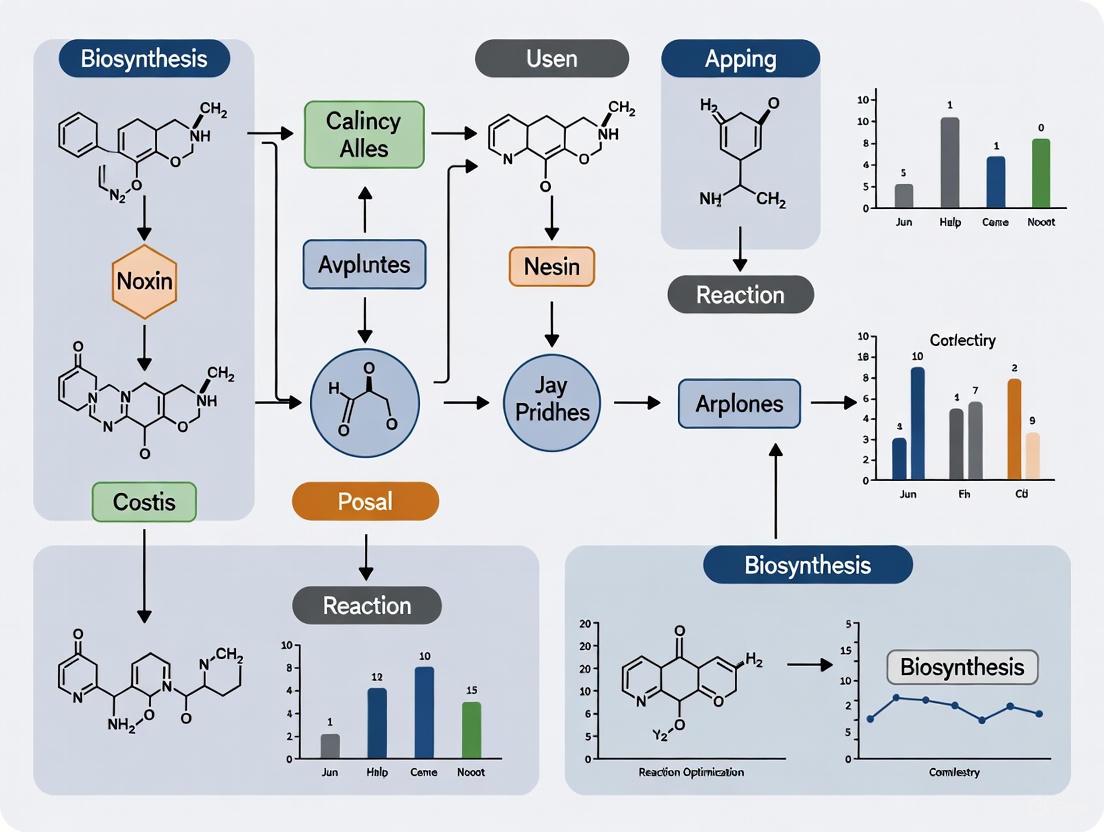

Figure 1: ML-Driven Workflow for Reaction Optimization. This diagram illustrates the iterative cycle of machine learning-guided high-throughput experimentation.

Case Studies and Experimental Protocols

Pharmaceutical Process Development

Ni-Catalyzed Suzuki Coupling Optimization

Background: The optimization of nickel-catalyzed Suzuki reactions presents challenges in non-precious metal catalysis, with traditional experimentalist-driven methods often failing to identify successful conditions [2].

Experimental Protocol:

- Reaction Setup: Conduct reactions in 96-well plate format with automated liquid handling systems.

- Parameter Space: Define an 88,000-condition search space encompassing variations in catalysts, ligands, bases, solvents, concentrations, and temperatures.

- Initial Sampling: Employ Sobol sampling for the first batch of 96 reactions to maximize initial coverage of the reaction space [2].

- ML-Guided Optimization: Implement the Minerva framework with q-NParEgo acquisition function for 5 iterative cycles [2].

- Analysis: Quantify yield and selectivity using HPLC with area percent (AP) measurements.

Results: The ML-driven approach identified conditions achieving 76% AP yield and 92% selectivity, outperforming traditional chemist-designed HTE plates which failed to find successful conditions [2].

API Synthesis Optimization

Background: Pharmaceutical process development requires rapid identification of optimal conditions for Active Pharmaceutical Ingredient (API) syntheses with rigorous purity requirements [2].

Experimental Protocol:

- Platform Configuration: Utilize a self-driving lab platform integrating liquid handling stations, robotic arms, and analytical instruments (e.g., UV-vis spectroscopy, UPLC-ESI-MS) [24].

- Multi-Objective Optimization: Simultaneously maximize yield and selectivity while maintaining >95% AP purity thresholds.

- Algorithm Selection: Employ Bayesian optimization with a Matérn kernel after evaluating over 10,000 simulated campaigns to identify the most efficient algorithm [24].

- Validation: Execute autonomous optimization campaigns for multiple enzyme-substrate pairings in a 5-dimensional design space (pH, temperature, cosubstrate concentration, etc.) [24].

Results: The ML framework identified multiple conditions achieving >95% AP yield and selectivity for both Ni-catalyzed Suzuki coupling and Pd-catalyzed Buchwald-Hartwig reactions, reducing process development time from 6 months to 4 weeks in one case [2].

Table 2: Performance Metrics from Pharmaceutical Case Studies

| Reaction Type | Key Objectives | Traditional Approach | ML-Guided Approach | Time Savings |

|---|---|---|---|---|

| Ni-Catalyzed Suzuki Reaction | Yield, Selectivity | Failed to find successful conditions [2] | 76% AP yield, 92% selectivity [2] | Not quantified |

| API Synthesis (Case 1) | >95% AP yield and selectivity | ~6 months development [2] | Multiple optimal conditions identified [2] | ~80% reduction (6 months to 4 weeks) [2] |

| Enzymatic Biocatalysis | Maximum enzyme activity | Labor-intensive, time-consuming [24] | Accelerated optimization in 5D parameter space [24] | Significant reduction reported [24] |

LLM-Driven Synthesis Development

Background: Large Language Models (LLMs) have recently emerged as powerful tools for end-to-end chemical synthesis development, facilitating multiple stages of experimental design [26].

Experimental Protocol:

- Framework Setup: Implement an LLM-based reaction development framework (LLM-RDF) with specialized agents (Literature Scouter, Experiment Designer, Hardware Executor, Spectrum Analyzer, Separation Instructor, Result Interpreter) [26].

- Literature Mining: Use the Literature Scouter agent with retrieval-augmented generation (RAG) to search academic databases and extract relevant synthetic methodologies [26].

- Experimental Design: Employ the Experiment Designer agent to formulate high-throughput screening plans for substrate scope and condition screening.

- Automated Execution: Utilize the Hardware Executor to translate experimental designs into automated operations on HTE platforms.

- Data Analysis: Apply Spectrum Analyzer and Result Interpreter agents to process analytical data and draw conclusions.

Results: The LLM-RDF successfully guided the end-to-end development of copper/TEMPO-catalyzed aerobic alcohol oxidation, including literature search, condition screening, kinetic studies, optimization, and scale-up, demonstrating versatility across distinct reaction types [26].

The Researcher's Toolkit

Essential Research Reagent Solutions

Table 3: Key Reagents and Materials for ML-Guided Reaction Optimization

| Reagent/Material | Function | Application Examples |

|---|---|---|

| Nickel Catalysts | Earth-abundant alternative to precious metal catalysts | Suzuki coupling reactions [2] |

| Ligand Libraries | Modulate catalyst activity and selectivity | Screening in cross-coupling reactions [2] |

| Enzyme Substrates | Target molecules for biocatalytic optimization | Pharmaceutical synthesis, biotransformations [24] |

| TEMPO Catalyst | Mediator in oxidation reactions | Aerobic alcohol oxidation to aldehydes [26] |

| Palladium Catalysts | Facilitate cross-coupling reactions | Buchwald-Hartwig amination [2] |

Laboratory Automation Components

Table 4: Essential Hardware and Software Components

| Component | Function | Implementation Examples |

|---|---|---|

| Liquid Handling Station | Automated pipetting, heating, shaking | Opentrons Flex [24] |

| Robotic Arm | Transport and arrangement of labware | Universal Robots UR5e [24] |

| Plate Reader | Spectroscopic analysis | Tecan Spark [24] |

| UPLC-ESI-MS | Highly sensitive detection and characterization | Sciex X500-R [24] |

| Python Framework | Backend control and integration | Modular SDL software [24] |

| Electronic Lab Notebook | Experimental documentation and metadata management | eLabFTW [24] |

Experimental Workflows and Protocols

Standard ML-Guided Reaction Optimization Protocol

Objective: To optimize chemical reactions for multiple objectives using machine learning guidance.

Materials and Equipment:

- Automated liquid handling station (e.g., Opentrons Flex)

- Robotic arm with adaptive gripper (e.g., Universal Robots UR5e)

- Multimode plate reader (e.g., Tecan Spark)

- HPLC or UPLC system for analysis

- 96-well reaction plates

- Appropriate chemical reagents, solvents, and catalysts

Procedure:

- Define Search Space:

- Identify key reaction variables (catalyst, ligand, solvent, temperature, concentration, etc.)

- Establish practical constraints (e.g., solvent boiling points, incompatible combinations)

- Define optimization objectives (yield, selectivity, cost, etc.)

- Initial Experimental Design:

- Implement Sobol sampling to select an initial batch of 24-96 reactions

- Ensure diverse coverage of the parameter space

- Program liquid handling station for reagent addition

- Reaction Execution:

- Execute reactions in parallel using automated platforms

- Maintain appropriate environmental controls (temperature, atmosphere)

- Monitor reaction progress as needed

- Analysis and Data Collection:

- Quench reactions automatically if required

- Perform analytical measurements (GC, HPLC, MS, or UV-vis)

- Record yields, selectivity, and other relevant metrics

- Machine Learning Cycle:

- Train Gaussian Process regressor on collected data

- Apply acquisition function (q-NEHVI, q-NParEgo, or TS-HVI) to select next experiments

- Repeat Reaction Execution, Analysis and Data Collection, and the Machine Learning Cycle for 3-8 cycles or until convergence

- Validation:

- Manually verify optimal conditions identified by ML

- Scale up promising reactions

- Document all results in electronic laboratory notebook

Figure 2: Multi-Agent LLM Framework for Synthesis Development. This architecture shows how specialized LLM agents coordinate with external tools to guide experimental design.

The integration of machine learning and artificial intelligence with high-throughput automated platforms is a significant advance in experimental design for chemical synthesis. ML-driven approaches, including Bayesian optimization, deep learning, and LLM-based frameworks, have been shown to navigate complex, high-dimensional reaction spaces efficiently. In the case studies above, these methods outperformed traditional experimentation by optimizing multiple objectives simultaneously, handling categorical variables, and, in one reported case, reducing a development timeline from months to weeks. The emerging paradigm of self-driving laboratories, which combines these algorithms with comprehensive automation, is positioned to accelerate discovery across pharmaceutical development, materials science, and chemical manufacturing. As these technologies evolve, their value will depend on how well machine intelligence is combined with human chemical expertise.

For decades, the one-factor-at-a-time (OFAT) approach served as the default methodology for chemical reaction optimization across pharmaceutical development and synthetic chemistry. This method involves systematically varying a single experimental factor while holding all others constant, which appeals to researchers through its straightforward implementation and interpretation [27] [28]. However, this traditional approach contains fundamental limitations that become critically problematic when optimizing complex chemical reactions with interacting parameters. OFAT methodologies cannot detect interactions between factors, often miss optimal conditions, and require extensive experimental runs for equivalent precision compared to modern multidimensional approaches [28] [29].

The emergence of high-throughput experimentation (HTE) platforms has catalyzed a fundamental shift from these traditional linear methodologies toward multidimensional search strategies [30] [2]. Automated HTE systems enable the highly parallel execution of numerous reactions, exploring vast chemical spaces through miniaturized reaction scales and robotic instrumentation [2] [31]. This technological foundation, combined with advanced algorithmic optimization approaches, allows researchers to efficiently navigate complex reaction landscapes that would be intractable using OFAT methodologies [2].

Table 1: Core Limitations of OFAT versus Advantages of Multidimensional Approaches

| Aspect | OFAT approach | Multidimensional approaches |

|---|---|---|

| Factor Interactions | Cannot detect interactions between parameters [28] | Identifies and quantifies parameter interactions [29] |

| Experimental Efficiency | Requires more runs for equivalent precision [28] | Fewer experiments needed to identify optimal conditions [2] [29] |

| Optimal Condition Identification | Can miss optimal settings due to factor dependencies [28] | Greater probability of finding global optimum in complex landscapes [2] |

| Implementation Complexity | Simple to implement and interpret [27] [28] | Requires specialized software and statistical knowledge [2] [31] |

| Scalability | Becomes impractical for high-dimensional spaces [2] | Efficiently navigates spaces with dozens to hundreds of dimensions [2] |
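
The first and third rows of Table 1 can be illustrated with a small worked example. The response table below is entirely hypothetical: two factors with three levels each and a strong interaction, so the best level of one factor depends on the level of the other. OFAT, starting from the baseline (0, 0), settles on a local optimum, while the full grid finds the global one.

```python
from itertools import product

# Hypothetical yields (%) indexed by (level of factor A, level of factor B)
RESPONSE = {
    (0, 0): 50, (0, 1): 55, (0, 2): 40,
    (1, 0): 60, (1, 1): 45, (1, 2): 50,
    (2, 0): 45, (2, 1): 50, (2, 2): 90,
}
LEVELS = [(0, 1, 2), (0, 1, 2)]


def ofat(response, levels, start):
    """Optimize one factor at a time, holding the others at their current values."""
    current = list(start)
    for factor, factor_levels in enumerate(levels):
        def score(level):
            trial = current.copy()
            trial[factor] = level
            return response[tuple(trial)]
        current[factor] = max(factor_levels, key=score)
    return tuple(current), response[tuple(current)]


def full_grid(response, levels):
    """Evaluate every combination of levels and return the best one."""
    best = max(product(*levels), key=lambda combo: response[combo])
    return best, response[best]


print("OFAT:     ", ofat(RESPONSE, LEVELS, start=(0, 0)))
print("Full grid:", full_grid(RESPONSE, LEVELS))
```

OFAT stops at (1, 0) with 60% because the high-yield region at (2, 2) is only reachable by changing both factors together. A full grid is used here only for clarity; designed experiments and model-guided search aim to find such regions without evaluating every combination.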

Modern Methodologies: Integrating HTE with Advanced Optimization

High-Throughput Experimentation Platforms

Contemporary HTE platforms provide the technical foundation for implementing multidimensional optimization strategies. These automated intelligent systems offer unique advantages of low consumption, high efficiency, high reproducibility, and good versatility [30]. Modern HTE workflows, facilitated by software solutions like phactor, enable researchers to rapidly design arrays of chemical reactions in 24-, 96-, 384-, or 1,536-well plates, accessing online reagent data and producing instructions for manual execution or robotic liquid handling [31]. This automation significantly reduces the organizational load and time required between experiment ideation and result interpretation, transforming HTE from a logistical challenge to a creative tool for reaction discovery [31].

The iChemFoundry platform exemplifies next-generation HTE systems, integrating automated high-throughput chemical synthesis with sample treatment and characterization techniques [30]. Such platforms provide the essential infrastructure for implementing machine learning-driven optimization, generating the standardized, machine-readable data required for training predictive models [30] [31].

Machine Learning-Driven Optimization

Machine learning (ML) frameworks represent the cutting edge in multidimensional optimization, efficiently handling large parallel batches, high-dimensional search spaces, and reaction noise present in real-world laboratories [2]. The Minerva ML framework demonstrates robust performance in highly parallel multi-objective reaction optimization, employing Bayesian optimization with Gaussian Process regressors to predict reaction outcomes and their uncertainties across vast condition spaces [2].

These ML approaches fundamentally differ from OFAT by simultaneously exploring multiple parameters through an exploration-exploitation balance. After initial quasi-random Sobol sampling to maximize reaction space coverage, the algorithm uses an acquisition function to select the most promising next batch of experiments based on predicted outcomes and uncertainties [2]. This strategy has proven exceptionally effective, identifying optimal conditions for challenging transformations like nickel-catalyzed Suzuki couplings where traditional chemist-designed HTE plates failed [2].
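
The initial space-filling step can be sketched without external dependencies. Note the substitution: the code below generates a Halton sequence, a different low-discrepancy sequence, as a stand-in for Sobol sampling; for actual Sobol points a library implementation such as SciPy's `scipy.stats.qmc.Sobol` would be the natural choice. The mapping from unit-cube points to conditions, the ligand and solvent lists, and the temperature range are assumptions for illustration.

```python
def van_der_corput(n, base):
    """n-th element (n >= 1) of the van der Corput sequence in the given base."""
    q, denom = 0.0, 1.0
    while n:
        n, remainder = divmod(n, base)
        denom *= base
        q += remainder / denom
    return q


def halton(n_points, primes=(2, 3, 5)):
    """Quasi-random points in the unit cube, one coprime base per dimension."""
    return [
        tuple(van_der_corput(i, p) for p in primes)
        for i in range(1, n_points + 1)
    ]


def to_conditions(unit_point, ligands, solvents, temp_range_c):
    """Map a point in [0, 1)^3 to one categorical/continuous reaction condition."""
    u_ligand, u_solvent, u_temp = unit_point
    low, high = temp_range_c
    return {
        "ligand": ligands[int(u_ligand * len(ligands))],
        "solvent": solvents[int(u_solvent * len(solvents))],
        "temperature_c": round(low + u_temp * (high - low), 1),
    }


# Hypothetical option lists for a 96-reaction first batch
ligands = ["L1", "L2", "L3", "L4"]
solvents = ["THF", "toluene", "DMAc"]
batch = [to_conditions(p, ligands, solvents, (40.0, 100.0)) for p in halton(96)]
print(batch[:3])
```

Unlike independent random draws, consecutive points of a low-discrepancy sequence avoid clustering, so a single 96-well plate samples each ligand, solvent, and temperature region more evenly.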

Flow Chemistry for HTE

Flow chemistry has emerged as a powerful complement to plate-based HTE, particularly for reactions involving hazardous reagents, extreme conditions, or photochemical transformations [13]. Unlike batch-based HTE, flow systems enable continuous variation of parameters like temperature, pressure, and reaction time throughout an experiment, providing access to wide process windows not achievable in batch systems [13]. This capability is especially valuable for photochemical reactions, where flow reactors minimize light path length and precisely control irradiation time, overcoming limitations of traditional batch photoreactors [13].

The combination of flow chemistry with self-optimizing systems creates particularly powerful platforms for multidimensional optimization. These integrated systems employ modular, autonomous microreactor setups equipped with real-time reaction monitoring (e.g., inline FT-IR spectroscopy) and optimization algorithms that automatically adjust parameters to maximize objective functions [29]. This approach enables model-free autonomous optimization while simultaneously collecting kinetic data for additional process insights [29].

Experimental Protocols

Protocol 1: ML-Driven HTE Optimization Campaign for Suzuki Reaction

This protocol outlines the application of the Minerva ML framework for optimizing a nickel-catalyzed Suzuki reaction, representing a state-of-the-art multidimensional optimization approach [2].

Materials and Reagents

- Ligand Library: Diverse phosphine ligands (e.g., BippyPhos, JohnPhos, dppf)

- Solvent Library: Multiple solvent classes (ether, hydrocarbon, dipolar aprotic)

- Base Library: Inorganic and organic bases (e.g., K3PO4, Cs2CO3, KOtBu)

- Catalyst: Nickel precursor (e.g., NiCl2·glyme)

- Substrates: Boronic acid and aryl electrophile

Equipment

- Automated liquid handling system (e.g., Opentrons OT-2)

- 96-well plate reactor system

- UPLC-MS system for analysis

- Computer running Minerva framework

Procedure

- Reaction Condition Space Definition: Define the multidimensional parameter space including categorical variables (ligand, solvent, base) and continuous variables (temperature, concentration, catalyst loading).

- Constraint Implementation: Programmatically exclude impractical conditions (e.g., temperatures exceeding solvent boiling points, unsafe reagent combinations).

- Initial Experiment Selection: Execute algorithmic Sobol sampling to select an initial batch of 96 diverse reaction conditions maximizing space coverage.

- Plate Preparation:

  - Prepare stock solutions of substrates, catalyst, and bases.

  - Use automated liquid handling to distribute reagents according to the experimental design.

  - Seal plates and initiate reactions with precise temperature control.

- Reaction Analysis:

  - Quench reactions after the specified time.

  - Analyze yields and selectivity via UPLC-MS.

  - Process analytical data into a standardized format (e.g., area percent yield).

- ML Optimization Cycle:

  - Input results into the Minerva framework to train the Gaussian Process regressor.

  - Use the q-NParEgo acquisition function to select the next batch of 96 experiments, balancing exploration and exploitation.

  - Repeat plate preparation and analysis for 3-5 optimization cycles.

- Result Validation: Confirm optimal conditions identified through HTE in traditional batch reactor at preparative scale.
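The first two procedure steps (defining the condition space and excluding impractical conditions) can be sketched as follows. This is a minimal illustration, not Minerva's implementation: the three solvents are assumed representatives of the ether, hydrocarbon, and dipolar aprotic classes, the temperature levels are hypothetical, and the only constraint encoded is the boiling-point rule mentioned above.

```python
from itertools import product

# Categorical variables; ligands and bases are the examples named in the protocol.
LIGANDS = ["BippyPhos", "JohnPhos", "dppf"]
BASES = ["K3PO4", "Cs2CO3", "KOtBu"]

# Assumed solvent choices with their normal boiling points in °C.
SOLVENT_BOILING_POINT_C = {"THF": 66.0, "toluene": 110.6, "DMF": 153.0}

# Hypothetical discretized temperature levels in °C.
TEMPERATURES_C = [60.0, 80.0, 100.0]


def is_practical(solvent, temperature_c):
    """Constraint: reject temperatures above the solvent's boiling point."""
    return temperature_c <= SOLVENT_BOILING_POINT_C[solvent]


def build_condition_space():
    """Enumerate every combination and keep only the practical ones."""
    return [
        {"ligand": ligand, "solvent": solvent, "base": base, "temperature_c": temp}
        for ligand, solvent, base, temp in product(
            LIGANDS, SOLVENT_BOILING_POINT_C, BASES, TEMPERATURES_C
        )
        if is_practical(solvent, temp)
    ]


space = build_condition_space()
total = len(LIGANDS) * len(SOLVENT_BOILING_POINT_C) * len(BASES) * len(TEMPERATURES_C)
print(f"{len(space)} of {total} combinations remain after filtering")
```

The initial batch and all later batches would then be drawn from this filtered list, so the sampler and the acquisition function never propose an excluded condition.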

Troubleshooting Notes

- For reactions with precipitation issues, include filtration steps before analysis.

- If analytical results show high variance, increase replicates for critical conditions.

- When optimization stagnates, adjust acquisition function parameters to favor exploration.
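One way to decide which conditions deserve additional replicates is to compute the coefficient of variation (CV) across existing replicates and flag anything above a threshold. The sketch below assumes a hypothetical mapping from well identifiers to replicate yields and an arbitrary 10% CV cutoff; both are illustrative and should be adapted to the assay's known analytical noise.

```python
from statistics import mean, stdev


def flag_noisy_conditions(replicates, max_cv_percent=10.0):
    """Return (condition, CV%) pairs whose replicate spread exceeds the cutoff.

    Conditions with fewer than two replicates or a zero mean are skipped,
    because a CV cannot be computed for them.
    """
    flagged = []
    for condition, yields in replicates.items():
        if len(yields) < 2:
            continue
        average = mean(yields)
        if average == 0:
            continue
        cv_percent = 100.0 * stdev(yields) / average
        if cv_percent > max_cv_percent:
            flagged.append((condition, round(cv_percent, 1)))
    return flagged


# Invented replicate yields (%) keyed by hypothetical well identifiers.
replicate_yields = {
    "A1": [72.0, 74.0, 73.0],   # tight replicates
    "B4": [40.0, 58.0, 49.0],   # widely scattered replicates
    "C7": [15.0],               # single measurement, cannot be assessed
}

noisy = flag_noisy_conditions(replicate_yields)
print(noisy)
```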

Protocol 2: Flow Chemistry with Real-Time Self-Optimization

This protocol describes the implementation of a self-optimizing flow chemistry system for imine synthesis, combining continuous flow with real-time multidimensional optimization [29].

Materials and Reagents

- Benzaldehyde (ReagentPlus, 99%)

- Benzylamine (ReagentPlus, 99%)

- Methanol (for synthesis, >99%)

Equipment

- Microreactor system (stainless steel capillaries, 1.87 mL total volume)

- Syringe pumps (e.g., SyrDos2)

- Temperature control system

- Inline FT-IR spectrometer (e.g., Bruker ALPHA) with ATR diamond crystal

- MATLAB control system with OPC interface

Procedure

- System Configuration:

  - Assemble the microreactor setup from connected capillaries (0.5 mm ID, 5 m length and 0.75 mm ID, 2 m length).

  - Connect syringe pumps for the benzaldehyde, benzylamine, and methanol feeds.

  - Integrate the inline FT-IR spectrometer for real-time reaction monitoring.

  - Establish communication between pumps, thermostat, spectrometer, and the MATLAB control system.

- Calibration:

  - Collect reference FT-IR spectra for pure benzaldehyde (characteristic band: 1680-1720 cm⁻¹) and the imine product (characteristic band: 1620-1660 cm⁻¹).

  - Establish calibration curves correlating IR band intensities with concentrations.

- Objective Function Definition:

  - Program the objective function to maximize imine concentration or minimize benzaldehyde concentration.

  - Define optimization constraints (temperature limits, flow rate ranges, pressure limits).

- Optimization Execution:

  - Select the optimization algorithm (modified Nelder-Mead simplex or Design of Experiments).

  - Initialize the system with starting conditions chosen within the allowed ranges (residence time: 0.5-6 min, temperature: 20-80 °C, variable stoichiometry).

  - Implement the real-time optimization loop:

    - Monitor conversion via FT-IR band intensities.

    - Calculate the objective function value.

    - Let the algorithm determine the next parameter set.

    - Let the system automatically adjust pump flow rates and temperature.

  - Continue optimization until convergence (minimal further improvement in the objective function).

- Disturbance Response Testing (optional):

  - Introduce deliberate disturbances (concentration variations, temperature fluctuations).

  - Verify the system's capability to respond and re-optimize in real time.

- Data Collection:

  - Record all parameter combinations and corresponding objective function values.

  - Extract kinetic parameters from concentration profiles if desired.
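The optimization loop in the procedure above can be sketched with a plain Nelder-Mead simplex search. This is a simplified textbook variant, not the modified algorithm of [29], and the "measurement" is a hypothetical smooth function standing in for the FT-IR imine signal, with an invented optimum at 4 min and 60 °C. On a real platform, `objective` would set the pumps and thermostat, wait for steady state, and read the spectrometer.

```python
import math

BOUNDS = [(0.5, 6.0), (20.0, 80.0)]  # residence time (min), temperature (°C)
history = []  # every "experiment": (residence time, temperature, signal)


def simulated_imine_signal(residence_min, temp_c):
    """Hypothetical stand-in for the inline FT-IR readout (peak at 4 min, 60 °C)."""
    return math.exp(-((residence_min - 4.0) / 2.0) ** 2 - ((temp_c - 60.0) / 25.0) ** 2)


def clip(point):
    """Keep a proposed parameter set inside the allowed operating window."""
    return [min(max(v, lo), hi) for v, (lo, hi) in zip(point, BOUNDS)]


def objective(point):
    """Run one experiment, log it, and return the negative signal (minimized)."""
    signal = simulated_imine_signal(*point)
    history.append((point[0], point[1], signal))
    return -signal


def nelder_mead(f, start, step, max_iter=200, tol=1e-6):
    n = len(start)
    simplex = [clip(start)] + [
        clip([s + (step[i] if i == j else 0.0) for i, s in enumerate(start)])
        for j in range(n)
    ]
    values = [f(p) for p in simplex]
    for _ in range(max_iter):
        order = sorted(range(n + 1), key=lambda i: values[i])
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        if abs(values[-1] - values[0]) < tol:  # minimal improvement left
            break
        worst = simplex[-1]
        centroid = [sum(p[i] for p in simplex[:-1]) / n for i in range(n)]
        reflected = clip([c + (c - w) for c, w in zip(centroid, worst)])
        f_reflected = f(reflected)
        if f_reflected < values[0]:  # new best: try to go further
            expanded = clip([c + 2.0 * (c - w) for c, w in zip(centroid, worst)])
            f_expanded = f(expanded)
            if f_expanded < f_reflected:
                simplex[-1], values[-1] = expanded, f_expanded
            else:
                simplex[-1], values[-1] = reflected, f_reflected
        elif f_reflected < values[-2]:  # better than second worst: accept
            simplex[-1], values[-1] = reflected, f_reflected
        else:  # contract toward the centroid, else shrink toward the best
            contracted = [c + 0.5 * (w - c) for c, w in zip(centroid, worst)]
            f_contracted = f(contracted)
            if f_contracted < values[-1]:
                simplex[-1], values[-1] = contracted, f_contracted
            else:
                best = simplex[0]
                simplex = [best] + [
                    [b + 0.5 * (v - b) for b, v in zip(best, p)] for p in simplex[1:]
                ]
                values = [values[0]] + [f(p) for p in simplex[1:]]
    i_best = min(range(n + 1), key=lambda i: values[i])
    return simplex[i_best], values[i_best]


best_point, best_value = nelder_mead(objective, start=[1.0, 30.0], step=[1.0, 10.0])
print(f"optimum near {best_point[0]:.2f} min, {best_point[1]:.1f} °C "
      f"after {len(history)} simulated experiments")
```

Because every call to `objective` is logged in `history`, the same run also yields the record of parameter combinations and objective values called for in the data collection step.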

Troubleshooting Notes

- Ensure that the Bodenstein number (Bo) exceeds 100 so that the reactor operates close to ideal plug flow.

- Monitor system pressure for potential clogging.

- Verify ATR crystal cleanliness regularly to maintain IR signal quality.
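As a consistency check on the setup, the capillary dimensions given in the system configuration step can be converted into a reactor volume, and the residence-time range into total pump flow rates. The sketch below assumes ideal cylindrical capillaries and ignores the volume of fittings and the FT-IR flow cell; the function names are illustrative.

```python
import math

# (inner diameter in mm, length in m) for the two capillary segments in the protocol
SEGMENTS = [(0.5, 5.0), (0.75, 2.0)]


def capillary_volume_ml(inner_diameter_mm, length_m):
    """Volume of a cylindrical capillary in mL (1 mm^3 equals 1 µL)."""
    radius_mm = inner_diameter_mm / 2.0
    volume_ul = math.pi * radius_mm ** 2 * (length_m * 1000.0)
    return volume_ul / 1000.0


reactor_volume_ml = sum(capillary_volume_ml(d, length) for d, length in SEGMENTS)


def total_flow_ml_min(residence_time_min):
    """Combined flow rate of all feeds needed for a given residence time."""
    return reactor_volume_ml / residence_time_min


print(f"reactor volume: {reactor_volume_ml:.2f} mL")
for tau in (0.5, 6.0):
    print(f"residence time {tau} min -> total flow {total_flow_ml_min(tau):.2f} mL/min")
```

The computed volume agrees with the 1.87 mL total stated in the equipment list, and the 0.5-6 min residence-time window corresponds to total flow rates of roughly 3.7 down to 0.3 mL/min.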

Table 2: Key Research Reagent Solutions for Multidimensional Optimization

| Reagent Category | Specific Examples | Function in Optimization |
|---|---|---|
| Catalyst Systems | NiCl2·glyme, Pd2(dba)3, CuI | Vary catalytic activity and selectivity; explore earth-abundant alternatives [2] |
| Ligand Libraries | Phosphine ligands (BippyPhos, XPhos), N-heterocyclic carbenes | Modulate catalyst properties; significant impact on reaction outcome [2] |
| Solvent Collections | Ethers, hydrocarbons, dipolar aprotic solvents, alcohols | Influence solubility, reactivity, and reaction mechanism [2] [31] |
| Base Arrays | K3PO4, Cs2CO3, KOtBu, Et3N | Affect reaction kinetics and pathways; crucial for coupling reactions [31] |
| Additive Libraries | Silver salts, magnesium sulfate, ammonium additives | Fine-tune reaction outcomes; can dramatically improve yields [31] |

Comparative Performance Analysis

Multidimensional optimization strategies demonstrate compelling advantages over traditional OFAT approaches across multiple performance metrics. In direct comparisons, ML-driven HTE identified conditions achieving >95% yield for pharmaceutical syntheses where OFAT approaches failed to find viable conditions [2]. The Minerva framework successfully navigated a search space of 88,000 possible conditions for a challenging nickel-catalyzed Suzuki reaction, identifying conditions with 76% yield and 92% selectivity, while traditional chemist-designed HTE plates found no successful conditions [2].

Flow chemistry approaches with self-optimization have demonstrated remarkable efficiency in identifying optimal conditions with minimal experimental iterations. In the optimization of imine synthesis, multidimensional approaches using modified Nelder-Mead simplex algorithms required significantly fewer experiments to identify optimal conditions compared to theoretical OFAT requirements [29]. The integration of real-time analytics with autonomous optimization enabled simultaneous optimization of multiple parameters while collecting kinetic data, providing both practical optimum conditions and fundamental mechanistic insights [29].

Implementation Framework

Strategic Selection Guide

Choosing the appropriate multidimensional optimization strategy depends on multiple factors including available instrumentation, reaction constraints, and project objectives. The decision workflow diagram provides a structured approach to selecting the optimal methodology based on specific experimental requirements and constraints. For laboratories with extensive high-throughput capabilities, ML-driven HTE campaigns provide the most powerful approach for navigating large parameter spaces exceeding 50 dimensions [2]. When reactions involve hazardous conditions, extreme temperatures or pressures, or photochemical transformations, flow HTE with self-optimization offers distinct advantages [13] [29]. For more constrained parameter spaces with limited screening capabilities, traditional Design of Experiments approaches remain valuable for efficient optimization [29].

Integration with Existing Workflows

Successful implementation of multidimensional optimization requires thoughtful integration with established research workflows. The phactor software platform demonstrates how HTE data management can be streamlined, providing interfaces to chemical inventories, robotic liquid handlers, and analytical instruments while storing data in machine-readable formats compatible with various analysis tools [31]. This approach minimizes disruption to existing workflows while maximizing the value extracted from HTE campaigns.

For pharmaceutical development teams, adopting these methodologies can dramatically accelerate process development timelines. In one documented case, an ML framework identified improved process conditions in 4 weeks compared to a previous 6-month development campaign using traditional approaches [2]. As these technologies continue to mature and become more accessible, they represent increasingly essential tools for research organizations seeking to maintain competitive advantage in reaction discovery and optimization.

From Theory to Practice: Implementing HTE and Machine Learning Workflows

Within modern research and development, particularly in pharmaceutical and specialty chemical industries, the demand for rapid and efficient process development is paramount. Traditional one-factor-at-a-time (OFAT) approaches to reaction optimization are often resource-intensive and time-consuming, failing to capture critical factor interactions [2]. The fusion of High-Throughput Experimentation (HTE), Design of Experiments (DOE), and Machine Learning (ML) represents a paradigm shift, enabling researchers to navigate complex experimental spaces with unprecedented speed and intelligence [30] [2]. This document details a standardized workflow for reaction optimization, framed within the context of high-throughput automated platforms, guiding researchers from initial experimental design to final experimental validation. By adopting this structured methodology, scientists can accelerate development timelines, improve process understanding, and identify robust optimal conditions, thereby fully leveraging the advantages of automated and intelligent synthesis platforms [30].

Foundational Principles of Design of Experiments (DOE) for Reaction Optimization

The first and most critical step in the optimization workflow is the strategic planning of experiments using DOE. This approach systematically varies multiple factors simultaneously to model the relationship between process parameters and reaction outcomes, such as yield, selectivity, and purity.

Key DOE Concepts and Response Surface Methodology (RSM)

In a typical chemical process optimization, a researcher might investigate factors like Time, Temperature, and Catalyst percentage [32]. The goal of RSM is to fit a quadratic surface to the experimental data, which is well-suited for identifying optimal process settings [32]. A central composite design (CCD) is a standard RSM design composed of a core factorial portion (forming a cube in the factor space), augmented with axial (star) points and center points to allow for the estimation of curvature [32].

- Experimental Design Layout: A three-factor CCD can be structured in two blocks. Block 1 includes the eight factorial points plus four center points, while Block 2 includes six axial points plus two additional center points. This blocking structure accounts for potential day-to-day experimental variability [32].

- Factor Ranges and Alpha (α): The distance of the axial points from the design center, denoted as alpha (α), is a key consideration. Standard options include a Rotatable design (α ≈ 1.681 for three factors), a "Practical" value (α = 1.316 for three factors), or a Face-Centered design (α = 1). The choice influences the extreme ranges of the factors and the properties of the design [32].
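The blocked three-factor layout described above can be generated in coded units (-1, 0, +1, ±α) with a few lines of code. This is a minimal sketch rather than the output of any particular DOE package; the function name is illustrative, and the center-point counts (four in Block 1, two in Block 2) follow the layout described in the text. The rotatable α is computed as the fourth root of the number of factorial points.

```python
from itertools import product


def central_composite_design(n_factors=3, alpha=None):
    """Return (runs, alpha), where runs is a list of (block, coded_point).

    Block 1: full two-level factorial plus four center points.
    Block 2: axial (star) points at ±alpha plus two center points.
    If alpha is None, the rotatable value (2 ** n_factors) ** 0.25 is used.
    """
    if alpha is None:
        alpha = (2 ** n_factors) ** 0.25

    factorial = [list(p) for p in product((-1.0, 1.0), repeat=n_factors)]

    axial = []
    for i in range(n_factors):
        for sign in (-1.0, 1.0):
            point = [0.0] * n_factors
            point[i] = sign * alpha
            axial.append(point)

    center = [0.0] * n_factors
    block1 = factorial + [center[:] for _ in range(4)]
    block2 = axial + [center[:] for _ in range(2)]
    return [(1, p) for p in block1] + [(2, p) for p in block2], alpha


runs, alpha = central_composite_design()
print(f"alpha = {alpha:.3f}, total runs = {len(runs)}")
for block, point in runs:
    print(block, [round(v, 3) for v in point])
```

Passing `alpha=1.0` gives the face-centered variant, in which the axial points sit on the faces of the factorial cube and no factor is pushed beyond its ±1 range.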

Table 1: Key Software Tools for Experimental Design and Data Analysis

| Tool Name | Primary Function | Key Features | Application in Workflow |

|---|---|---|---|