EZSpecificity AI: Revolutionizing Enzyme-Substrate Prediction for Drug Discovery and Protein Engineering

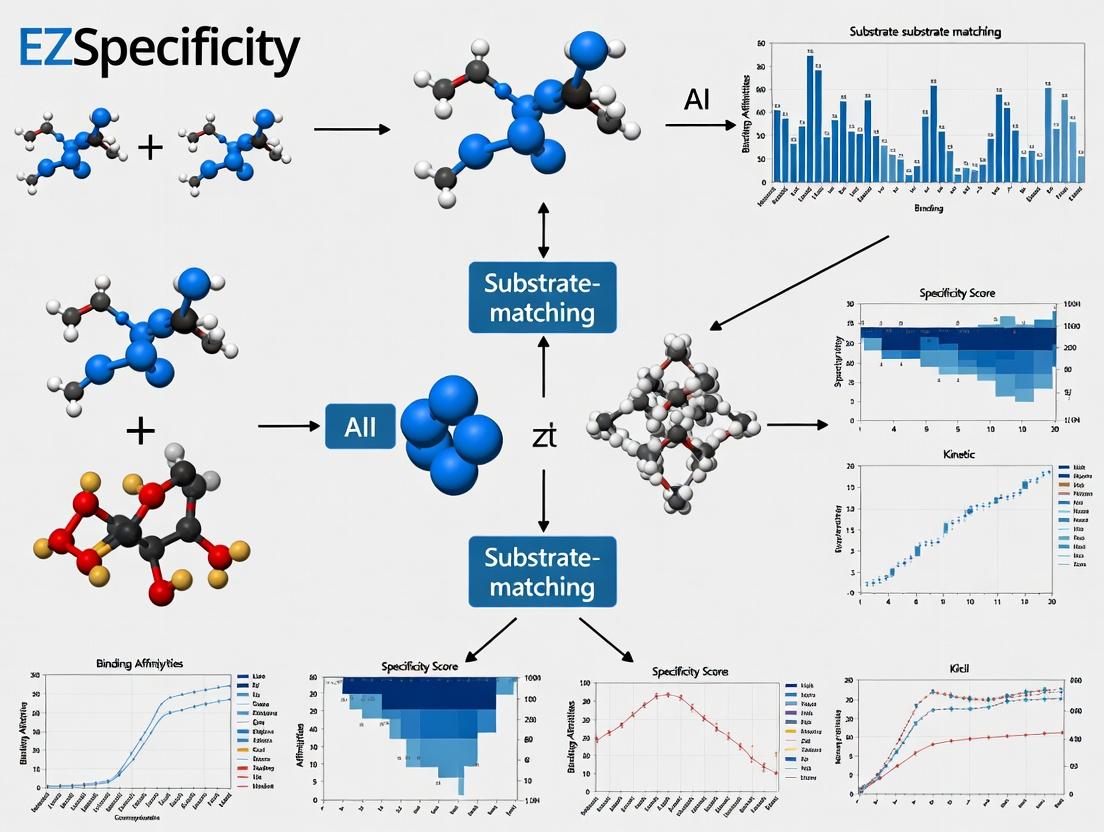


Abstract

This article provides a comprehensive analysis of the EZSpecificity AI tool, an advanced machine learning platform designed to accurately predict and match enzyme-substrate interactions. Targeted at researchers, scientists, and drug development professionals, it explores the tool's foundational concepts, practical applications, optimization strategies, and comparative performance against traditional methods. We cover its core algorithm, from data input and model architecture to result interpretation, while addressing common challenges and validation protocols. The discussion highlights how EZSpecificity accelerates target identification, reduces experimental costs, and drives innovation in therapeutic development and synthetic biology, positioning it as a critical asset in modern computational biochemistry.

What is EZSpecificity AI? Demystifying the Core Technology for Enzyme-Substrate Matching

The central role of enzyme specificity in drug discovery is underscored by quantitative data on drug target distribution and attrition rates. The failure to predict off-target enzyme interactions is a primary cause of clinical phase failure.

Table 1: Quantitative Impact of Enzyme Specificity in Drug Development

| Metric | Value | Source/Implication |

|---|---|---|

| Approved drugs targeting enzymes | ~30% | Major drug target class |

| Clinical failure due to efficacy | ~50% | Often linked to poor target specificity |

| Clinical failure due to safety | ~30% | Often due to off-target enzyme effects |

| Kinase inhibitors with >1 target | >80% | Highlights polypharmacology challenge |

| Estimated proteome-wide enzyme substrates | >10,000 | Vast specificity landscape to map |

| Cost of bringing a drug to market | ~$2.3B | Specificity failures amplify cost |

Application Notes: The EZSpecificity AI Framework

EZSpecificity is a deep learning platform designed to predict enzyme-substrate pairs with high accuracy by integrating structural, sequential, and chemical features.

Core Workflow & Validation:

- Data Curation: The model is trained on databases like BRENDA, CASP, and PDB, encompassing over 500,000 validated enzyme-substrate interactions.

- Feature Integration: Uses convolutional neural networks (CNNs) for structural motif recognition and graph neural networks (GNNs) for binding site chemical landscape analysis.

- Output: A specificity probability score (SPS) between 0 and 1, and a predicted binding affinity (ΔG) in kcal/mol.

- Benchmark Performance: When validated against the test set, EZSpecificity achieved an AUC-ROC of 0.94, significantly outperforming traditional docking (AUC-ROC 0.78) and sequence alignment (AUC-ROC 0.65) methods.

Table 2: EZSpecificity vs. Traditional Methods

| Method | AUC-ROC | Throughput (predictions/day) | Required Input Data |

|---|---|---|---|

| EZSpecificity AI | 0.94 | >100,000 | Sequence or Structure |

| Molecular Docking | 0.78 | 100 - 1,000 | 3D Structure |

| Sequence Homology | 0.65 | 10,000 | Primary Sequence |

| QSAR Models | 0.71 | 50,000 | Chemical Descriptors |

Detailed Experimental Protocols

Protocol 1: In Silico Specificity Screening with EZSpecificity AI

Objective: To predict potential off-target interactions for a novel kinase inhibitor.

Materials: Compound SMILES string, FASTA file of the human kinome, EZSpecificity web server/API.

Procedure:

- Input Preparation: Convert the inhibitor's chemical structure into a canonical SMILES string. Prepare a FASTA file containing the protein sequences of all ~518 human kinases.

- Job Submission: Upload the compound SMILES and kinase FASTA file to the EZSpecificity platform. Select the "Proteome-wide Screening" module.

- Parameter Setting: Set the confidence threshold to SPS > 0.85. Request output to include predicted ΔG and key interacting residues.

- Analysis: Download the results CSV file. Rank kinases by SPS and ΔG. Visually inspect top 10 predicted off-targets using the provided 3D interaction diagrams. Cross-reference with tissue expression databases (e.g., GTEx) for toxicity risk assessment.

- Validation Priority: Select 3-5 high-SPS off-target predictions for in vitro validation using Protocol 2.
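The Analysis step above can be sketched in Python. This is a minimal illustration, assuming the results CSV has columns named `kinase`, `sps`, and `delta_g_kcal_mol`; those column names and the example rows are hypothetical and are not taken from the actual EZSpecificity export format.

```python
import csv
import io

# Hypothetical results export; column names and values are assumptions for illustration.
EXAMPLE_CSV = """kinase,sps,delta_g_kcal_mol
KINASE_A,0.93,-9.8
KINASE_B,0.88,-10.4
KINASE_C,0.72,-7.1
KINASE_D,0.88,-8.9
"""

def rank_off_targets(csv_text, sps_threshold=0.85):
    """Keep rows with SPS above the threshold, ranked by SPS (descending),
    then by predicted binding free energy (most negative first)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    hits = [r for r in rows if float(r["sps"]) > sps_threshold]
    hits.sort(key=lambda r: (-float(r["sps"]), float(r["delta_g_kcal_mol"])))
    return [(r["kinase"], float(r["sps"]), float(r["delta_g_kcal_mol"])) for r in hits]

ranked = rank_off_targets(EXAMPLE_CSV)
for name, sps, dg in ranked:
    print(f"{name}\tSPS={sps:.2f}\tdG={dg:.1f} kcal/mol")
```

Sorting on SPS first and ΔG second mirrors the protocol's ranking order; the top entries of `ranked` would then be the candidates carried into Protocol 2.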

Protocol 2: In Vitro Kinase Activity Assay for Validation

Objective: Experimentally validate AI-predicted enzyme-inhibitor interactions.

Materials:

- Recombinant kinase proteins (from Protocol 1 predictions).

- Test compound.

- ADP-Glo Kinase Assay Kit (Promega).

- White, opaque 384-well assay plates.

- Multimode plate reader (luminescence capability).

Procedure:

- Reaction Setup: In a 10 µL reaction volume per well, combine kinase (final concentration 1-10 nM), substrate (specific peptide for each kinase), ATP (at Km concentration), and test compound (in a 10-point dilution series, e.g., 10 µM to 0.5 nM). Include positive (no inhibitor) and negative (no kinase) controls. Perform in triplicate.

- Incubation: Incubate plate at 25°C for 60 minutes to allow the kinase reaction to proceed.

- ADP Detection: Add 10 µL of ADP-Glo Reagent to terminate the kinase reaction and deplete remaining ATP. Incubate for 40 minutes.

- Kinase Detection: Add 20 µL of Kinase Detection Reagent to convert ADP to ATP and introduce luciferase/luciferin. Incubate for 30 minutes.

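- Measurement: Read luminescence on a plate reader. In the ADP-Glo format, signal is proportional to the ADP produced and therefore to kinase activity, so inhibition appears as a decrease in luminescence.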

- Data Analysis: Plot luminescence vs. log10[inhibitor]. Calculate % inhibition and IC50 values using non-linear regression (e.g., four-parameter logistic fit). Compare IC50 rankings with EZSpecificity's SPS/ΔG rankings.
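The Data Analysis step can be illustrated with a short, dependency-free sketch. It assumes luminescence rises with kinase activity (as in an ADP-detection readout), normalizes raw signal against the no-inhibitor and no-kinase controls, and defines the four-parameter logistic model. The IC50 here is only a rough log-linear interpolation between the two points that bracket 50% inhibition, used as a stand-in for a proper non-linear regression; all numeric values are synthetic.

```python
import math

def percent_inhibition(signal, pos_ctrl, neg_ctrl):
    """% inhibition from raw luminescence.
    pos_ctrl: mean no-inhibitor signal (full activity).
    neg_ctrl: mean no-kinase signal (background)."""
    return 100.0 * (1.0 - (signal - neg_ctrl) / (pos_ctrl - neg_ctrl))

def four_pl(conc, bottom, top, ic50, hill):
    """Four-parameter logistic: inhibition rises from bottom to top with concentration."""
    return bottom + (top - bottom) / (1.0 + (ic50 / conc) ** hill)

def ic50_by_interpolation(concs, inhibitions):
    """Rough IC50 estimate by log-linear interpolation around 50% inhibition."""
    pairs = sorted(zip(concs, inhibitions))
    for (c_lo, i_lo), (c_hi, i_hi) in zip(pairs, pairs[1:]):
        if i_lo <= 50.0 <= i_hi:
            if i_hi == i_lo:
                return c_lo
            frac = (50.0 - i_lo) / (i_hi - i_lo)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_c
    return None  # 50% inhibition not bracketed by the tested range

# Synthetic 10-point, 3-fold series from 10 µM (10,000 nM) down to about 0.5 nM.
concs_nm = [10000.0 / 3 ** k for k in range(10)]
POS, NEG = 50000.0, 500.0  # synthetic control signals (arbitrary luminescence units)
signals = [NEG + (POS - NEG) * (1 - four_pl(c, 0.0, 100.0, 100.0, 1.0) / 100.0)
           for c in concs_nm]
inhib = [percent_inhibition(s, POS, NEG) for s in signals]
ic50_estimate = ic50_by_interpolation(concs_nm, inhib)
print(f"Estimated IC50 is roughly {ic50_estimate:.0f} nM")
```

The synthetic curve was generated with a true IC50 of 100 nM, so the interpolated estimate should land close to that value; a full four-parameter fit would additionally report the Hill slope and the plateaus.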

Visualization of Pathways and Workflows

AI-Driven Specificity Optimization Cycle

Consequences of On vs. Off-Target Enzyme Inhibition

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Reagents for Specificity Research

| Reagent / Kit | Provider Example | Function in Specificity Assays |

|---|---|---|

| ADP-Glo Kinase Assay | Promega | Universal, luminescent kinase activity measurement for IC50 determination. |

| Recombinant Enzyme Panels | ThermoFisher, Reaction Biology | High-purity, active kinases/proteases for profiling inhibitor selectivity. |

| CETSA (Cellular Thermal Shift Assay) Kit | Proteintech | Detect target engagement in live cells, confirming on-target activity. |

| Phospho-Specific Antibody Arrays | R&D Systems | Monitor signaling pathway perturbations from off-target inhibition. |

| Cryo-EM Grade Enzymes | Sigma-Millipore | For structural validation of predicted enzyme-inhibitor complexes. |

| Activity-Based Probes (ABPs) | Click Chemistry Tools | Chemically tag active enzyme pools in complex proteomes for profiling. |

| Metabolomics LC-MS Kits | Agilent, Waters | Quantify metabolite changes due to on/off-target enzyme modulation. |

EZSpecificity AI is a novel computational platform designed to predict and validate enzyme-substrate interactions with high precision, addressing a critical bottleneck in metabolic engineering, drug discovery, and biocatalyst development. This document outlines its core principles and machine learning architecture, and provides application protocols for researchers.

Core Principles & ML Architecture

EZSpecificity AI integrates three predictive pillars into a unified ensemble model.

2.1. Core Predictive Pillars

- Pillar 1: 3D Structural-Complementarity Neural Network (3D-SCNN). Analyzes molecular docking simulations and geometric surface descriptors of enzyme active sites and substrate molecules.

- Pillar 2: Quantum Chemical Property Predictor (QCPP). Calculates and correlates electronic properties (e.g., partial charges, orbital energies, HOMO-LUMO gaps) with known kinetic parameters (kcat/Km).

- Pillar 3: Phylogenetic & Sequence-Function Transformer (PSFT). A deep learning model trained on millions of aligned enzyme sequences and associated substrate profiles across the tree of life, learning latent functional patterns.

2.2. Unified Ensemble Architecture

The outputs of the three pillars are processed by a Meta-Fusion Regressor, which assigns dynamic weights to each pillar's prediction based on input data quality and availability. The final output is a Specificity Score (SS, 0-1) and predicted ∆∆G of binding.
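To make the idea of availability-dependent weighting concrete, here is a minimal sketch. It is not the platform's Meta-Fusion Regressor, which the text describes as a learned model; it is a plain quality-weighted average in which pillars that could not run (for example, no structure for 3D-SCNN) are dropped and the remaining weights are renormalized. The function name, the quality values, and the scores are all hypothetical.

```python
def fuse_predictions(pillar_scores, quality):
    """Quality-weighted average over the pillars that produced a score.

    pillar_scores: dict of pillar name -> score in [0, 1], or None if unavailable.
    quality: dict of pillar name -> non-negative weight (assumed data-quality proxy).
    """
    available = {name: s for name, s in pillar_scores.items() if s is not None}
    total_weight = sum(quality[name] for name in available)
    if total_weight == 0:
        raise ValueError("no usable pillar predictions")
    return sum(quality[name] * s for name, s in available.items()) / total_weight

# Example: no 3D structure was supplied, so the structural pillar is skipped.
scores = {"3D-SCNN": None, "QCPP": 0.6, "PSFT": 0.9}
weights = {"3D-SCNN": 1.0, "QCPP": 0.5, "PSFT": 1.5}
fused = fuse_predictions(scores, weights)
print(f"Fused specificity score: {fused:.3f}")
```

Renormalizing over the available pillars keeps the fused score on the same 0-1 scale regardless of which inputs were provided.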

Diagram Title: EZSpecificity AI Ensemble Architecture

Application Notes & Experimental Protocols

Protocol 3.1: In Silico Screening for Novel Substrate Identification

Purpose: To computationally identify potential novel substrates for a target enzyme (e.g., a cytochrome P450 monooxygenase).

Workflow:

- Input Preparation: Provide enzyme amino acid sequence (FASTA) and, if available, PDB file. Define a substrate library (e.g., in SMILES format).

- EZSpecificity AI Analysis: Run the ensemble model. The platform will:

  - Generate a predicted structure if none is provided (via integrated AlphaFold2).

  - Perform high-throughput molecular docking for 3D-SCNN.

  - Calculate quantum descriptors for all substrates.

  - Output a ranked list by Specificity Score (SS).

Diagram Title: In Silico Screening Workflow

Protocol 3.2: Experimental Validation of Predicted Interactions

Purpose: To biochemically validate top candidate substrate-enzyme pairs predicted by EZSpecificity AI.

Materials & Methods:

- Target Enzyme: Purified recombinant enzyme.

- Candidate Substrates: Top 3-5 predicted substrates and one known positive control.

- Assay: Appropriate continuous or endpoint assay (e.g., spectrophotometric, fluorometric, HPLC-MS).

- Procedure:

  - Perform kinetic assays with varying substrate concentrations.

  - Measure initial reaction velocities.

  - Fit data to the Michaelis-Menten equation to derive Km and kcat.

  - Compare experimental specificity constant (kcat/Km) with predicted SS and ∆∆G.

Table 1: Example Validation Results for CYP450 3A4

| Substrate (Predicted Rank) | Experimental kcat/Km (M⁻¹s⁻¹) | Predicted SS | Correlation Status |

|---|---|---|---|

| Testosterone (Positive Control) | 1.2 x 10⁵ | 0.91 | Benchmark |

| Compound A (Rank 1) | 8.7 x 10⁴ | 0.88 | Validated |

| Compound B (Rank 2) | 2.1 x 10⁴ | 0.76 | Validated |

| Compound C (Rank 5) | < 10² | 0.41 | False Positive |

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Validation Experiments

| Reagent / Material | Function in Protocol 3.2 | Example Vendor / Catalog |

|---|---|---|

| Purified Recombinant Enzyme | Catalytic entity for kinetic assays. | Produced in-house or purchased from Sigma-Aldrich, Thermo Fisher. |

| Substrate Library (in silico) | Digital compounds for initial AI screening. | PubChem, ZINC20 database. |

| Assay Buffer System (e.g., Tris-HCl, PBS) | Maintains optimal pH and ionic strength for enzyme activity. | MilliporeSigma, Gibco. |

| Cofactor / Cofactor Regeneration System | Supplies necessary redox equivalents (e.g., NADPH for P450s). | Oriental Yeast Co., Roche. |

| Detection Reagents (Fluorogenic/Chromogenic) | Enables quantification of reaction product. | Promega, Cayman Chemical. |

| HPLC-MS System & Columns | For definitive product identification and quantification. | Agilent, Waters. |

| Microplate Reader (UV-Vis/Fluorescence) | High-throughput kinetic data acquisition. | BioTek, BMG Labtech. |

For the EZSpecificity AI tool, as for any enzyme-substrate matching model, predictive accuracy depends fundamentally on the quality, scope, and structure of the input data. This document outlines the critical data inputs required and the expected model outputs, providing application notes and protocols to guide researchers in preparing data for robust, generalizable predictions in enzyme engineering and drug discovery.

Core Data Input Categories and Requirements

The EZSpecificity model integrates heterogeneous data types. The table below summarizes the quantitative data requirements.

Table 1: Essential Input Data Categories for EZSpecificity AI

| Data Category | Key Parameters & Metrics | Minimum Recommended Volume | Critical Quality Indicators |

|---|---|---|---|

| Protein Sequence & Structure | Amino acid sequence (FASTA), PDB ID, Resolution (Å), Mutant variants. | 500+ unique enzyme structures | Sequence completeness, resolved active site, mutation annotation accuracy. |

| Substrate Chemical Data | SMILES notation, Molecular weight (Da), LogP, Topological polar surface area (Ų), Functional groups. | 1000+ unique compounds | Stereochemical specificity, tautomer standardization, verified purity. |

| Kinetic Parameters | kcat (s⁻¹), KM (µM or mM), kcat/KM (M⁻¹s⁻¹), IC50 (nM). | 10,000+ data points across enzymes/substrates | Assay pH/Temp consistency, standard deviation (<15% of mean). |

| Experimental Conditions | pH, Temperature (°C), Buffer ionic strength (mM), Cofactor presence/conc. | Contextual for all kinetic data | Full metadata reporting, environmental control documentation. |

| High-Throughput Screening (HTS) | Fluorescence (RFU) readouts, Z'-factor (>0.5), Hit rate (%). | 50,000+ data points per screen | Assay robustness (Z'-factor), clear positive/negative controls. |
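The Z'-factor quality threshold in the HTS row can be checked with a few lines of Python. The sketch below uses the standard definition, Z' = 1 - 3(σ_pos + σ_neg) / |μ_pos - μ_neg|, computed from control wells; the control readings are synthetic values chosen for illustration.

```python
from statistics import mean, stdev

def z_prime(pos_controls, neg_controls):
    """Z'-factor from positive and negative control wells (sample standard deviations)."""
    separation = abs(mean(pos_controls) - mean(neg_controls))
    return 1.0 - 3.0 * (stdev(pos_controls) + stdev(neg_controls)) / separation

# Synthetic control readings (arbitrary fluorescence units).
pos = [1000, 1020, 980, 1010, 990]
neg = [100, 110, 90, 105, 95]
zp = z_prime(pos, neg)
print(f"Z' = {zp:.3f}; passes the >0.5 criterion: {zp > 0.5}")
```

A screen whose controls give Z' at or below 0.5 would fall short of the quality indicator listed in the table and is a candidate for exclusion from training data.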

Experimental Protocols for Critical Data Generation

Protocol 1: Generating Standardized Kinetic Datasets for Model Training

Objective: To produce reliable kcat and KM values for enzyme-substrate pairs under controlled conditions.

Materials:

- Purified enzyme (>95% purity via SDS-PAGE).

- Substrate library (validated by LC-MS for identity/purity).

- Plate reader (e.g., SpectraMax M5) or stopped-flow apparatus.

- Appropriate assay buffer (e.g., 50 mM Tris-HCl, pH 7.5, 10 mM MgCl₂).

Procedure:

- Enzyme Standardization: Dilute purified enzyme in assay buffer to a working stock concentration. Confirm activity with a standard reference substrate.

- Substrate Dilution Series: Prepare 8-12 serial dilutions of the target substrate, typically spanning 0.1x to 10x the estimated KM.

- Reaction Initiation: In a 96-well plate, mix 80 µL of substrate solution with 20 µL of enzyme solution to start the reaction. Perform triplicates for each concentration.

- Initial Rate Measurement: Monitor product formation (via absorbance or fluorescence) while substrate conversion remains at or below 10%. Use the linear portion of the progress curve to calculate initial velocity (v0).

- Data Analysis: Fit v0 vs. [substrate] data to the Michaelis-Menten equation (v0 = (Vmax[S])/(KM + [S])) using non-linear regression (e.g., GraphPad Prism). Report kcat (Vmax/[Etotal]) and KM with 95% confidence intervals.
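As an illustration of the Data Analysis step, the following sketch fits the Michaelis-Menten equation by non-linear least squares using a hand-written Gauss-Newton iteration, seeded from a Hanes-Woolf linearization. It is a dependency-free stand-in for a tool such as GraphPad Prism, it does not compute the 95% confidence intervals the protocol asks for, and the data and enzyme concentration are synthetic.

```python
def fit_michaelis_menten(s, v, iterations=50):
    """Least-squares fit of v = Vmax*[S]/(Km + [S]); returns (Vmax, Km)."""
    # Starting guess from a Hanes-Woolf line: [S]/v = [S]/Vmax + Km/Vmax
    n = len(s)
    y = [si / vi for si, vi in zip(s, v)]
    sx, sy = sum(s), sum(y)
    sxx = sum(si * si for si in s)
    sxy = sum(si * yi for si, yi in zip(s, y))
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    intercept = (sy - slope * sx) / n
    vmax, km = 1.0 / slope, intercept / slope

    for _ in range(iterations):
        # Accumulate the 2x2 normal equations (J^T J) delta = J^T r
        a11 = a12 = a22 = b1 = b2 = 0.0
        for si, vi in zip(s, v):
            d_vmax = si / (km + si)                 # partial derivative w.r.t. Vmax
            d_km = -vmax * si / (km + si) ** 2      # partial derivative w.r.t. Km
            r = vi - vmax * d_vmax                  # residual: observed minus model
            a11 += d_vmax * d_vmax
            a12 += d_vmax * d_km
            a22 += d_km * d_km
            b1 += d_vmax * r
            b2 += d_km * r
        det = a11 * a22 - a12 * a12
        if det == 0:
            break
        step_vmax = (a22 * b1 - a12 * b2) / det
        step_km = (a11 * b2 - a12 * b1) / det
        vmax += step_vmax
        km += step_km
        if abs(step_vmax) < 1e-12 and abs(step_km) < 1e-12:
            break
    return vmax, km

# Synthetic, noise-free data: Vmax = 2.0 µM/s, Km = 50 µM, [E_total] = 0.01 µM.
E_TOTAL_UM = 0.01
substrate_um = [5, 10, 25, 50, 100, 250, 500]   # spans 0.1x to 10x Km
rates = [2.0 * s / (50.0 + s) for s in substrate_um]
vmax_fit, km_fit = fit_michaelis_menten(substrate_um, rates)
kcat = vmax_fit / E_TOTAL_UM                     # kcat = Vmax / [E_total], in s^-1
print(f"Vmax={vmax_fit:.3f} µM/s, Km={km_fit:.1f} µM, kcat={kcat:.0f} 1/s")
```

Fitting the untransformed rates weights the data more evenly than a linearized plot, which is why the linearization is used here only to obtain the starting guess.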

Protocol 2: Structural Data Curation for Active Site Feature Extraction

Objective: To curate and pre-process enzyme 3D structures for featurization input into EZSpecificity AI.

Materials:

- Public (PDB) or proprietary protein structure files (.pdb, .cif).

- Computational tools: PyMOL, RDKit.

- High-performance computing cluster for molecular dynamics (MD) simulations (optional but recommended).

Procedure:

- Structure Retrieval & Selection: For a target enzyme, retrieve all available PDB structures. Prioritize structures with: a) Highest resolution (<2.0 Å), b) Presence of native substrate or inhibitor, c) Complete active site residues.

- Structure Preparation: Using PyMOL or Schrödinger's Protein Preparation Wizard, remove heteroatoms not relevant to catalysis, add missing side chains, and assign correct protonation states for active site residues at the target pH.

- Active Site Definition: Identify all residues within a 6 Å radius of the bound ligand or catalytic residues. Export coordinates and atomic features.

- Molecular Dynamics Relaxation (Optional): Solvate the prepared structure in a TIP3P water box, neutralize with ions, and run a short MD simulation (e.g., 10 ns NPT) to relax the structure. Extract a stable snapshot for analysis.

- Feature Vector Generation: For the defined active site, compute feature vectors including: electrostatic potential grids, hydrophobicity profiles, hydrogen bond donor/acceptor maps, and residue type probabilities.
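The Active Site Definition step reduces to a distance filter, sketched below in plain Python. Coordinates would normally come from a parsed PDB or mmCIF file; here they are toy values, and the residue labels and the function name are hypothetical.

```python
import math

def residues_within(protein_atoms, ligand_atoms, cutoff=6.0):
    """Return residue IDs having at least one atom within `cutoff` Å of any ligand atom.

    protein_atoms: list of (residue_id, (x, y, z)) tuples.
    ligand_atoms: list of (x, y, z) tuples.
    """
    selected = set()
    for res_id, coord in protein_atoms:
        if any(math.dist(coord, lig) <= cutoff for lig in ligand_atoms):
            selected.add(res_id)
    return sorted(selected)

# Toy coordinates in Å; residue labels are illustrative only.
protein = [
    ("HIS57", (1.0, 0.0, 0.0)),
    ("SER195", (0.0, 4.0, 0.0)),
    ("ASP102", (0.0, 0.0, 5.9)),
    ("GLY216", (10.0, 0.0, 0.0)),
]
ligand = [(0.0, 0.0, 0.0)]
pocket = residues_within(protein, ligand)
print(pocket)
```

The all-pairs loop is adequate for a single structure; for large batches a spatial index (for example a k-d tree) would be the usual replacement.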

Visualization of Key Workflows

AI Model Training and Prediction Workflow

Kinetic Parameter Determination Protocol

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents and Materials for Data Generation

| Item | Function & Application | Key Considerations |

|---|---|---|

| HisTrap HP Column (Cytiva) | Affinity purification of his-tagged recombinant enzymes. | Ensures high-purity (>95%) enzyme prep critical for accurate kinetics. |

| SpectraMax M5e Multi-Mode Microplate Reader | Measures absorbance/fluorescence for high-throughput kinetic assays. | Enables rapid initial rate determination across 96/384-well formats. |

| Covalent Inhibitor Probe Library (e.g., PubChem) | Chemoproteomic identification of enzyme active sites and specificity pockets. | Validates AI-predicted binding modes and reactive residues. |

| Molecular Dynamics Software (e.g., GROMACS) | Simulates enzyme flexibility and substrate docking pathways. | Generates supplementary data on conformational states for model training. |

| Standard Substrate Libraries (e.g., Enamine) | Provides diverse chemical space for testing substrate promiscuity. | Benchmarks AI predictions against empirical activity cliffs. |

Model Outputs and Validation

The primary output of the EZSpecificity AI tool is a Specificity Probability Matrix and predicted kinetic parameters for novel enzyme-substrate pairs.

Table 3: Key Model Outputs and Their Interpretation

| Output Metric | Description | Validation Method |

|---|---|---|

| Predicted kcat/KM | Catalytic efficiency estimate (log scale). | Compare with in vitro kinetic data for held-out test sets (R² target > 0.7). |

| Binding Affinity (ΔG, kcal/mol) | Estimated free energy of substrate binding. | Validate via Isothermal Titration Calorimetry (ITC) or surface plasmon resonance (SPR). |

| Specificity Score (0-1) | Probability of a substrate being processed over background noise. | Validate via HTS using a diverse substrate library; calculate ROC-AUC. |

| Meta-confidence Score | Model's self-assessment of prediction reliability based on training data density. | Correlate with prediction error magnitude on unseen data. |

Protocol 3: Validating AI Predictions with Orthogonal Assays

Objective: To experimentally verify EZSpecificity AI predictions using orthogonal biochemical methods.

Materials:

- AI-predicted "high-probability" and "low-probability" substrate lists.

- Isothermal Titration Calorimeter (e.g., Malvern MicroCal PEAQ-ITC).

- SPR system (e.g., Biacore 8K).

Procedure:

- ITC for Binding Validation:

  a. Dialyze enzyme and predicted substrates into identical buffer.

  b. Fill the sample cell with enzyme (20 µM) and the syringe with substrate (200 µM).

  c. Perform titrations (19 injections, 2 µL each) at 25°C.

  d. Fit integrated heat data to a single-site binding model to derive experimental ΔG, KD.

  e. Compare with AI-predicted ΔG values (target correlation R² > 0.65).

- SPR for Direct Binding Kinetics:

  a. Immobilize the enzyme on a CM5 sensor chip via amine coupling.

  b. Flow predicted substrates at 5 concentrations over the chip surface.

  c. Analyze association/dissociation sensorgrams using a 1:1 Langmuir binding model.

  d. Compare derived KD (SPR) with AI-predicted binding affinity.
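Comparing a measured KD with a predicted ΔG requires putting both on the same scale. The sketch below applies the standard relation ΔG = RT ln(KD / c°), with a 1 M standard state and the 25°C assay temperature from the ITC step; the KD value used in the example is hypothetical.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)

def kd_to_delta_g(kd_molar, temp_kelvin=298.15):
    """Binding free energy (kcal/mol) from a dissociation constant in mol/L (1 M standard state)."""
    return R_KCAL * temp_kelvin * math.log(kd_molar)

def delta_g_to_kd(delta_g_kcal, temp_kelvin=298.15):
    """Inverse conversion: dissociation constant (mol/L) from ΔG in kcal/mol."""
    return math.exp(delta_g_kcal / (R_KCAL * temp_kelvin))

dg = kd_to_delta_g(1e-6)  # hypothetical KD of 1 µM
print(f"KD = 1 µM corresponds to dG of about {dg:.2f} kcal/mol at 25 °C")
```

Because the relation is logarithmic, each tenfold change in KD shifts ΔG by roughly 1.36 kcal/mol at 25°C, which is a useful yardstick when judging how far a prediction is from the measurement.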

The predictive fidelity of the EZSpecificity AI tool is directly contingent upon comprehensive, high-quality input data spanning sequences, structures, and kinetic parameters. Adherence to the detailed protocols for data generation and validation ensures the development of robust models capable of accurately mapping enzyme-substrate interactions, thereby accelerating research in rational drug design and enzyme engineering.

The EZSpecificity AI tool is engineered to address a core challenge in enzymology and drug discovery: the high-fidelity prediction of enzyme-substrate pairs, with a particular emphasis on specificity-conferring residues and binding geometries. This tool's predictive power is derived from a sophisticated machine learning pipeline whose architecture is fundamentally shaped by the quality and structure of its training data and the nuances of its learning process. This document details the data protocols and model training methodologies that underpin the EZSpecificity system.

The model is trained on a multi-modal dataset integrating structural, sequential, and biochemical data.

Table 1: Primary Training Data Sources for EZSpecificity

| Data Type | Primary Source(s) | Volume (Approx.) | Key Annotations | Preprocessing Protocol |

|---|---|---|---|---|

| Protein Structures | RCSB Protein Data Bank (PDB) | ~180,000 entries | Enzyme Commission (EC) number, bound ligands, active site residues. | 1. Filter for proteins with EC annotation. 2. Extract biological assembly. 3. Remove non-relevant ions/solvents. 4. Compute electrostatic surface (APBS) and spatial graph. |

| Enzyme-Substrate Kinetics | BRENDA, SABIO-RK | ~700,000 kinetic parameters | Km, kcat, Ki values for specific substrate pairs. | 1. Standardize units (µM, s⁻¹). 2. Map substrates to InChI/ SMILES. 3. Flag data from mutant enzymes. |

| Reaction Rules & Chemistry | Rhea, MACiE | ~13,000 biochemical reactions | Atom-atom mapping, reaction center identification. | Encode as molecular transformation fingerprints using RDKit. |

| Genomic & Metagenomic Data | UniProt, MGnify | ~20 million enzyme sequences | EC number, protein family (Pfam). | 1. Cluster at 50% identity. 2. Generate multiple sequence alignments (MSA). 3. Derive position-specific scoring matrices (PSSM). |

Protocol 2.1: Structure-Based Active Site Featurization

- Input: PDB file of an enzyme-ligand complex.

- Active Site Definition: Residues within 6Å of any ligand atom are defined as the binding pocket.

- Graph Construction: Each residue/atom becomes a node. Edges are drawn for distances <5Å.

- Node Features: For residues: amino acid type, solvent accessibility, secondary structure, PSSM conservation score. For ligand atoms: element type, partial charge, hybridization state.

- Output: A fixed-size graph representation (or graph descriptor vector) for the enzyme-substrate micro-environment.
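The Graph Construction step can be sketched as a pairwise distance test over pocket nodes. This is a toy illustration with one coordinate per node (for residues, this could be a Cα or centroid position, which is an assumption); node labels and coordinates are made up.

```python
import math
from itertools import combinations

def build_edges(nodes, cutoff=5.0):
    """Return undirected edges (a, b, distance) for node pairs closer than `cutoff` Å.

    nodes: dict of node_id -> (x, y, z).
    """
    edges = []
    for (a, pos_a), (b, pos_b) in combinations(sorted(nodes.items()), 2):
        d = math.dist(pos_a, pos_b)
        if d < cutoff:
            edges.append((a, b, round(d, 2)))
    return edges

# Toy pocket: three residues and one ligand atom (coordinates in Å).
pocket_nodes = {
    "SER195": (0.0, 0.0, 0.0),
    "HIS57": (3.0, 0.0, 0.0),
    "LIG:C1": (3.0, 4.0, 0.0),
    "ASP102": (9.0, 0.0, 0.0),
}
edges = build_edges(pocket_nodes)
for a, b, d in edges:
    print(f"{a} -- {b}: {d} Å")
```

Node feature vectors (amino acid type, partial charge, and so on) would be attached to each node ID separately; the edge list above only captures the spatial connectivity.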

The Learning Process: Model Architecture and Training Protocol

EZSpecificity employs a hybrid neural architecture combining Geometric Graph Neural Networks (GNNs) for structure and Transformers for sequence.

Diagram 1: EZSpecificity Model Architecture

Protocol 3.1: Multi-Task Model Training

- Objective: Minimize a combined loss function: L_total = L_EC + λ1 * L_Km + λ2 * L_contrastive.

- Hardware: Training is conducted on NVIDIA A100 GPU clusters.

- Procedure:

  a. Initialization: Load pre-trained protein language model (e.g., ESM-2) weights for the sequence encoder.

  b. Batch Sampling: Construct mini-batches containing (Enzyme A, True Substrate, Positive Kinetic Data) and (Enzyme A, Decoy Substrate, Negative Label).

  c. Forward Pass: Compute embeddings and predictions for all tasks.

  d. Loss Calculation:

     * L_EC: Cross-entropy loss for EC number classification.

     * L_Km: Mean squared logarithmic error for kinetic parameter regression.

     * L_contrastive: Metric learning loss that minimizes distance between true enzyme-substrate pair embeddings and maximizes for decoy pairs.

  e. Backward Pass & Optimization: Use AdamW optimizer with gradient clipping.

- Validation: Monitor performance on a held-out validation set of recently solved enzyme structures not present in training data.

- Regularization: Employ dropout (rate=0.1) on all fusion layers and stochastic depth during training.
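The combined objective in this protocol can be written out numerically. The sketch below evaluates the three loss terms on a single toy example in plain Python. The margin-based form of the contrastive term, the margin value, and the λ weights are assumptions made for illustration; the text does not specify them.

```python
import math

def cross_entropy(class_probs, true_index):
    """Cross-entropy for one example given predicted class probabilities."""
    return -math.log(class_probs[true_index])

def msle(predicted, observed):
    """Mean squared logarithmic error over paired kinetic values."""
    terms = [(math.log1p(p) - math.log1p(o)) ** 2 for p, o in zip(predicted, observed)]
    return sum(terms) / len(terms)

def margin_contrastive(dist_true, dist_decoy, margin=1.0):
    """Assumed triplet-style term: penalize true pairs that are not closer
    than decoy pairs by at least `margin` in embedding space."""
    return max(0.0, dist_true - dist_decoy + margin)

def total_loss(l_ec, l_km, l_con, lam1=0.5, lam2=0.1):
    """L_total = L_EC + lam1 * L_Km + lam2 * L_contrastive (lam values are placeholders)."""
    return l_ec + lam1 * l_km + lam2 * l_con

l_ec = cross_entropy([0.7, 0.2, 0.1], true_index=0)
l_km = msle(predicted=[50.0], observed=[50.0])
l_con = margin_contrastive(dist_true=0.4, dist_decoy=0.9)
loss = total_loss(l_ec, l_km, l_con)
print(f"L_EC={l_ec:.4f}, L_Km={l_km:.4f}, L_contrastive={l_con:.4f}, L_total={loss:.4f}")
```

In an actual training loop these terms would be computed on tensors by a deep learning framework so that gradients can flow to the encoders; the scalar version here only shows how the pieces combine.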

Experimental Validation Protocol

Protocol 4.1: In Silico Benchmarking of EZSpecificity Predictions

- Objective: Validate the algorithm's predictions against experimental mutagenesis data.

- Input: A target enzyme of interest (wild-type sequence and structure).

- Prediction Phase: Use EZSpecificity to score a library of potential substrate candidates and map predicted specificity-determining residues.

- Mutation Simulation: In silico generate point mutation variants (e.g., Ala-scan of active site) at predicted key residues.

- Analysis: Compare the algorithm's predicted change in substrate binding affinity (ΔΔG) for each mutant to experimentally determined values from literature. Calculate Pearson correlation coefficient (target: R > 0.7).
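The correlation check in the Analysis step is a one-function calculation. The sketch below computes the Pearson coefficient between predicted and experimental ΔΔG values; the numbers are invented placeholders, not results from the tool or the literature.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Placeholder ΔΔG values (kcal/mol) for four hypothetical Ala-scan mutants.
predicted_ddg = [0.5, 1.2, 2.0, 0.1]
experimental_ddg = [0.7, 1.0, 2.4, 0.2]
r = pearson_r(predicted_ddg, experimental_ddg)
print(f"Pearson r = {r:.2f}; meets the 0.7 target: {r > 0.7}")
```

With only a handful of mutants the coefficient is very sensitive to single points, so the target threshold is more meaningful on a larger mutant panel.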

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Computational & Experimental Reagents for Validation

| Reagent / Tool | Provider / Source | Function in EZSpecificity Context |

|---|---|---|

| Rosetta Flex ddG | RosettaCommons | Provides benchmark computational ΔΔG values for algorithm comparison via backrub conformational sampling and Rosetta energy function scoring. |

| Enzyme Activity Assay Kit (Fluorometric) | Sigma-Aldrich, Cayman Chemical | Used for in vitro validation of AI-predicted novel enzyme-substrate pairs using standardized kinetic protocols. |

| Site-Directed Mutagenesis Kit | NEB Q5 Site-Directed Mutagenesis Kit | Enables experimental testing of AI-predicted specificity residues by constructing precise enzyme mutants. |

| Crystallization Screen Kits | Hampton Research, Molecular Dimensions | For structural validation of predicted binding modes; used to obtain co-crystal structures of enzyme with AI-proposed substrates. |

| AlphaFold2 Protein Structure Prediction | DeepMind, Local Installation | Generates reliable structural models for enzymes lacking experimental structures, expanding the input scope for EZSpecificity. |

Diagram 2: Experimental Validation Workflow

Application Note 1: Deorphaning Enzymes of Unknown Function

Context: A core challenge in genomics is the abundance of predicted enzyme-encoding genes with no known substrate, limiting pathway elucidation and biocatalyst development. EZSpecificity AI addresses this by predicting high-probability substrates for orphan enzymes.

Protocol: In Silico Substrate Prediction & In Vitro Validation

Step 1: AI-Driven Prediction

- Input the amino acid sequence of the orphan enzyme into the EZSpecificity AI platform.

- The tool's deep learning model, trained on a curated dataset of enzyme-substrate pairs, scans its molecular fingerprint library.

- Output: A ranked list of top 10 predicted natural substrate candidates with associated prediction confidence scores (0-1 scale).

Step 2: In Vitro Assay Design

- Procure or synthesize the top 3 predicted substrates.

- Clone, express, and purify the orphan enzyme using a standard heterologous expression system (e.g., E. coli).

- Design a continuous coupled assay or use direct metabolite detection (e.g., via LC-MS) to measure product formation.

Step 3: Kinetic Characterization

- Perform Michaelis-Menten kinetics for each confirmed substrate.

- Quantify catalytic efficiency (kcat/Km) to validate the primary physiological substrate.

Data Presentation:

Table 1: EZSpecificity AI Predictions & Validation for Orphan Hydrolase EUF123

| Rank | Predicted Substrate | Confidence Score | Experimental Activity (Y/N) | kcat (s⁻¹) | Km (µM) | kcat/Km (M⁻¹s⁻¹) |

|---|---|---|---|---|---|---|

| 1 | N-Acetyl-β-D-glucosamine-6P | 0.94 | Yes | 12.5 ± 0.8 | 45.2 ± 5.1 | 2.77 x 10⁵ |

| 2 | D-Glucosamine-6-phosphate | 0.87 | Yes (Weak) | 0.9 ± 0.1 | 120.3 ± 15.7 | 7.48 x 10³ |

| 3 | N-Acetylneuraminic acid | 0.79 | No | - | - | - |
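The kcat/Km column follows from the kcat and Km columns once Km is converted from µM to M. The short sketch below reproduces the two entries of the table above from their mean values (uncertainties are not propagated).

```python
def catalytic_efficiency(kcat_per_s, km_micromolar):
    """kcat/Km in M^-1 s^-1, with Km supplied in µM."""
    return kcat_per_s / (km_micromolar * 1e-6)

rank1 = catalytic_efficiency(12.5, 45.2)    # N-Acetyl-β-D-glucosamine-6P
rank2 = catalytic_efficiency(0.9, 120.3)    # D-Glucosamine-6-phosphate
print(f"Rank 1: {rank1:.2e} M^-1 s^-1")
print(f"Rank 2: {rank2:.2e} M^-1 s^-1")
print(f"Efficiency ratio (rank 1 / rank 2): {rank1 / rank2:.0f}")
```

The ratio of the two efficiencies, about 37-fold, is what supports calling the rank 1 compound the preferred substrate.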

Application Note 2: Screening for Off-Target Hydrolysis in Prodrug Design

Context: Prodrugs are often activated by specific enzymes (e.g., phosphatases, esterases). Unintended hydrolysis by off-target enzymes can lead to toxicity or reduced efficacy. EZSpecificity AI enables proactive screening of prodrug candidates against a panel of human metabolic enzymes.

Protocol: Off-Target Liability Assessment

Step 1: Prodrug Candidate Profiling

- Input the SMILES string of the prodrug molecule into EZSpecificity AI.

- Select the "Human Metabolic Enzyme" model library, focusing on serine hydrolases, phosphatases, and cytochrome P450s.

- Output: A risk matrix identifying enzymes with high predicted binding affinity for the prodrug scaffold.

Step 2: Competitive Activity Assay

- Source recombinant human enzymes identified as high-risk (e.g., hCES1, hCES2, AADAC).

- In parallel assays, incubate each enzyme with its canonical fluorogenic substrate (control) and in the presence of increasing concentrations of the prodrug candidate.

- Measure the decrease in fluorescence signal to determine IC₅₀ values for inhibition of canonical substrate turnover.

Step 3: Direct Hydrolysis Confirmation (LC-MS/MS)

- Incubate the prodrug with high-risk enzymes in a direct assay, without the competing canonical substrate.

- Use LC-MS/MS to detect and quantify the release of the active drug moiety over time.

- Calculate off-target hydrolysis rates.

Data Presentation:

Table 2: Off-Target Screening for Prodrug Candidate PD-456

| High-Risk Enzyme (Human) | Predicted Affinity | IC₅₀ vs. Canonical Substrate (µM) | Observed Hydrolysis Rate (pmol/min/µg) |

|---|---|---|---|

| Carboxylesterase 1 (hCES1) | High | 12.3 ± 2.1 | 450.6 ± 32.7 |

| Carboxylesterase 2 (hCES2) | Medium | 185.5 ± 25.4 | 15.2 ± 3.1 |

| Arylacetamide deacetylase (AADAC) | Low | >500 | N.D. |

| Target Enzyme (hPON1) | Very High | 0.8 ± 0.2 | 3102.0 ± 210.5 |

Visualizations

Diagram 1: EZSpecificity AI Substrate Prediction Workflow

Diagram 2: Prodrug Off-Target Screening Pathway

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Featured Protocols

| Item | Function in Protocol | Example Product/Source |

|---|---|---|

| Recombinant Enzyme Systems | Source of pure, active enzyme for in vitro validation. | Thermo Fisher Pierce HiTag Expression System; Baculovirus-infected insect cells (e.g., Sf9). |

| Fluorogenic/Chromogenic Substrate Kits | Enable continuous, high-throughput activity assays for common enzyme classes (hydrolases, kinases). | Thermo Fisher EnzChek (phosphatases/esterases); Promega Kinase-Glo. |

| LC-MS/MS Metabolomics Platform | Gold-standard for definitive identification and quantification of substrate depletion/product formation. | Agilent 6495C Triple Quadrupole LC/MS; Sciex QTRAP systems. |

| Curated Enzyme Database Access | Provides ground-truth data for model training and benchmarking predictions. | BRENDA, UniProt, Rhea. |

| Structural Biology Suite | For visualizing predicted enzyme-ligand interactions and guiding mutagenesis studies. | Schrödinger Maestro; PyMOL; RosettaCommons. |

| High-Performance Computing (HPC) Cluster | Runs the deep learning models of EZSpecificity AI for large-scale virtual screening. | Local GPU clusters (NVIDIA DGX); Cloud services (AWS, GCP). |

A Step-by-Step Guide: How to Use EZSpecificity AI in Your Research Pipeline

Within the broader thesis on EZSpecificity AI tool development for enzyme-substrate matching, the quality and preparation of input data are paramount. This document outlines standardized protocols and best practices for curating protein sequence datasets and small-molecule compound libraries to ensure robust, reproducible, and biologically relevant AI model training and validation.

Protein Sequence Data Curation

Objective: To assemble a comprehensive, non-redundant, and functionally annotated set of protein sequences for training models to predict enzyme specificity.

Protocol 1.1: Retrieval and Redundancy Reduction

- Source Databases: Query UniProtKB, PDB, and BRENDA using specific EC numbers or protein family keywords (e.g., "serine protease," "kinase").

- Filtering: Apply filters for `reviewed:true` (Swiss-Prot), organism of interest, and minimal sequence length (e.g., >50 amino acids).

- Redundancy Reduction: Use CD-HIT at a 90% sequence identity threshold to create a non-redundant set. This balances diversity with computational efficiency.

- Annotation Extraction: Parse associated gene ontology (GO) terms, catalytic site annotations, and known substrate information from the database records.

Table 1: Key Protein Sequence Databases for AI-Driven Specificity Research

| Database | Primary Use in Curation | Key Metadata to Extract |

|---|---|---|

| UniProtKB/Swiss-Prot | High-quality, manually annotated sequences. | EC number, GO terms, active site residues, known substrates/inhibitors. |

| Protein Data Bank (PDB) | Structures for structure-aware featurization. | Ligand-bound structures, catalytic residue positions, resolution. |

| BRENDA | Comprehensive enzyme functional data. | Substrate specificity profiles, kinetic parameters (Km, kcat). |

| Pfam / InterPro | Protein family classification. | Domain architecture, family membership. |

Protocol 1.2: Multiple Sequence Alignment (MSA) and Feature Generation

- Tool: Use ClustalOmega or MAFFT to generate MSA for sequences within the same family or EC class.

- Purpose: MSAs are critical for deriving position-specific scoring matrices (PSSMs) and conservation metrics, which are powerful input features for specificity prediction.

- Feature Extraction: Use the bio3d R package or Biopython to calculate per-position conservation scores (e.g., Shannon entropy) and generate PSSMs.
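The per-position conservation score mentioned in Protocol 1.2 can be illustrated without any external package. The following is a minimal sketch, assuming the MSA is already available as equal-length aligned strings and that gap characters are excluded from the frequency counts; the function names and the toy alignment are illustrative and are not part of bio3d or Biopython.

```python
import math
from collections import Counter


def column_entropy(column, ignore_gaps=True):
    """Shannon entropy (in bits) of one alignment column; 0.0 means fully conserved."""
    residues = [r for r in column if not (ignore_gaps and r == "-")]
    if not residues:
        return 0.0
    total = len(residues)
    counts = Counter(residues)
    # p * log2(1/p) summed over observed residues
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def msa_conservation(aligned_seqs):
    """Per-position entropy for a list of equal-length aligned sequences."""
    if len({len(s) for s in aligned_seqs}) != 1:
        raise ValueError("All aligned sequences must have the same length")
    return [column_entropy(col) for col in zip(*aligned_seqs)]


# Toy alignment (hypothetical sequences, for illustration only)
toy_msa = ["GDSGG", "GDSAG", "GDTGG", "GD-GG"]
entropies = msa_conservation(toy_msa)
for position, h in enumerate(entropies, start=1):
    print(f"position {position}: {h:.3f} bits")
```

Low-entropy columns are the conserved positions; whether to weight sequences or add pseudocounts before computing entropy is a design choice this sketch leaves out.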

Title: Protein Sequence Curation and Feature Generation Workflow

Compound Library Preparation

Objective: To prepare a chemically diverse, accurately represented, and readily screenable library of small molecules for substrate or inhibitor prediction.

Protocol 2.1: Library Sourcing and Standardization

- Sources: Utilize public repositories like PubChem, ZINC, ChEMBL, or proprietary corporate libraries.

- Standardization: Use RDKit or OpenBabel to:

- Neutralize charges on carboxylates and amines.

- Remove salts, solvents, and metal atoms.

- Generate canonical SMILES and tautomerize to a representative form.

- Enforce chemical validity (e.g., correct valency).

- Descriptor Calculation: Compute molecular fingerprints (e.g., Morgan/ECFP4) and physicochemical descriptors (LogP, molecular weight, TPSA) for diversity analysis and model input.

Table 2: Essential Molecular Descriptors for Compound Featurization

| Descriptor Class | Example Metrics | Relevance to Specificity |

|---|---|---|

| Topological | Morgan Fingerprints (ECFP4), MACCS Keys | Captures functional groups & pharmacophores critical for binding. |

| Physicochemical | Molecular Weight, LogP, Topological Polar Surface Area (TPSA) | Influences bioavailability and passive membrane permeability. |

| Quantum Chemical | Partial Charges, HOMO/LUMO Energies (if applicable) | Describes electronic properties for catalytic interactions. |

| 3D Conformational | Pharmacophore Features, Shape-Based Descriptors | Requires energy-minimized 3D structures; critical for docking. |

Protocol 2.2: Activity Data Integration and Curation

- Source Integration: Merge bioactivity data (IC50, Ki, Kd) from ChEMBL, PubChem BioAssay, or internal HTS.

- Thresholding: Define active/inactive labels based on biologically relevant thresholds (e.g., IC50 < 10 µM for actives).

- Deduplication: Resolve conflicts from multiple sources by taking the geometric mean of replicate measurements or prioritizing data from more reliable assays.
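The thresholding and deduplication steps of Protocol 2.2 can be sketched as follows. This is a minimal illustration, assuming records arrive as (compound ID, target ID, IC50 in µM) tuples; the record layout, function names, and example values are assumptions made for the sketch, and the 10 µM cutoff is the example threshold given in the protocol.

```python
import math
from collections import defaultdict

ACTIVE_THRESHOLD_UM = 10.0  # example cutoff from the protocol (IC50 < 10 µM = active)


def geometric_mean(values):
    """Geometric mean of strictly positive values."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def curate_activities(records, threshold_um=ACTIVE_THRESHOLD_UM):
    """Merge replicate IC50 values per (compound, target) pair and assign labels."""
    grouped = defaultdict(list)
    for compound_id, target_id, ic50_um in records:
        if ic50_um > 0:  # non-positive values cannot be log-averaged; skip them
            grouped[(compound_id, target_id)].append(ic50_um)

    curated = {}
    for key, values in grouped.items():
        merged = geometric_mean(values)
        curated[key] = {
            "ic50_um": merged,
            "active": merged < threshold_um,
            "n_measurements": len(values),
        }
    return curated


# Hypothetical measurements merged from two sources
records = [
    ("CPD-1", "P00533", 1.0),
    ("CPD-1", "P00533", 4.0),
    ("CPD-2", "P00533", 50.0),
]
curated = curate_activities(records)
```

The geometric mean is used because IC50 values are roughly log-normally distributed, so averaging in log space avoids letting a single large value dominate.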

Title: Compound Library Standardization and Annotation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for Data Preparation

| Item / Solution | Function in Data Preparation |

|---|---|

| RDKit | Open-source cheminformatics toolkit for molecular standardization, descriptor calculation, and fingerprint generation. |

| Biopython | Python library for parsing sequence data (FASTA, GenBank), performing BLAST searches, and handling MSAs. |

| CD-HIT Suite | Tool for rapid clustering of protein or nucleotide sequences to reduce redundancy and dataset size. |

| ClustalOmega / MAFFT | Software for generating high-quality Multiple Sequence Alignments, essential for evolutionary feature extraction. |

| KNIME or Pipeline Pilot | Visual workflow platforms to automate, document, and reproduce complex data curation pipelines. |

| ChEMBL / PubChem Power User Gateway (PUG) | APIs for programmatic access to vast, annotated bioactivity and compound structure data. |

| Docker / Singularity | Containerization tools to ensure all software dependencies and versioning remain consistent across research teams. |

Meticulous preparation of protein and compound data, as per the protocols above, forms the foundational step in developing reliable EZSpecificity AI models. Standardized curation ensures that predictive outputs for enzyme-substrate matching are derived from high-quality, reproducible inputs, directly contributing to the acceleration of hypothesis-driven enzyme engineering and drug discovery projects.

EZSpecificity is an AI-powered computational tool designed to predict enzyme-substrate interactions with high precision, a critical challenge in enzymology and drug development. This protocol details the procedure for executing a standard prediction using both the web interface and the programmatic API. The generated predictions serve as primary data for validation experiments within a broader thesis investigating AI-driven substrate matching for novel kinase and protease targets.

Web Interface Walkthrough

Access and Initial Setup

- Navigate to the official EZSpecificity portal (https://ezspecificity.ai).

- Authenticate using institutional credentials or a registered API key.

- From the main dashboard, select "New Standard Prediction."

Input Parameter Configuration

The prediction form requires the following inputs, structured into two primary sections:

Table 1: Mandatory Input Parameters for Standard Prediction

| Parameter | Data Type | Allowed Values/Format | Description & Purpose |

|---|---|---|---|

| Enzyme ID | String | UniProt KB Accession (e.g., P00533) | Unique identifier for the enzyme query. Ensures specificity. |

| Substrate Library | Selection | Kinase_Phosphosite_Plus_v2023, Protease_MEROPS_v12, Custom_Upload | Defines the substrate search space for the AI model. |

| Prediction Mode | Radio Button | High-Throughput (Fast), High-Accuracy (Detailed) | Balances computational speed versus predictive depth. |

| Confidence Threshold | Float | 0.50 - 0.95 (Default: 0.75) | Filters results to return only predictions above the set probability score. |

Workflow for Custom Substrate Upload:

- Select Custom_Upload as the Substrate Library.

- Upload a .csv file with columns: Substrate_ID, Amino_Acid_Sequence.

- Ensure sequences are in standard one-letter amino acid code, 6-50 residues in length.

- Click Validate to check for format compliance.
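Checking a custom substrate file locally before upload can save a failed validation round trip. The sketch below mirrors the stated requirements (the two column names, standard one-letter code, 6-50 residues); it is not the portal's own validator, and the function name and error wording are illustrative assumptions.

```python
import csv
import io

STANDARD_AA = set("ACDEFGHIKLMNPQRSTVWY")
REQUIRED_COLUMNS = ("Substrate_ID", "Amino_Acid_Sequence")


def validate_substrate_csv(text):
    """Return a list of problems found in the CSV text; an empty list means it passes."""
    reader = csv.DictReader(io.StringIO(text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return [f"missing column(s): {', '.join(missing)}"]

    problems = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        substrate_id = (row["Substrate_ID"] or "").strip()
        sequence = (row["Amino_Acid_Sequence"] or "").strip().upper()
        if not substrate_id:
            problems.append(f"line {line_no}: empty Substrate_ID")
        if not 6 <= len(sequence) <= 50:
            problems.append(f"line {line_no}: length {len(sequence)} outside 6-50")
        invalid = sorted(set(sequence) - STANDARD_AA)
        if invalid:
            problems.append(f"line {line_no}: non-standard residues {invalid}")
    return problems


example = "Substrate_ID,Amino_Acid_Sequence\nS1,LVEPLTPSGEAPNQ\nS2,AXB\n"
for problem in validate_substrate_csv(example):
    print(problem)
```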

Job Submission and Result Retrieval

- Click "Run Prediction." A unique Job ID (e.g., EZP-2024-08765) is generated.

- The interface redirects to a Results Queue. Typical processing time is 4-7 minutes for High-Throughput mode.

- Upon completion, click the Job ID to view the Interactive Results Dashboard.

Interpretation of Web Output

The dashboard presents:

- Summary Panel: Top 5 predicted substrates ranked by Prediction_Score.

- Detailed Table: Downloadable .tsv file of all results.

- Visualization: A 2D projection of the enzyme and substrates in the model's latent space.

Table 2: Key Fields in Results Table (.tsv)

| Field Name | Unit/Format | Interpretation |

|---|---|---|

| Rank | Integer | Hierarchical position based on integrated score. |

| Predicted_Substrate | String | Substrate protein/gene name. |

| Prediction_Score | Float (0-1) | Model's confidence in the match. >0.85 is high confidence. |

| Energetic_Complementarity | kcal/mol | Calculated ΔG of binding (in silico). |

| Conservation_Z-Score | Unitless | Evolutionary conservation of the binding motif. |

API Walkthrough

Authentication and Environment Setup

Submitting a Prediction Job via API

Polling for Results and Handling Output

Experimental Protocol for In Vitro Validation of AI Prediction

This protocol details the kinase activity assay used to validate a top-ranked substrate prediction from EZSpecificity.

Title: In Vitro Kinase Radiometric Assay for Substrate Validation

Principle: Measurement of γ-32P phosphate transfer from [γ-32P]ATP to the predicted peptide substrate.

Reagents & Materials:

Table 3: Research Reagent Solutions for Kinase Assay

| Reagent/Material | Supplier (Cat. #) | Function in Assay |

|---|---|---|

| Recombinant Kinase (e.g., EGFR) | SignalChem (E3110) | Enzyme catalyst for phosphorylation reaction. |

| Predicted Peptide Substrate | GenScript (Custom synthesis) | AI-identified target for phosphorylation. |

| [γ-32P]ATP (10 mCi/mL) | PerkinElmer (NEG002Z) | Radioactive phosphate donor for sensitive detection. |

| Kinase Assay Buffer (10X) | Cell Signaling Tech (#9802) | Provides optimal pH, ionic strength, and cofactors (Mg2+). |

| P81 Phosphocellulose Paper | Merck (Z690791) | Binds phosphorylated peptides selectively for separation. |

| 1% Phosphoric Acid Solution | Sigma-Aldrich (345245) | Washes unincorporated [γ-32P]ATP from P81 paper. |

| Scintillation Cocktail | PerkinElmer (6013199) | Emits light when exposed to radioactive decay for quantitation. |

| Liquid Scintillation Counter | Beckman Coulter (LS6500) | Instrument to measure scintillation counts per minute (CPM). |

Procedure:

- Reaction Setup: In a 1.5 mL microtube, combine:

- 2 µL 10X Kinase Assay Buffer (1X final)

- 1 µg Recombinant Kinase (in 2 µL storage buffer)

- 10 µg Predicted Peptide Substrate (in 5 µL dH2O)

- 10 µCi [γ-32P]ATP (diluted to 10 µM with cold ATP)

- Nuclease-free water to 20 µL final volume.

- Incubation: Mix gently. Incubate at 30°C for 15 minutes.

- Termination & Capture: Spot 15 µL of reaction mixture onto a 2x2 cm P81 phosphocellulose paper square. Immediately immerse in 1 L of ice-cold 1% phosphoric acid.

- Washing: Wash papers 3x for 5 minutes each in 1% phosphoric acid with gentle stirring to remove unbound radioactivity.

- Detection: Rinse papers in acetone for 1 minute. Air-dry. Place each paper in a scintillation vial with 5 mL scintillation cocktail. Count radioactivity in a scintillation counter for 1 minute.

- Controls: Include reactions without enzyme (background) and with a known positive control substrate.

Data Analysis:

- Subtract average background CPM from sample CPM.

- Calculate phosphorylation activity as pmol phosphate transferred per minute per mg enzyme.
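The data analysis steps reduce to a short unit conversion. The sketch below uses the volumes, time, and enzyme amount from the procedure (20 µL reaction, 15 µL spotted, 15 minutes, 1 µg kinase) as defaults. It assumes the specific radioactivity of the ATP mix (CPM per pmol ATP) has been measured separately, for example by counting a known aliquot of the reaction mix; that step, the function name, and the example counts are assumptions not stated in the protocol.

```python
def specific_activity(sample_cpm, background_cpm, atp_cpm_per_pmol,
                      reaction_ul=20.0, spotted_ul=15.0,
                      minutes=15.0, enzyme_ug=1.0):
    """Phosphorylation activity in pmol phosphate per minute per mg enzyme."""
    net_cpm = sample_cpm - background_cpm            # subtract no-enzyme background
    pmol_spotted = net_cpm / atp_cpm_per_pmol        # phosphate on the P81 square
    pmol_total = pmol_spotted * (reaction_ul / spotted_ul)  # scale to full reaction
    enzyme_mg = enzyme_ug / 1000.0
    return pmol_total / (minutes * enzyme_mg)


# Hypothetical counts: 30,500 CPM sample, 500 CPM background, 500 CPM/pmol ATP
activity = specific_activity(30500, 500, 500)
print(f"{activity:.0f} pmol/min/mg")
```

With these example numbers, 30,000 net CPM corresponds to 60 pmol on the paper and 80 pmol in the full reaction, which works out to roughly 5,333 pmol/min/mg.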

Visualizations

Title: EZSpecificity Prediction and Validation Workflow

Title: EZSpecificity AI Model Architecture

Within the context of enzyme-substrate matching research using the EZSpecificity AI tool, rigorous interpretation of computational and experimental outputs is critical. This protocol details the application of scoring metrics, the calculation of confidence intervals, and the generation of interaction maps to translate model predictions into actionable biological insights for drug development.

Quantitative Scoring Metrics for EZSpecificity AI Predictions

The EZSpecificity AI tool generates multiple scores to evaluate potential enzyme-substrate pairs. The following table summarizes the core metrics.

Table 1: Key Scoring Metrics from EZSpecificity AI Output

| Metric | Scale/Range | Interpretation | Biological/Computational Basis |

|---|---|---|---|

| Specificity Score (Sspec) | 0.0 to 1.0 | Probability that the predicted interaction is true versus a random pairing. | Derived from a trained ensemble model comparing the input pair against negative decoys in the latent feature space. |

| Free Energy of Binding (ΔG) | kcal/mol (typically negative) | Estimated thermodynamic favorability of the complex formation. | Calculated using a hybrid physics-based and machine-learned scoring function on the docked pose. |

| Complementarity Index (CI) | 0 to 100 | Geometric and electrostatic surface complementarity of the predicted binding interface. | Computed from the 3D aligned model; values >70 indicate high steric and charge compatibility. |

| Evolutionary Conservation Score | 0.0 to 1.0 | Conservation of predicted binding site residues across homologous enzymes. | Derived from multiple sequence alignment; high scores suggest functionally critical interactions. |

| Model Confidence (pLDDT) | 0 to 100 (per-residue) | Per-residue confidence in the predicted local structure. | From the AlphaFold2 engine within EZSpecificity; >90 = very high, 70-90 = confident, 50-70 = low, <50 = very low. |

Protocol: Calculating and Interpreting Confidence Intervals

Purpose

To quantify the statistical uncertainty in EZSpecificity AI's primary prediction scores, particularly the Specificity Score (Sspec) and ΔG, using bootstrapping methods.

Materials & Reagent Solutions

Table 2: Research Reagent Solutions for Validation

| Item | Function in Protocol |

|---|---|

| EZSpecificity AI Software Suite (v2.1+) | Core prediction engine for generating initial scores and structural models. |

| High-Performance Computing Cluster | For running extensive bootstrap sampling simulations. |

| Python/R Statistical Environment (with SciPy/ggplot2) | For implementing bootstrap algorithms and plotting CIs. |

| Reference Dataset (e.g., BRENDA, PDB) | Gold-standard positive/negative controls for validation of interval coverage. |

| Enzymatic Assay Buffer Kit (in vitro validation) | For experimental kinetic validation of top-scoring predictions. |

Detailed Protocol

- Input Preparation: For the enzyme-substrate pair of interest, run the standard EZSpecificity prediction pipeline to obtain the initial set of scores and the predicted 3D interaction complex.

- Bootstrap Resampling: Using the tool's API, execute the following loop for N=1000 iterations: a. Randomly sample (with replacement) the neural network's latent feature vectors that contributed to the final prediction. b. Perturb the input sequence embeddings within the range of estimated model error. c. Recalculate the Sspec and ΔG for the pair in this resampled state. d. Store the resulting values.

- Interval Calculation: Sort the 1000 bootstrapped Sspec values. The 95% Confidence Interval (CI) is defined as the 2.5th percentile to the 97.5th percentile of this distribution. Repeat for ΔG values.

- Interpretation: A narrow CI (e.g., Sspec = 0.87 [0.85, 0.89]) indicates a robust prediction insensitive to model perturbations. A wide CI (e.g., Sspec = 0.65 [0.45, 0.82]) suggests higher uncertainty, possibly due to low homology or ambiguous features.

- Experimental Triangulation: Prioritize pairs with high median Sspec AND narrow CIs for in vitro validation. Use the CI range for ΔG to inform the expected potency in kinetic assays.
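The interval calculation in this protocol is a percentile bootstrap. A minimal sketch follows, assuming the resample, perturb, and rescore steps are wrapped in a single callable; `rescore` and `fake_rescore` are hypothetical stand-ins for that step and do not represent the tool's actual API.

```python
import random


def percentile(sorted_vals, q):
    """Linearly interpolated percentile (q in [0, 100]) of a pre-sorted list."""
    if not sorted_vals:
        raise ValueError("need at least one value")
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def bootstrap_ci(rescore, n_iter=1000, alpha=0.05, seed=0):
    """Percentile CI from n_iter calls to rescore(rng), one call per bootstrap replicate."""
    rng = random.Random(seed)
    values = sorted(rescore(rng) for _ in range(n_iter))
    return (percentile(values, 100 * alpha / 2),
            percentile(values, 100 * (1 - alpha / 2)))


def fake_rescore(rng):
    """Stand-in for one resampled Sspec value (hypothetical noise model)."""
    return min(1.0, max(0.0, rng.gauss(0.87, 0.01)))


low, high = bootstrap_ci(fake_rescore)
print(f"Sspec 95% CI: [{low:.2f}, {high:.2f}]")
```

The same function can be reused for ΔG by passing a callable that returns the recalculated binding energy for each replicate.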

Diagram 1: CI Calculation and Decision Workflow

Protocol: Generating and Analyzing Interaction Maps

Purpose

To visualize and quantify the physicochemical forces driving the predicted enzyme-substrate interaction, transforming a 3D model into an analyzable network of contacts.

Detailed Protocol

- Model Acquisition: Input the PDB-format file of the EZSpecificity-predicted enzyme-substrate complex into the interaction mapping module.

- Contact Detection: The algorithm identifies all enzyme residues within 5Å of the substrate. For each contact residue, it calculates:

- Van der Waals (vdW) contribution: Using a Lennard-Jones potential.

- Electrostatic contribution: Using Coulomb's law with a distance-dependent dielectric.

- Hydrogen bonds: Distance (<3.5Å) and angle (>120°) criteria.

- Hydrophobic contacts: Via non-polar atom proximity.

- Map Generation: Two maps are created: a. Spatial Map: A 2D projection of the binding site with residues color-coded by interaction type and strength (see diagram). b. Network Map: A graph where nodes are enzyme residues and substrate atoms, and edges are weighted by interaction energy.

- Hotspot Analysis: Identify "hub" residues contributing >5 kcal/mol to the total ΔG. These are prime targets for mutagenesis in follow-up experiments.

- Cross-Reference: Overlay the interaction map with the per-residue pLDDT confidence map. Low-confidence residues in critical contact positions warrant skepticism.
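The geometric criteria in the contact detection step (5 Å cutoff, hydrogen bond distance below 3.5 Å and angle above 120°) can be sketched with plain coordinate tuples. The protocol does not say which atoms define the hydrogen bond distance and angle; the sketch assumes donor-to-acceptor distance and the donor-hydrogen-acceptor angle, and the atom records and coordinates are hypothetical.

```python
import math


def contact_residues(enzyme_atoms, substrate_atoms, cutoff=5.0):
    """Residue IDs with at least one atom within `cutoff` Å of any substrate atom.

    enzyme_atoms: list of (residue_id, (x, y, z)); substrate_atoms: list of (x, y, z).
    """
    hits = set()
    for residue_id, xyz in enzyme_atoms:
        if any(math.dist(xyz, s) <= cutoff for s in substrate_atoms):
            hits.add(residue_id)
    return sorted(hits)


def angle_deg(a, b, c):
    """Angle at vertex b formed by points a-b-c, in degrees."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.hypot(*v1) * math.hypot(*v2)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def is_hbond(donor, hydrogen, acceptor, max_dist=3.5, min_angle=120.0):
    """Assumed convention: donor-acceptor distance and donor-H-acceptor angle."""
    return (math.dist(donor, acceptor) < max_dist
            and angle_deg(donor, hydrogen, acceptor) > min_angle)


# Hypothetical coordinates in Å
enzyme = [("ASP189", (0.0, 0.0, 0.0)), ("PHE360", (10.0, 0.0, 0.0))]
substrate = [(3.0, 0.0, 0.0)]
print(contact_residues(enzyme, substrate))
```

A production pipeline would read atoms from the PDB file and use a spatial index rather than the all-pairs loop shown here.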

Diagram 2: Interaction Map Generation Pipeline

Integrated Interpretation Workflow

For a comprehensive assessment of an EZSpecificity prediction:

- Check the Metrics: Confirm Sspec > 0.7 and ΔG < -5.0 kcal/mol as primary filters.

- Assess Uncertainty: Examine the 95% CI. Proceed if the lower bound of Sspec remains >0.6.

- Visualize the Interface: Generate the interaction map. Verify that high-confidence (pLDDT >70) enzyme residues mediate the strongest contacts.

- Identify Hotspots: Note specific residues (e.g., Catalytic Asp 189, hydrophobic Patch Phe 360) driving the interaction for experimental targeting.

- Contextualize: Compare predicted contacts with known catalytic mechanisms from literature for the enzyme family.
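The numeric checks in this workflow can be combined into one triage function. The sketch below encodes the stated thresholds (Sspec > 0.7, ΔG < -5.0 kcal/mol, CI lower bound > 0.6, pLDDT > 70). Representing "high-confidence residues mediate the strongest contacts" as the minimum pLDDT among the strongest contact residues is an assumption, as are the class and field names.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    s_spec: float             # Specificity Score
    s_spec_ci_low: float      # lower bound of the 95% CI on Sspec
    delta_g: float            # predicted binding free energy, kcal/mol
    min_contact_plddt: float  # lowest pLDDT among the strongest contact residues


def triage(p):
    """Apply the primary filters, then the uncertainty and structure checks."""
    if not (p.s_spec > 0.7 and p.delta_g < -5.0):
        return "reject: fails primary filters"
    if not p.s_spec_ci_low > 0.6:
        return "hold: confidence interval too wide"
    if not p.min_contact_plddt > 70:
        return "hold: low-confidence contact residues"
    return "proceed to hotspot analysis"


print(triage(Prediction(0.87, 0.85, -7.2, 88.0)))
print(triage(Prediction(0.65, 0.45, -6.0, 91.0)))
```

The final two steps, hotspot identification and comparison with the literature, remain manual judgments and are deliberately left out of the function.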

Introduction

This application note details a structured pipeline for integrating the EZSpecificity AI tool, a platform designed to predict enzyme-substrate pairings, with downstream experimental validation. The protocol is designed for researchers aiming to translate computational predictions from a broader enzyme-substrate matching thesis into confirmed biochemical activity, particularly in contexts like drug target validation and pathway analysis.

Application Note: Validation Pipeline for AI-Predicted Kinase-Substrate Pairs

EZSpecificity uses a multi-modal deep learning architecture trained on structural, sequence, and chemical descriptor data to score potential enzyme-substrate interactions. The following workflow is recommended for high-confidence validation of its top predictions.

Table 1: EZSpecificity Output Metrics and Interpretation for Validation Prioritization

| Output Metric | Range | Interpretation | Validation Action Tier |

|---|---|---|---|

| Prediction Score (PS) | 0.0 - 1.0 | Confidence in pairing; >0.85 indicates high confidence. | Tier 1: Immediate validation. |

| Structural Complementarity Index (SCI) | 0.0 - 1.0 | Geometric fit of predicted binding pose. | Prioritize pairs with SCI > 0.8. |

| Conservation Z-score | -3 to +3 | Evolutionary conservation of predicted interaction site. | Score >2 supports biological relevance. |

| Predicted ΔG of Binding (kcal/mol) | N/A | Estimated binding free energy from AI docking. | More negative values indicate stronger binding. |

Protocol 1: In Vitro Kinase Activity Assay

Objective: To biochemically validate an AI-predicted kinase-substrate pair.

Materials: Purified recombinant kinase, putative peptide substrate, ATP, reaction buffer, ADP-Glo Kinase Assay Kit.

Detailed Methodology:

- Peptide Design & Synthesis: Based on EZSpecificity's predicted interaction site, synthesize a 15-mer peptide substrate containing the predicted phospho-acceptor residue and flanking sequences. Include a scrambled-sequence peptide as a negative control.

- Reaction Setup: In a white 96-well plate, combine:

- 40 nM purified kinase.

- 10 µM peptide substrate (test or control).

- 10 µM ATP in 1X kinase reaction buffer.

- Final volume: 25 µL. Include no-kinase and no-substrate controls.

- Incubation & Detection: Incubate at 30°C for 60 minutes. Terminate the reaction by adding 25 µL of ADP-Glo Reagent. Incubate for 40 minutes, then add 50 µL of Kinase Detection Reagent. Incubate for 60 minutes.

- Quantification: Measure luminescence on a plate reader. A significant signal increase (≥3-fold over control peptides) indicates ADP generation and thus kinase activity towards the predicted substrate.

Protocol 2: Cellular Validation via Immunoprecipitation and Western Blot

Objective: To confirm the predicted interaction and phosphorylation event in a cellular context.

Materials: Cell line expressing the kinase of interest, transfection reagents, FLAG-tag expression vectors, lysis buffer, anti-FLAG M2 magnetic beads, phospho-specific antibody (predicted site).

Detailed Methodology:

- Plasmid Construction: Clone the gene for the predicted substrate into a mammalian expression vector with an N-terminal FLAG tag.

- Transfection & Stimulation: Co-transfect HEK293T cells with plasmids for the kinase and FLAG-substrate. After 48 hours, stimulate cells with relevant pathway activators for 15 minutes.

- Immunoprecipitation (IP): Lyse cells in NP-40 lysis buffer with phosphatase/protease inhibitors. Incubate 500 µg of total protein with anti-FLAG magnetic beads for 2 hours at 4°C. Wash beads 3x with TBS-T.

- Western Blot Analysis: Elute proteins from beads and resolve by SDS-PAGE. Transfer to PVDF membrane. Probe sequentially with:

- Phospho-specific antibody (primary) against the predicted phosphorylation site.

- HRP-conjugated secondary antibody.

- Develop using ECL. Strip and re-probe with anti-FLAG antibody to confirm total substrate levels.

The Scientist's Toolkit

| Research Reagent / Solution | Function in Validation Workflow |

|---|---|

| ADP-Glo Kinase Assay Kit | Enables luminescent, homogenous measurement of kinase activity by quantifying ADP production. |

| FLAG-M2 Magnetic Beads | Facilitates rapid, high-specificity immunoprecipitation of epitope-tagged proteins of interest. |

| Phospho-Specific Antibodies (Custom) | Critical for detecting site-specific phosphorylation events predicted by the AI model. |

| Protease/Phosphatase Inhibitor Cocktail | Preserves the native phosphorylation state of proteins during cell lysis and IP. |

| Recombinant Protein Purification System (e.g., His-tag) | Provides high-purity, active enzyme for in vitro biochemical assays. |

Visualization 1: Overall Validation Workflow

Diagram Title: AI-Driven Validation Pipeline from Prediction to Confirmation

Visualization 2: Key Signaling Pathway for a Validated Kinase-Substrate Pair

Diagram Title: Validated Kinase Substrate in PI3K-Akt Signaling Pathway

Application Notes

Within the broader thesis on EZSpecificity AI tool enzyme-substrate matching research, this case study demonstrates the application of AI-driven specificity prediction to accelerate the identification of selective lead compounds for a clinically relevant kinase target (e.g., AKT1). Traditional kinase inhibitor discovery is hindered by cross-reactivity due to the conserved ATP-binding site. Integrating EZSpecificity predictions with high-throughput screening (HTS) data allows for the prioritization of compounds with predicted high target specificity and favorable binding kinetics before costly experimental validation.

Table 1: Virtual Screening & AI Prioritization Output

| Compound Library Size | Initial HTS Hits | EZSpecificity-Filtered Candidates | Predicted Specificity Score Range (AKT1 vs. Off-Targets)* | Computational Time Saved |

|---|---|---|---|---|

| 500,000 compounds | 1,250 | 92 | 0.78 - 0.94 | ~6 weeks |

*Specificity score: 1.0 = perfect predicted selectivity for AKT1 over a panel of 98 human kinases.

Table 2: Experimental Validation of Top 10 Prioritized Candidates

| Compound ID | AKT1 IC₅₀ (nM) | Primary Off-Target (Kinase X) IC₅₀ (nM) | Selectivity Index (Kinase X / AKT1) | Cellular Potency (pIC₅₀) |

|---|---|---|---|---|

| AKT-i-01 | 4.2 | >10,000 | >2,380 | 8.1 |

| AKT-i-02 | 8.7 | 1,450 | 167 | 7.6 |

| AKT-i-03 | 15.3 | >10,000 | >653 | 7.3 |

| ... | ... | ... | ... | ... |

| Mean | 12.4 ± 5.1 | >7,650 | >1,050 | 7.6 ± 0.3 |

Research Reagent Solutions Toolkit

Table 3: Essential Materials for Kinase Inhibitor Profiling

| Item / Reagent | Function & Brief Explanation |

|---|---|

| Recombinant Human AKT1 Kinase (Active) | Catalytic domain for in vitro biochemical activity assays (activity read out as ADP production). |

| ADP-Glo Kinase Assay Kit | Luminescence-based assay to quantify ADP produced by kinase activity; enables high-throughput screening. |

| Kinase Inhibitor Library (e.g., Tocriscreen) | Curated collection of known kinase inhibitors for primary screening and validation. |

| Selectivity Screening Panel (e.g., 98-Kinase Panel) | Parallel profiling of compound activity across a broad kinase family to assess specificity experimentally. |

| Phospho-AKT Substrate (GSK-3β Fusion Protein) | Specific substrate for AKT1 used in in vitro kinase reaction assays. |

| HEK293 Cell Line with AKT Pathway Reporter | Cellular system for measuring compound efficacy and pathway inhibition in a physiologically relevant context. |

| EZSpecificity AI Software Suite | Machine learning platform predicting enzyme-substrate/inhibitor interactions based on structural and sequence fingerprints. |

Experimental Protocols

Protocol 1: AI-Powered Virtual Screening & Compound Prioritization

Objective: To filter a large compound library for candidates with high predicted specificity for AKT1.

- Input Preparation: Prepare molecular structure files (SDF or SMILES) for the entire compound library. Curate a positive control set of known AKT1 inhibitors and a negative set of compounds confirmed inactive against AKT1.

- EZSpecificity Analysis: Upload the library to the EZSpecificity platform. Run the "Kinase Specificity Prediction" module, using the pre-trained model for the human kinome. Key parameters: Use fingerprint_type=ECFP6, depth=512, and confidence_threshold=0.85.

- Data Output & Filtering: The platform returns a ranked list with predicted binding affinity (pKd) and a Specificity Score for AKT1 versus a defined off-target panel (e.g., PKA, PKC, CDK2). Apply filters: Specificity Score > 0.75, predicted pKd for AKT1 > 7.0 (Kd < 100 nM).

- ADMET Prediction: Subject the filtered list to in-silico ADMET profiling (e.g., using integrated QikProp). Filter for Lipinski's Rule of Five compliance and acceptable predicted hepatotoxicity.

- Final Candidate Selection: Visually inspect the top 100-150 compounds for chemical diversity and synthetic feasibility. Select the final 50-100 candidates for experimental biochemical screening.
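The filtering step of this protocol is a two-condition cut followed by ranking. A minimal sketch is shown below, assuming the ranked list has been exported as one dictionary per compound; the field names and example values are hypothetical and do not reflect the platform's export schema. The helper also makes the pKd-to-concentration conversion explicit.

```python
def pkd_to_nm(pkd):
    """Convert pKd (-log10 of Kd in molar) to Kd in nanomolar."""
    return 10 ** (9 - pkd)


def prioritize(rows, min_specificity=0.75, min_pkd=7.0):
    """Keep compounds passing both filters, best specificity first."""
    kept = [r for r in rows
            if r["specificity"] > min_specificity and r["pkd_akt1"] > min_pkd]
    return sorted(kept, key=lambda r: (r["specificity"], r["pkd_akt1"]), reverse=True)


# Hypothetical platform output
rows = [
    {"id": "A", "specificity": 0.94, "pkd_akt1": 8.2},
    {"id": "B", "specificity": 0.80, "pkd_akt1": 6.5},  # fails the pKd filter
    {"id": "C", "specificity": 0.70, "pkd_akt1": 9.0},  # fails the specificity filter
    {"id": "D", "specificity": 0.78, "pkd_akt1": 7.4},
]
shortlist = prioritize(rows)
print([r["id"] for r in shortlist])
```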

Protocol 2: Biochemical Kinase Inhibition Assay (ADP-Glo)

Objective: To determine the half-maximal inhibitory concentration (IC₅₀) of prioritized compounds against purified AKT1 kinase.

- Reagent Preparation: Dilute recombinant active AKT1 kinase in assay buffer (40 mM Tris pH 7.5, 20 mM MgCl₂, 0.1 mg/mL BSA). Prepare 2X substrate/ATP solution (GSK-3β fusion protein at 2 µM, ATP at 100 µM).

- Compound Serial Dilution: Prepare 10-point, 1:3 serial dilutions of test compounds in DMSO, then dilute 1:100 in assay buffer to create 4X working stocks (1% DMSO in the stock; 0.25% DMSO in the final 20 µL reaction).

- Assay Assembly: In a white 384-well plate, add 5 µL of 4X compound or DMSO control. Add 5 µL of 4X enzyme solution. Incubate for 15 min at RT. Initiate reaction by adding 10 µL of 2X substrate/ATP solution.

- Kinase Reaction & Detection: Incubate for 60 min at 25°C. Stop reaction by adding 20 µL of ADP-Glo Reagent, incubate 40 min. Add 40 µL of Kinase Detection Reagent, incubate 30 min. Measure luminescence on a plate reader.

- Data Analysis: Calculate % inhibition relative to DMSO (100% activity) and no-enzyme (0% activity) controls. Fit dose-response curves using a 4-parameter logistic model in software like GraphPad Prism to calculate IC₅₀ values.
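The normalization and the curve model used in the data analysis step can be written out explicitly. The sketch below covers the 10-point, 1:3 dilution series, percent inhibition relative to the two controls, and the 4-parameter logistic function itself. It does not perform the fit; in practice the parameters would be estimated with a least-squares routine or in GraphPad Prism as described. Function names and example signals are illustrative.

```python
def dilution_series(top_conc, n_points=10, factor=3.0):
    """Concentrations for an n-point serial dilution, highest first."""
    return [top_conc / factor ** i for i in range(n_points)]


def percent_inhibition(signal, dmso_signal, no_enzyme_signal):
    """0% at the DMSO control (full activity), 100% at the no-enzyme control."""
    return 100.0 * (dmso_signal - signal) / (dmso_signal - no_enzyme_signal)


def four_pl(conc, bottom, top, ic50, hill):
    """4-parameter logistic: % inhibition rising from `bottom` to `top` with conc."""
    return bottom + (top - bottom) / (1.0 + (ic50 / conc) ** hill)


concs_nm = dilution_series(10000.0)  # hypothetical 10 µM top concentration
print([round(c, 2) for c in concs_nm])
print(percent_inhibition(5500.0, 10000.0, 1000.0))  # hypothetical luminescence values
print(four_pl(12.4, 0.0, 100.0, 12.4, 1.0))          # midpoint of the curve at conc = IC50
```

By construction, the model returns the midpoint between bottom and top when the concentration equals the IC₅₀, which is a quick sanity check on any fitted parameter set.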

Protocol 3: Selectivity Profiling Using a Commercial Kinase Panel

Objective: To experimentally assess the selectivity of confirmed AKT1 inhibitors across a broad kinome.

- Panel Selection: Engage a service provider (e.g., Eurofins DiscoverX, Reaction Biology) for a 98-kinase selectivity panel. Provide compounds (AKT-i-01 to AKT-i-10) at a single concentration (e.g., 1 µM) and request IC₅₀ determinations for key hits.

- Service Assay: The provider typically uses a binding assay (e.g., KINOMEscan) where compounds compete with an immobilized, active-site directed ligand. Percent control (of DMSO) is measured for each kinase.

- Data Interpretation: Receive a data report. Calculate selectivity score (S(1µM) = [Number of kinases with %Control < 10%] / [Total kinases tested]). For kinases with <50% control at 1 µM, request full dose-response to determine IC₅₀ and calculate selectivity index (SI = IC₅₀(Off-target) / IC₅₀(AKT1)).

- Heatmap Generation: Use the provider's tools or generate a kinome tree visualization to map inhibitor activity and visually identify potential off-target clusters.
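The two selectivity measures defined in the data interpretation step are simple ratios. A minimal sketch follows, assuming the provider's report has been loaded as a mapping from kinase name to percent-of-control; the panel values are hypothetical, while the IC₅₀ pair is taken from the AKT-i-02 row of Table 2.

```python
def selectivity_score(percent_control, cutoff=10.0):
    """S-score: fraction of tested kinases with %Control below the cutoff."""
    hits = sum(1 for value in percent_control.values() if value < cutoff)
    return hits / len(percent_control)


def selectivity_index(ic50_off_target_nm, ic50_target_nm):
    """SI = IC50(off-target) / IC50(AKT1); larger means more selective."""
    return ic50_off_target_nm / ic50_target_nm


def needs_dose_response(percent_control, cutoff=50.0):
    """Kinases with <50% control at the screening concentration."""
    return sorted(k for k, v in percent_control.items() if v < cutoff)


# Hypothetical single-concentration results (% of DMSO control at 1 µM)
panel = {"AKT1": 2.0, "PKA": 45.0, "PKC": 80.0, "CDK2": 95.0}
print(selectivity_score(panel))
print(needs_dose_response(panel))
print(round(selectivity_index(1450.0, 8.7)))  # AKT-i-02 values from Table 2
```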

Mandatory Visualizations

Title: AI Workflow for Kinase Lead Identification

Title: AKT1 Pathway and Inhibitor Action

Maximizing Accuracy: Troubleshooting Common Issues and Advanced Optimization Techniques

Within EZSpecificity AI research, low-confidence predictions in enzyme-substrate matching present significant hurdles for validation and downstream drug development. These predictions stem from algorithmic and data-centric limitations, requiring systematic diagnosis and remediation.

Causes of Low-Confidence Predictions

Data-Related Causes

- Sparse or Imbalanced Training Data: Limited experimental kcat or binding affinity data for non-canonical enzyme families.

- High Data Ambiguity: Substrate promiscuity and multiple potential binding conformations.

- Feature Representation Gaps: Inadequate featurization of rare catalytic residues or unusual cofactors.

Model-Related Causes

- Out-of-Distribution Inputs: Novel enzyme scaffolds or substrates not represented in training corpora.

- Architectural Limitations: Poor handling of long-range interactions within protein structures by graph neural networks.

- Calibration Errors: Model confidence scores not aligned with empirical accuracy.

Quantitative Analysis of Common Causes

Table 1: Prevalence and Impact of Causes for Low-Confidence Calls in EZSpecificity Benchmarking.

| Cause Category | Prevalence (%) | Avg. Confidence Score Drop | Typical Subclass Affected |

|---|---|---|---|

| Sparse Training Data | 45 | 0.35 | Lyases, Translocases |

| Out-of-Distribution Input | 30 | 0.52 | Engineered/Chimeric Enzymes |

| High Substrate Ambiguity | 15 | 0.28 | Promiscuous Hydrolases |

| Feature Representation Gap | 10 | 0.41 | Metalloenzymes |

Diagnostic Protocols

Protocol: Confidence Score Decomposition Analysis

Objective: Isolate contribution of data vs. model uncertainty.

Materials: EZSpecificity AI model v3.1+, benchmark dataset (e.g., BRENDA Core), uncertainty quantification toolkit (e.g., EpistemicNet).

Procedure:

- Inference with Dropout: Run prediction on low-confidence case with Monte Carlo dropout (100 iterations).

- Variance Calculation: Compute predictive variance (σ²_total). High variance indicates epistemic (model) uncertainty.

- Aleatoric Uncertainty Estimation: Use a trained noise-estimating head to calculate data-inherent uncertainty (σ²_aleatoric).

- Decomposition: σ²_epistemic = σ²_total - σ²_aleatoric.

- Threshold: If σ²_epistemic > 0.7, flag for model retraining. If σ²_aleatoric > 0.7, flag for data augmentation.
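One common way to realize this decomposition is the law of total variance: each Monte Carlo dropout pass yields a predicted score and a predicted noise variance, the mean of the noise variances is taken as σ²_aleatoric, and σ²_total is the variance of the predicted scores plus that mean. The sketch below follows that reading, which is an assumption about how the protocol is implemented; the function names and inputs are illustrative, and the 0.7 thresholds are applied as given, which presumes the uncertainties are on a scale where that cutoff is meaningful.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(mc_scores, mc_noise_vars):
    """Split total predictive variance into aleatoric and epistemic parts.

    mc_scores: predicted score from each dropout pass.
    mc_noise_vars: noise variance predicted by the noise head in each pass.
    """
    aleatoric = fmean(mc_noise_vars)
    total = pvariance(mc_scores) + aleatoric
    epistemic = total - aleatoric  # the decomposition stated in the protocol
    return {"total": total, "aleatoric": aleatoric, "epistemic": epistemic}


def recommend(uncertainty, threshold=0.7):
    """Map each uncertainty component to the remediation named in the protocol."""
    actions = []
    if uncertainty["epistemic"] > threshold:
        actions.append("model retraining")
    if uncertainty["aleatoric"] > threshold:
        actions.append("data augmentation")
    return actions


# Hypothetical outputs from four dropout passes
result = decompose_uncertainty([0.2, 0.4, 0.6, 0.8], [0.1, 0.1, 0.1, 0.1])
print(result, recommend(result))
```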

Protocol: Out-of-Distribution (OOD) Detector Calibration

Objective: Flag inputs outside the model's training domain.

Materials: Pre-trained encoder (EZSpecificity feature extractor), calibration set of known in-distribution samples, Mahalanobis distance calculator.

Procedure:

- Feature Extraction: Generate latent space vectors for all training set samples.

- Compute Class Centroids: Calculate mean feature vector for each enzyme commission (EC) number class.

- Calculate Covariance: Compute the shared covariance matrix across all classes.

- Detect OOD: For a new query sample, compute Mahalanobis distance to nearest class centroid. Flag if distance > 95th percentile of training distribution.
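The four steps above can be sketched in pure Python on toy data. This is a minimal illustration, assuming the latent vectors are short enough that a small Gauss-Jordan solver is adequate (a real pipeline would use a linear algebra library), and using a simple index-based 95th percentile; the two-dimensional vectors and EC labels are hypothetical.

```python
def centroid(rows):
    """Mean feature vector of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def pooled_covariance(classes, centroids):
    """Shared covariance: deviations are taken from each class's own centroid."""
    dim = len(next(iter(centroids.values())))
    cov = [[0.0] * dim for _ in range(dim)]
    count = 0
    for label, rows in classes.items():
        for row in rows:
            dev = [x - m for x, m in zip(row, centroids[label])]
            for i in range(dim):
                for j in range(dim):
                    cov[i][j] += dev[i] * dev[j]
            count += 1
    return [[v / count for v in row] for row in cov]


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular; regularize it first")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def mahalanobis(x, mu, cov):
    """sqrt(dev^T cov^-1 dev), computed via a linear solve instead of an inverse."""
    dev = [a - b for a, b in zip(x, mu)]
    squared = sum(d * s for d, s in zip(dev, solve(cov, dev)))
    return max(0.0, squared) ** 0.5


def nearest_distance(x, centroids, cov):
    return min(mahalanobis(x, mu, cov) for mu in centroids.values())


# Toy 2-D latent vectors keyed by EC class (hypothetical values)
classes = {
    "3.4.21": [[0, 0], [1, 0], [0, 1], [1, 1]],
    "2.7.11": [[5, 5], [6, 5], [5, 6], [6, 6]],
}
centroids = {label: centroid(rows) for label, rows in classes.items()}
cov = pooled_covariance(classes, centroids)
train = sorted(nearest_distance(r, centroids, cov)
               for rows in classes.values() for r in rows)
threshold = train[int(0.95 * (len(train) - 1))]  # simple 95th-percentile cut


def is_ood(x):
    """Flag a query whose nearest-centroid distance exceeds the training threshold."""
    return nearest_distance(x, centroids, cov) > threshold


print(is_ood([3, 3]), is_ood([0.5, 0.6]))
```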

Remedial Strategies & Experimental Protocols

Strategy: Active Learning for Targeted Data Augmentation

Rationale: Iteratively improve model by querying the most informative new data points.

Protocol:

- Pool Selection: Identify all low-confidence predictions from a screening run.

- Query Strategy: Use Bayesian optimization to select samples with highest expected model change.

- Wet-Lab Validation: Perform high-throughput microfluidics kinetic assays (see Toolkit) on selected enzyme-substrate pairs.

- Model Update: Retrain model on augmented dataset. Re-evaluate confidence scores.
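
The pool selection and query steps can be illustrated with a simple acquisition function. The sketch below deliberately swaps in predictive entropy as a cheap stand-in for "expected model change", which is harder to compute. The function names, the 0.7 confidence cutoff, and the example pairs are assumptions for illustration only.

```python
import math


def binary_entropy(p):
    """Entropy (in bits) of a yes/no prediction with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def select_queries(predictions, confidence_cutoff=0.7, batch_size=3):
    """Pick the most uncertain low-confidence pairs for wet-lab validation.

    predictions: dict mapping (enzyme, substrate) to predicted probability.
    """
    # Pool selection: keep pairs whose winning class is below the cutoff.
    pool = {
        pair: p
        for pair, p in predictions.items()
        if max(p, 1 - p) < confidence_cutoff
    }
    # Query strategy (proxy): rank by entropy, most uncertain first.
    ranked = sorted(pool, key=lambda pair: binary_entropy(pool[pair]), reverse=True)
    return ranked[:batch_size]


# Hypothetical screening output.
screen = {
    ("E1", "S1"): 0.95,
    ("E1", "S2"): 0.52,
    ("E2", "S1"): 0.35,
    ("E2", "S3"): 0.60,
    ("E3", "S2"): 0.05,
}
print(select_queries(screen, batch_size=2))
```

The selected pairs would then go to the kinetic assays, and the measured labels are added to the training set before retraining.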

Strategy: Hybrid Model Fusion

Rationale: Combine EZSpecificity's deep learning with physics-based simulators to constrain predictions.

Protocol:

- Docking Pipeline: For a low-confidence AI prediction, perform rapid molecular docking (e.g., using Vina) of the substrate into the active site.

- Consensus Scoring: Generate a hybrid score: S_hybrid = 0.7 * S_AI + 0.3 * S_docking.

- Re-calibration: Apply Platt scaling using a held-out validation set to recalibrate S_hybrid into a confidence probability.
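
A minimal sketch of consensus scoring and re-calibration follows. It assumes S_docking has already been rescaled to the 0-1 range so that it can be mixed with S_AI; raw docking energies would need such a normalization first. The Platt step here is a plain sigmoid fit by gradient descent on log-loss, without the target smoothing of the original method. All function names and the validation values are hypothetical.

```python
import math


def hybrid_score(s_ai, s_docking, w_ai=0.7, w_docking=0.3):
    """Weighted consensus of the AI score and a normalized docking score."""
    return w_ai * s_ai + w_docking * s_docking


def fit_platt(scores, labels, lr=0.5, steps=5000):
    """Fit p = sigmoid(a * score + b) on a held-out validation set."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(-(a * s + b)))
            grad_a += (p - y) * s
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def calibrate(score, a, b):
    """Map a hybrid score to a calibrated confidence probability."""
    return 1.0 / (1.0 + math.exp(-(a * score + b)))


# Hypothetical validation set: hybrid scores and observed activity (1 = active).
val_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
val_labels = [0, 0, 1, 0, 1, 0, 1, 1]
a, b = fit_platt(val_scores, val_labels)
print(calibrate(hybrid_score(0.8, 0.4), a, b))
```

The 0.7/0.3 weights are those given in the protocol. Exposing them as parameters allows them to be tuned on the same validation set.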

Visualization of Workflows and Pathways

Diagram: Low-Confidence Diagnosis and Remediation Workflow

Diagram: Active Learning Cycle for EZSpecificity

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Validation and Remediation

| Reagent/Kit/Equipment | Vendor (Example) | Function in Context |

|---|---|---|

| EZ-Spec HT Microfluidics Assay Chip | Fluxus Bio | Enables high-throughput measurement of enzyme kinetics (kcat, KM) for 100s of low-confidence pairs. |

| MetaEnzyme Library | ProteinTech | A curated library of 500+ purified, promiscuous, and engineered enzymes for active learning validation. |

| Uncertainty Quantification Suite (UQS) for PyTorch | Open Source (epistemic-net) | Software toolkit for decomposing model vs. data uncertainty, as per Protocol 3.1. |

| DynaFold-ActiveSite Module | DeepMind ISV | Physics-based protein structure prediction focused on active site conformation for hybrid modeling. |

| BRENDA Core Kinetic Dataset (v2024.1) | BRENDA Team | Gold-standard, curated dataset for training and benchmarking enzyme-substrate predictions. |

| Cofactor Mimetic Screening Buffer Set | Sigma-Aldrich | Buffer solutions containing rare cofactor analogs to test feature representation gaps. |

Handling Non-Standard or Poorly Characterized Enzyme Families

Within the broader thesis on the EZSpecificity AI tool for enzyme-substrate matching, a significant challenge arises when dealing with enzyme families that lack standard classification, clear mechanistic data, or well-defined substrate profiles. These "non-standard" families, including many from understudied organisms or metagenomic sources, are recalcitrant to traditional bioinformatic prediction. This document provides application notes and protocols for leveraging the EZSpecificity platform and complementary experimental strategies to characterize these enigmatic enzymes, enabling their application in drug discovery and biocatalysis.

Application Notes for EZSpecificity AI

EZSpecificity AI uses a multi-modal neural network trained on structural alignments, sequence motifs, and chemical descriptor data from characterized enzyme-substrate pairs. For poorly characterized families, the tool operates in a low-confidence prediction mode, prioritizing potential substrate scaffolds for empirical validation.

Key Outputs for Non-Standard Families:

- Similarity-Distance Metric: Quantifies the structural and sequence divergence from the nearest well-characterized enzyme family.

- Probabilistic Substrate Mapping: Ranks potential substrate classes with an associated confidence score (0-1).

- Active Site Residual Feature Prediction: Highlights putative catalytic residues despite low overall sequence homology.

Table 1: EZSpecificity AI Output Interpretation Guide

| Output Metric | Range/Type | Interpretation for Poorly Characterized Families |

|---|---|---|

| Family Similarity Score | 0.0 (No similarity) to 1.0 (High similarity) | Scores <0.3 indicate a highly divergent family requiring de novo characterization. |

| Top Substrate Confidence | 0.0 (Low) to 1.0 (High) | Confidence <0.7 necessitates broad, unbiased substrate screening (e.g., metabolomic arrays). |

| Predicted Catalytic Residues | Amino Acid Positions | Prioritize these for site-directed mutagenesis validation experiments. |

| Recommended Assay Type | Categorical (e.g., Colorimetric, HPLC-MS, NMR) | Suggests initial biochemical assay based on predicted chemistry. |
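
The thresholds in the interpretation guide can be turned into a small triage helper. The sketch below is illustrative only: the function name and the fallback action for well-supported predictions are assumptions, while the 0.3 and 0.7 cutoffs come from the table.

```python
def triage(family_similarity, top_substrate_confidence):
    """Suggest follow-up actions from two EZSpecificity output metrics."""
    actions = []
    if family_similarity < 0.3:
        actions.append("de novo characterization")
    if top_substrate_confidence < 0.7:
        actions.append("broad unbiased substrate screening")
    if not actions:
        # Assumption: confident predictions go straight to targeted assays.
        actions.append("targeted validation of top-ranked substrates")
    return actions


print(triage(family_similarity=0.22, top_substrate_confidence=0.55))
```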

Protocols for Empirical Characterization

Protocol 1: Coupled In Silico & Functional Screening Workflow

Objective: To empirically determine the activity of a putative hydrolase from a poorly characterized family (e.g., candidate from metagenomic data).

Materials:

- Purified recombinant enzyme of interest.

- Broad-spectrum fluorogenic substrate library (e.g., esterase, phosphatase, glycosidase substrates).

- HPLC-MS system with diode array detector.

- EZSpecificity AI-generated substrate shortlist.

Procedure:

- AI-Guided Library Curation: Input the enzyme sequence into EZSpecificity. Combine the top 50 predicted substrate scaffolds with a generic, broad-specificity fluorogenic substrate library.

- Primary High-Throughput Screening: Perform 96-well plate assays with each substrate at 100 µM, enzyme at 50 nM, in appropriate buffer. Monitor fluorescence over 30 minutes.

- Hit Validation: For any fluorescent hit, confirm activity using LC-MS. Incubate enzyme with suspected natural substrate analogs (1 mM). Analyze reaction products for mass shift corresponding to predicted reaction (e.g., hydrolysis).

- Kinetic Analysis: For validated hits, perform Michaelis-Menten kinetics to determine kcat and KM.
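
For the kinetic analysis step, kcat and KM can be estimated from initial-rate data. The sketch below uses the Hanes-Woolf linearization ([S]/v = [S]/Vmax + KM/Vmax) with an ordinary least-squares line, chosen here because it needs no external libraries. Nonlinear regression is generally preferred for real, noisy data. The function name and the synthetic data are assumptions.

```python
def fit_michaelis_menten(substrate_conc, rates, enzyme_conc):
    """Estimate (kcat, KM) from initial rates via a Hanes-Woolf fit.

    substrate_conc and enzyme_conc share one concentration unit;
    rates are in that unit per second, so kcat comes out in 1/s.
    """
    xs = list(substrate_conc)
    ys = [s / v for s, v in zip(substrate_conc, rates)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    vmax = 1.0 / slope        # slope = 1 / Vmax
    km = intercept * vmax     # intercept = KM / Vmax
    kcat = vmax / enzyme_conc
    return kcat, km


# Synthetic, noise-free rates generated with Vmax = 2.0 uM/s and KM = 50 uM.
conc = [10.0, 25.0, 50.0, 100.0, 200.0, 400.0]          # uM
rates = [2.0 * s / (50.0 + s) for s in conc]            # uM/s
kcat, km = fit_michaelis_menten(conc, rates, enzyme_conc=0.05)  # 50 nM enzyme
print(round(kcat, 3), round(km, 3))
```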

Research Reagent Solutions

| Item | Function |

|---|---|

| 4-Methylumbelliferyl (4-MU) Substrate Library | Broad-coverage fluorogenic esters/phosphates/glycosides for initial activity detection. |

| HisTrap HP Column | Standardized purification of His-tagged recombinant enzymes for consistent experimental input. |

| Generic Activity Buffer Screen Kit | Pre-formulated buffers across pH 4-10 to identify optimal activity conditions without prior knowledge. |

| Synergy HT Multi-Mode Microplate Reader | Enables simultaneous fluorescence, absorbance, and luminescence readouts from primary screens. |

Diagram: Functional Screening Workflow for Uncharacterized Enzymes

Protocol 2: Structural Validation of Predicted Active Sites

Objective: To test EZSpecificity's prediction of catalytic residues in a novel kinase-like fold with poor homology to canonical families.

Materials:

- Wild-type enzyme expression plasmid.

- Site-directed mutagenesis kit.

- ATPγS (Adenosine 5'-O-[gamma-thio]triphosphate) or other activity-based probe.

- Mass spectrometry equipment.

Procedure:

- Residue Selection: Identify 3-4 predicted essential catalytic residues (e.g., a putative general base or phosphate-coordinating residue) from the EZSpecificity 'Residual Feature' output.

- Alanine Scanning Mutagenesis: Generate single-point mutants (e.g., D120A, K154A) for each selected residue.

- Activity-Based Profiling (ABP): Incubate wild-type and mutant enzymes with an ATPγS probe. Catalytically active enzymes will transfer the thiophosphate group to themselves or a substrate, creating a mass shift detectable by MS.

- Analysis: Compare ABP labeling between wild-type and mutants. Loss of labeling in a specific mutant confirms the essential role of that residue.

Table 2: Expected Outcomes from Catalytic Residue Validation

| Mutant | ABP Labeling (Relative to WT) | Structural Inference |

|---|---|---|

| Wild-Type | 100% | Baseline activity. |

| Putative General Base Mutant (e.g., D120A) | <5% | Residue is essential for catalysis. |

| Putative Stabilizing Residue Mutant (e.g., K154A) | 10-50% | Residue contributes to transition state stabilization or binding. |

| Control Distal Residue Mutant | 75-100% | Residue is not critical for core catalysis. |
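
The expected-outcome ranges in the table can be applied programmatically when many mutants are screened. The helper below is a sketch with a hypothetical function name. Labeling values that fall between the tabulated ranges (5-10% and 50-75%) are reported as inconclusive rather than forced into a category, and the example values are illustrative, not measurements.

```python
def interpret_mutant(labeling_percent):
    """Map ABP labeling (percent of wild-type) to a structural inference."""
    if labeling_percent < 5:
        return "essential for catalysis"
    if 10 <= labeling_percent <= 50:
        return "contributes to transition state stabilization or binding"
    if 75 <= labeling_percent <= 100:
        return "not critical for core catalysis"
    # Outside the tabulated ranges: leave undecided and repeat the assay.
    return "inconclusive"


# Illustrative labeling values only.
for mutant, labeling in {"D120A": 3.0, "K154A": 32.0, "distal control": 91.0}.items():
    print(mutant, "->", interpret_mutant(labeling))
```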

Diagram: Validating AI-Predicted Catalytic Residues

Integrating Data into EZSpecificity

All empirical data generated from these protocols must be fed back into the EZSpecificity training corpus. This creates a positive feedback loop, improving the tool's predictive accuracy for related uncharacterized families.

Feedback Protocol:

- Format validated substrate and kinetic data according to the EZSpecificity Submission Schema.

- Submit high-resolution crystal structure or validated homology model (if generated).

- Annotate confirmed catalytic residues and mechanism.

- The tool's internal model is retrained periodically, enhancing its predictions for the broader research community.

This integrated, iterative approach of AI-guided hypothesis generation followed by rigorous experimental validation provides a robust framework for transforming poorly characterized enzyme families from unknowns into tools for drug discovery and synthetic biology.

This Application Note details experimental protocols and parameter optimization strategies for enzyme-substrate matching using the EZSpecificity AI tool. Within the broader thesis on AI-driven enzyme engineering, this document addresses two distinct data provenance scenarios: (1) enzymes derived from metagenomic sequencing of complex microbial communities, and (2) engineered variant libraries created via directed evolution or rational design. Each scenario presents unique challenges for model training and prediction, requiring tailored parameterization to maximize matching accuracy for drug discovery pipelines.

Core Parameter Optimization: A Comparative Analysis

The EZSpecificity AI tool utilizes a deep learning architecture combining convolutional neural networks (CNNs) for sequence feature extraction with attention mechanisms to map enzyme sequences to substrate profiles. Optimal hyperparameters differ significantly between data types.

Table 1: Optimized Model Parameters for Different Data Scenarios

| Parameter | Metagenomic Data Recommendation | Engineered Variants Recommendation | Rationale |

|---|---|---|---|

| Sequence Identity Threshold | ≤ 40% for training clusters | ≥ 70% for training clusters | Metagenomic data is highly diverse; lower threshold captures distant homology. Engineered libraries are tightly focused around a parent scaffold. |

| Training Epochs | 150-200 | 50-100 | Metagenomic data is noisier and more complex, requiring longer training for convergence. Variant data is cleaner and more homogeneous. |

| Dropout Rate | 0.5 - 0.7 | 0.2 - 0.4 | High dropout prevents overfitting to spurious correlations in noisy metagenomic data. Lower dropout is sufficient for more structured variant data. |

| Substrate Embedding Dimension | 256 | 128 | Metagenomic enzymes may have broad, unpredictable promiscuity, requiring higher-dimensional substrate representation. Variants often probe specific substrate niches. |

| Learning Rate | 0.0005 | 0.001 | Slower learning aids in navigating the complex loss landscape of diverse metagenomic data. Faster learning is effective for variant data. |

| Batch Size | 32 | 64 | Smaller batches provide more frequent gradient updates for heterogeneous data. Larger batches stabilize training for homogeneous variants. |
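
The recommendations in the table can be captured as configuration presets so that training runs are reproducible. The sketch below is an assumed representation and does not reflect the actual EZSpecificity configuration format. The class name, field names, and the use of range midpoints as a starting point are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingPreset:
    # Clustering identity for training clusters: an upper bound (<=) for
    # metagenomic data, a lower bound (>=) for engineered variants.
    identity_threshold: float
    epochs: tuple                  # (min, max) recommended range
    dropout: tuple                 # (min, max) recommended range
    substrate_embedding_dim: int
    learning_rate: float
    batch_size: int

    def starting_point(self):
        """Use range midpoints as a first configuration to tune from."""
        return {
            "epochs": sum(self.epochs) // 2,
            "dropout": sum(self.dropout) / 2,
            "substrate_embedding_dim": self.substrate_embedding_dim,
            "learning_rate": self.learning_rate,
            "batch_size": self.batch_size,
        }


PRESETS = {
    "metagenomic": TrainingPreset(0.40, (150, 200), (0.5, 0.7), 256, 0.0005, 32),
    "engineered_variants": TrainingPreset(0.70, (50, 100), (0.2, 0.4), 128, 0.001, 64),
}

print(PRESETS["metagenomic"].starting_point())
```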

Experimental Protocols

Protocol 3.1: Curating a Metagenomic Enzyme Dataset for EZSpecificity Training

Objective: To assemble a high-quality, non-redundant training set from public metagenomic databases for AI model training.

Materials: High-performance computing cluster, sequence curation tools (HMMER, CD-HIT), meta-databases (MGnify, IMG/M), substrate activity databases (BRENDA, MetXBioDB).

Procedure:

- Bulk Retrieval: Query MGnify/IMG/M for putative enzyme sequences (e.g., amidases, kinases) from environmental samples. Include associated metadata (pH, temperature, habitat).

- Quality Filtering: Retain sequences from genome bins with ≥ 75% estimated completeness (CheckM). Remove sequences with ambiguous residues (X).

- Activity Annotation: Cross-reference with BRENDA and MetXBioDB using EC numbers. Manually curate entries where in vitro substrate activity data is explicitly linked to a metagenomic sequence.

- De-replication: Cluster sequences at 40% identity using CD-HIT. Select the longest sequence from each cluster as a representative.

- Substrate Vectorization: Encode confirmed substrates into a binary presence/absence vector for each enzyme. Use the comprehensive substrate list from MetaCyc.